CN 11-4766/R

Sponsor: Institute of Psychology, Chinese Academy of Sciences

Publisher: Science Press

Advances in Psychological Science, 2023, Vol. 31, Issue 11: 2050-2062. doi: 10.3724/SP.J.1042.2023.02050; CSTR: 32111.14.2023.02050

Received: 2023-02-06

Published: 2023-11-15

Online: 2023-08-28

Corresponding author: WEI Yipu (魏一璞), E-mail: weiyipu@pku.edu.cn

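Abstract:

The visual world paradigm is an eye-tracking paradigm that studies real-time spoken language processing by tracking and measuring where listeners fixate among visual objects over time. Its use in language comprehension research rests on linking hypotheses about eye movements (e.g., the coordinated interplay account and the goal-based linking hypothesis), which establish a meaningful relationship between fixation patterns and the unfolding of spoken language processing. Data collected with the visual world paradigm provide precise temporal information about spoken language processing; common analysis methods include comparing mean fixation proportions within time windows of interest, divergence point analysis, and growth curve analysis. The paradigm has provided key evidence on spoken word recognition, syntactic ambiguity resolution, semantic comprehension, and the processing of discourse and pragmatic information.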
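The time-window and divergence-point analyses named above can be illustrated with a minimal Python sketch. It assumes fixation proportions have already been aggregated per participant into equal-width time bins; the function names, the t threshold of 2.0, and the three-consecutive-bin criterion are illustrative assumptions, not values from this article. Published divergence point analyses (e.g., Stone et al., 2021) typically also resample participants to put a confidence interval on the onset; that step is omitted here.

```python
import math
from statistics import mean, stdev


def window_mean(curve, bins, start_ms, end_ms):
    """Mean of one fixation-proportion curve over bins with start_ms <= bin onset < end_ms."""
    values = [p for b, p in zip(bins, curve) if start_ms <= b < end_ms]
    return mean(values)


def paired_t(xs, ys):
    """Paired t statistic for per-participant values in two conditions."""
    diffs = [x - y for x, y in zip(xs, ys)]
    m, sd = mean(diffs), stdev(diffs)
    if sd == 0:
        # No variability: no evidence (0) or an unbounded effect in the sign of m.
        return 0.0 if m == 0 else math.copysign(math.inf, m)
    return m / (sd / math.sqrt(len(diffs)))


def divergence_point(cond_a, cond_b, bins, t_crit=2.0, run=3):
    """Onset (ms) of the first run of `run` consecutive bins where A exceeds B.

    cond_a, cond_b: one per-bin proportion curve per participant, same order in both.
    Simplified criterion (assumption): paired t >= t_crit in every bin of the run.
    Returns None if no such run exists.
    """
    streak = 0
    for i in range(len(bins)):
        t = paired_t([c[i] for c in cond_a], [c[i] for c in cond_b])
        streak = streak + 1 if t >= t_crit else 0
        if streak == run:
            return bins[i - run + 1]
    return None


# Toy data: 4 participants, 50 ms bins from 0 to 950 ms; condition A diverges at 300 ms.
bins = list(range(0, 1000, 50))
cond_b = [[0.25] * len(bins) for _ in range(4)]
cond_a = [[0.25 + (0.10 + 0.02 * k if b >= 300 else 0.0) for b in bins] for k in range(4)]

print(window_mean(cond_a[0], bins, 300, 500))  # mean over the 300-450 ms bins
print(divergence_point(cond_a, cond_b, bins))  # prints 300
```

A window mean compresses each curve into one number per participant, which suits a standard condition comparison but discards timing within the window; the divergence point instead asks when the conditions first separate.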


Cite this article: WEI Yipu. (2023). Visual world paradigm reveals the time course of spoken language processing. Advances in Psychological Science, 31(11), 2050-2062.

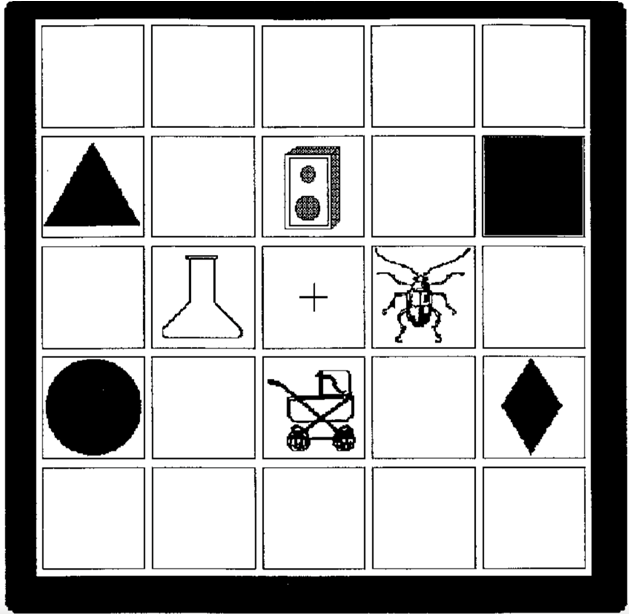

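Figure 2. Schematic of the visual stimuli in a visual world paradigm experiment. Note: The spoken instruction was beaker. The four objects used for measurement were: left, the target referent (beaker); right, the phonological cohort competitor (beetle); top, the rhyme competitor (speaker); bottom, the unrelated distractor (carriage). Source: Allopenna et al. (1998), used with permission.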
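Before fixation proportions can be computed, each gaze sample has to be assigned to one of the four interest areas in a display like Figure 2. The sketch below assumes a hypothetical 1024 x 768 px screen (origin at the top-left corner, y increasing downward) with 200 x 200 px rectangular interest areas at the left, right, top and bottom positions; the coordinates and the `classify_gaze` helper are illustrative and not taken from Allopenna et al. (1998).

```python
from typing import Dict, Optional, Tuple

Box = Tuple[float, float, float, float]  # (left, top, right, bottom) in screen pixels

# Hypothetical layout mimicking Figure 2; each interest area is a 200 x 200 px box.
INTEREST_AREAS: Dict[str, Box] = {
    "referent": (62, 284, 262, 484),    # left: beaker
    "cohort": (762, 284, 962, 484),     # right: beetle
    "rhyme": (412, 34, 612, 234),       # top: speaker
    "unrelated": (412, 534, 612, 734),  # bottom: carriage
}


def classify_gaze(x: float, y: float, areas: Dict[str, Box] = INTEREST_AREAS) -> Optional[str]:
    """Return the interest area containing gaze point (x, y), or None if it lies outside all of them."""
    for name, (left, top, right, bottom) in areas.items():
        if left <= x <= right and top <= y <= bottom:
            return name
    return None


print(classify_gaze(162, 384))  # referent
print(classify_gaze(512, 384))  # None (screen centre, no object there)
```

In a real experiment, object positions would typically be counterbalanced across trials, so the mapping from screen position to role (referent, cohort, and so on) would be looked up per trial rather than fixed as here.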

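Figure 3. Schematic of data from a spoken word recognition task using the visual world paradigm. Note: x-axis: time (0 to 1000 ms) from the onset of the target word; y-axis: fixation proportion. The four curves show the proportion of fixations to the target referent (beaker), the cohort competitor (beetle), the rhyme competitor (speaker), and the unrelated distractor (carriage). Source: Allopenna et al. (1998), used with permission.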
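Curves like those in Figure 3 are obtained by binning gaze samples relative to target-word onset and computing, for each bin, the share of samples that fall on each interest area. The sketch below assumes sample-level input already labelled by interest area; the 50 ms default bin width, the function name, and the choice to count off-object samples in the denominator are assumptions for illustration, and real studies differ on these choices.

```python
AREAS = ("referent", "cohort", "rhyme", "unrelated")


def fixation_proportions(trials, bin_ms=50, end_ms=1000, areas=AREAS):
    """Per-bin proportion of gaze samples on each interest area.

    trials: list of trials; each trial is a list of (time_ms, area) samples, where
    time_ms is measured from target-word onset and area is a label or None.
    Samples outside every area (None) still count toward the denominator.
    Returns {area: [proportion for each bin]}.
    """
    n_bins = end_ms // bin_ms
    counts = {a: [0] * n_bins for a in areas}
    totals = [0] * n_bins
    for samples in trials:
        for time_ms, area in samples:
            # Keep only samples inside the analysed window of whole bins.
            if 0 <= time_ms < n_bins * bin_ms:
                b = int(time_ms // bin_ms)
                totals[b] += 1
                if area in counts:
                    counts[area][b] += 1
    return {
        a: [c / n if n else 0.0 for c, n in zip(counts[a], totals)]
        for a in areas
    }


# Two toy trials sampled every 60 ms; real eye trackers sample far more densely.
trials = [
    [(0, "referent"), (60, "cohort"), (120, "referent")],
    [(10, "cohort"), (70, "cohort"), (130, None)],
]
curves = fixation_proportions(trials, bin_ms=50, end_ms=150)
print(curves["referent"])  # [0.5, 0.0, 0.5]
print(curves["cohort"])    # [0.5, 1.0, 0.0]
```

Pooling samples across trials, as here, weights trials by their number of valid samples; computing proportions per trial or per participant first and then averaging is a common alternative.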

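| [1] | Lin, T., & Wang, J. (2018). Advances in research on spoken word comprehension based on the visual world paradigm (in Chinese). Psychology: Techniques and Applications, 6(9), 570-576. |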

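| [2] | Qiu, L., Wang, S., & Guan, X. (2009). Research on the visual world paradigm in spoken language comprehension (in Chinese). Journal of South China Normal University, (1), 130-136. |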

| [3] | Allopenna, P. D., Magnuson, J. S., & Tanenhaus, M. K. (1998). Tracking the time course of spoken word recognition using eye movements: Evidence for continuous mapping models. Journal of Memory and Language, 38(4), 419-439. https://doi.org/10.1006/jmla.1997.2558 |

| [4] | Altmann, G. T. M. (2004). Language-mediated eye movements in the absence of a visual world: The “blank screen paradigm”. Cognition, 93(2), 79-87. https://doi.org/10.1016/j.cognition.2004.02.005 |

| [5] | Altmann, G. T. M., & Kamide, Y. (1999). Incremental interpretation at verbs: Restricting the domain of subsequent reference. Cognition, 73(3), 247-264. https://doi.org/10.1016/s0010-0277(99)00059-1 |

| [6] | Altmann, G. T. M., & Kamide, Y. (2007). The real-time mediation of visual attention by language and world knowledge: Linking anticipatory (and other) eye movements to linguistic processing. Journal of Memory and Language, 57(4), 502-518. https://doi.org/10.1016/j.jml.2006.12.004 |

| [7] | Altmann, G. T. M., & Mirković, J. (2009). Incrementality and prediction in human sentence processing. Cognitive Science, 33(4), 583-609. https://doi.org/10.1111/j.1551-6709.2009.01022.x |

| [8] | Arnold, J. E., Eisenband, J. G., Brown-Schmidt, S., & Trueswell, J. C. (2000). The rapid use of gender information: Evidence of the time course of pronoun resolution from eyetracking. Cognition, 76(1), B13-B26. https://doi.org/10.1016/s0010-0277(00)00073-1 |

| [9] | Barr, D. J., Jackson, L., & Phillips, I. (2014). Using a voice to put a name to a face: The psycholinguistics of proper name comprehension. Journal of Experimental Psychology: General, 143(1), 404-413. https://doi.org/10.1037/a0031813 |

| [10] | Canseco-Gonzalez, E., Brehm, L., Brick, C. A., Brown-Schmidt, S., Fischer, K., & Wagner, K. (2010). Carpet or cárcel: The effect of age of acquisition and language mode on bilingual lexical access. Language and Cognitive Processes, 25(5), 669-705. https://doi.org/10.1080/01690960903474912 |

| [11] | Chambers, C. G., Tanenhaus, M. K., Eberhard, K. M., Filip, H., & Carlson, G. N. (2002). Circumscribing referential domains during real-time language comprehension. Journal of Memory and Language, 47(1), 30-49. https://doi.org/10.1006/jmla.2001.2832 |

| [12] | Chambers, C. G., Tanenhaus, M. K., & Magnuson, J. S. (2004). Actions and affordances in syntactic ambiguity resolution. Journal of Experimental Psychology: Learning, Memory, and Cognition, 30(3), 687-696. https://doi.org/10.1037/0278-7393.30.3.687 |

| [13] | Chow, W. Y., & Chen, D. (2020). Predicting (in)correctly: Listeners rapidly use unexpected information to revise their predictions. Language, Cognition and Neuroscience, 35(9), 1149-1161. https://doi.org/10.1080/23273798.2020.1733627 |

| [14] | Cooper, R. M. (1974). The control of eye fixation by the meaning of spoken language. Cognitive Psychology, 6(1), 84-107. https://doi.org/10.1016/0010-0285(74)90005-X |

| [15] | Corps, R. E., Brooke, C., & Pickering, M. J. (2021). Prediction involves two stages: Evidence from visual-world eye-tracking. Journal of Memory and Language, 122, 104298. https://doi.org/10.1016/j.jml.2021.104298 |

| [16] | Cozijn, R., Commandeur, E., Vonk, W., & Noordman, L. G. (2011). The time course of the use of implicit causality information in the processing of pronouns: A visual world paradigm study. Journal of Memory and Language, 64(4), 381-403. https://doi.org/10.1016/j.jml.2011.01.001 |

| [17] | Dahan, D., & Tanenhaus, M. K. (2004). Continuous mapping from sound to meaning in spoken-language comprehension: Immediate effects of verb-based thematic constraints. Journal of Experimental Psychology: Learning, Memory, and Cognition, 30(2), 498-513. https://doi.org/10.1037/0278-7393.30.2.498 |

| [18] | Degen, J., & Tanenhaus, M. K. (2015). Processing scalar implicature: A constraint-based approach. Cognitive Science, 39(4), 667-710. https://doi.org/10.1111/cogs.12171 |

| [19] | Degen, J., & Tanenhaus, M. K. (2016). Availability of alternatives and the processing of scalar implicatures: A visual world eye-tracking study. Cognitive Science, 40(1), 172-201. https://doi.org/10.1111/cogs.12227 |

| [20] | DeLong, K. A., Urbach, T. P., & Kutas, M. (2005). Probabilistic word pre-activation during language comprehension inferred from electrical brain activity. Nature Neuroscience, 8(8), 1117-1121. https://doi.org/10.1038/nn1504 |

| [21] | Eichert, N., Peeters, D., & Hagoort, P. (2018). Language-driven anticipatory eye movements in virtual reality. Behavior Research Methods, 50(3), 1102-1115. https://doi.org/10.3758/s13428-017-0929-z |

| [22] | Ferreira, F., Foucart, A., & Engelhardt, P. E. (2013). Language processing in the visual world: Effects of preview, visual complexity, and prediction. Journal of Memory and Language, 69(3), 165-182. https://doi.org/10.1016/j.jml.2013.06.001 |

| [23] | Frazier, L. (1987). Sentence processing: A tutorial review. In M. Coltheart (Ed.), Attention and performance XII: The psychology of reading (pp. 559-586). Lawrence Erlbaum Associates. |

| [24] | Gardner, B., Dix, S., Lawrence, R., Morgan, C., Sullivan, A., & Kurumada, C. (2021). Online pragmatic interpretations of scalar adjectives are affected by perceived speaker reliability. PLoS ONE, 16(2), e0245130. https://doi.org/10.1371/journal.pone.0245130 |

| [25] | Garnham, A., Traxler, M., Oakhill, J., & Gernsbacher, M. A. (1996). The locus of implicit causality effects in comprehension. Journal of Memory and Language, 35(4), 517-543. https://doi.org/10.1006/jmla.1996.0028 |

| [26] | Grüter, T., Lau, E., & Ling, W. (2020). How classifiers facilitate predictive processing in L1 and L2 Chinese: The role of semantic and grammatical cues. Language, Cognition and Neuroscience, 35(2), 221-234. https://doi.org/10.1080/23273798.2019.1648840 |

| [27] | Hanna, J. E., & Tanenhaus, M. K. (2004). Pragmatic effects on reference resolution in a collaborative task: Evidence from eye movements. Cognitive Science, 28(1), 105-115. https://doi.org/10.1016/j.cogsci.2003.10.002 |

| [28] | Henderson, J. M., & Ferreira, F. (2004). Scene perception for psycholinguists. In J. M. Henderson & F. Ferreira (Eds.), The interface of language, vision, and action: Eye movements and the visual world (pp. 1-58). Psychology Press. https://doi.org/10.4324/9780203488430 |

| [29] | Henry, N., Jackson, C. N., & Hopp, H. (2022). Cue coalitions and additivity in predictive processing: The interaction between case and prosody in L2 German. Second Language Research, 38(3), 397-422. https://doi.org/10.1177/0267658320963151 |

| [30] | Heyselaar, E., Peeters, D., & Hagoort, P. (2020). Do we predict upcoming speech content in naturalistic environments? Language, Cognition and Neuroscience, 36(4), 440-461. https://doi.org/10.1080/23273798.2020.1859568 |

| [31] | Huang, Y., & Snedeker, J. (2020). Evidence from the visual world paradigm raises questions about unaccusativity and growth curve analyses. Cognition, 200, 104251. https://doi.org/10.1016/j.cognition.2020.104251 |

| [32] | Huang, Y. T., & Snedeker, J. (2009). Semantic meaning and pragmatic interpretation in 5-year-olds: Evidence from real-time spoken language comprehension. Developmental Psychology, 45(6), 1723-1739. https://doi.org/10.1037/a0016704 |

| [33] | Huang, Y. T., & Snedeker, J. (2011). Logic and conversation revisited: Evidence for a division between semantic and pragmatic content in real-time language comprehension. Language and Cognitive Processes, 26(8), 1161-1172. https://doi.org/10.1080/01690965.2010.508641 |

| [34] | Huettig, F., & Guerra, E. (2019). Effects of speech rate, preview time of visual context, and participant instructions reveal strong limits on prediction in language processing. Brain Research, 1706, 196-208. https://doi.org/10.1016/j.brainres.2018.11.013 |

| [35] | Huettig, F., & McQueen, J. M. (2007). The tug of war between phonological, semantic and shape information in language-mediated visual search. Journal of Memory and Language, 57(4), 460-482. https://doi.org/10.1016/j.jml.2007.02.001 |

| [36] | Huettig, F., Olivers, C. N. L., & Hartsuiker, R. J. (2011a). Looking, language, and memory: Bridging research from the visual world and visual search paradigms. Acta Psychologica, 137(2), 138-150. https://doi.org/10.1016/j.actpsy.2010.07.013 |

| [37] | Huettig, F., Rommers, J., & Meyer, A. S. (2011b). Using the visual world paradigm to study language processing: A review and critical evaluation. Acta Psychologica, 137(2), 151-171. https://doi.org/10.1016/j.actpsy.2010.11.003 |

| [38] | Ito, A., & Knoeferle, P. (2022). Analysing data from the psycholinguistic visual-world paradigm: Comparison of different analysis methods. Behavior Research Methods. https://doi.org/10.3758/s13428-022-01969-3 |

| [39] | Ito, A., Pickering, M. J., & Corley, M. (2018). Investigating the time-course of phonological prediction in native and non-native speakers of English: A visual world eye-tracking study. Journal of Memory and Language, 98, 1-11. https://doi.org/10.1016/j.jml.2017.09.002 |

| [40] | Kaan, E., & Grüter, T. (2021). Prediction in second language processing and learning: Advances and directions. In E. Kaan & T. Grüter (Eds.), Prediction in second language processing and learning (pp. 1-24). John Benjamins. |

| [41] | Kaiser, E. (2016). Discourse-level processing. In P. Knoeferle, P. Pyykkönen-Klauck, & M. W. Crocker (Eds.), Visually situated language comprehension (pp. 151-184). John Benjamins Publishing. |

| [42] | Kamide, Y., Scheepers, C., & Altmann, G. T. M. (2003). Integration of syntactic and semantic information in predictive processing: Cross-linguistic evidence from German and English. Journal of Psycholinguistic Research, 32(1), 37-55. https://doi.org/10.1023/a:1021933015362 |

| [43] | Karimi, H., Brothers, T., & Ferreira, F. (2019). Phonological versus semantic prediction in focus and repair constructions: No evidence for differential predictions. Cognitive Psychology, 112, 25-47. https://doi.org/10.1016/j.cogpsych.2019.04.001 |

| [44] | Knoeferle, P., & Crocker, M. W. (2006). The coordinated interplay of scene, utterance, and world knowledge: Evidence from eye tracking. Cognitive Science, 30(3), 481-529. https://doi.org/10.1207/s15516709cog0000_65 |

| [45] | Knoeferle, P., & Crocker, M. W. (2007). The influence of recent scene events on spoken comprehension: Evidence from eye movements. Journal of Memory and Language, 57(4), 519-543. https://doi.org/10.1016/j.jml.2007.01.003 |

| [46] | Knoeferle, P., Crocker, M. W., Scheepers, C., & Pickering, M. J. (2005). The influence of the immediate visual context on incremental thematic role-assignment: Evidence from eye-movements in depicted events. Cognition, 95(1), 95-127. https://doi.org/10.1016/j.cognition.2004.03.002 |

| [47] | Koornneef, A. W., & van Berkum, J. J. A. (2006). On the use of verb-based implicit causality in sentence comprehension: Evidence from self-paced reading and eye tracking. Journal of Memory and Language, 54, 445-465. https://doi.org/10.1016/j.jml.2005.12.003 |

| [48] | Koring, L., Mak, P., & Reuland, E. (2012). The time course of argument reactivation revealed: Using the visual world paradigm. Cognition, 123(3), 361-379. https://doi.org/10.1016/j.cognition.2012.02.011 |

| [49] | Kukona, A. (2020). Lexical constraints on the prediction of form: Insights from the visual world paradigm. Journal of Experimental Psychology: Learning, Memory, and Cognition, 46(11), 2153-2162. https://doi.org/10.1037/xlm0000935 |

| [50] | Kuperberg, G. R., & Jaeger, T. F. (2016). What do we mean by prediction in language comprehension? Language, Cognition and Neuroscience, 31(1), 32-59. https://doi.org/10.1080/23273798.2015.1102299 |

| [51] | Levinson, S. C. (2000). Presumptive meanings: The theory of generalized conversational implicature. MIT Press. |

| [52] | Levy, R. (2008). Expectation-based syntactic comprehension. Cognition, 106(3), 1126-1177. https://doi.org/10.1016/j.cognition.2007.05.006 |

| [53] | Li, X., Li, X., & Qu, Q. (2022). Predicting phonology in language comprehension: Evidence from the visual world eye-tracking task in Mandarin Chinese. Journal of Experimental Psychology: Human Perception and Performance, 48(5), 531-547. https://doi.org/10.1037/xhp0000999 |

| [54] | Magnuson, J. S. (2019). Fixations in the visual world paradigm: Where, when, why. Journal of Cultural Cognitive Science, 3(2), 113-139. https://doi.org/10.1007/s41809-019-00035-3 |

| [55] | Magnuson, J. S., Tanenhaus, M. K., Aslin, R. N., & Dahan, D. (2003). The time course of spoken word learning and recognition: Studies with artificial lexicons. Journal of Experimental Psychology: General, 132(2), 202-227. https://doi.org/10.1037/0096-3445.132.2.202 |

| [56] | Mak, W. M., Tribushinina, E., Lomako, J., Gagarina, N., Abrosova, E., & Sanders, T. (2017). Connective processing by bilingual children and monolinguals with specific language impairment: Distinct profiles. Journal of Child Language, 44(2), 329-345. https://doi.org/10.1017/S0305000915000860 |

| [57] | Matin, E., Shao, K. C., & Boff, K. R. (1993). Saccadic overhead: Information-processing time with and without saccades. Perception & Psychophysics, 53(4), 372-380. https://doi.org/10.3758/BF03206780 |

| [58] | McMurray, B., Samelson, V. M., Lee, S. H., & Tomblin, J. B. (2010). Individual differences in online spoken word recognition: Implications for SLI. Cognitive Psychology, 60(1), 1-39. https://doi.org/10.1016/j.cogpsych.2009.06.003 |

| [59] | McRae, K., Spivey-Knowlton, M. J., & Tanenhaus, M. K. (1998). Modeling the influence of thematic fit (and other constraints) in on-line sentence comprehension. Journal of Memory and Language, 38(3), 283-312. https://doi.org/10.1006/jmla.1997.2543 |

| [60] | Millis, K. K., & Just, M. A. (1994). The influence of connectives on sentence comprehension. Journal of Memory and Language, 33(1), 128-147. https://doi.org/10.1006/jmla.1994.1007 |

| [61] | Mirman, D. (2014). Growth curve analysis and visualization using R. CRC Press. |

| [62] | Mirman, D., Dixon, J. A., & Magnuson, J. S. (2008). Statistical and computational models of the visual world paradigm: Growth curves and individual differences. Journal of Memory and Language, 59(4), 475-494. https://doi.org/10.1016/j.jml.2007.11.006 |

| [63] | Nieuwland, M. S., Politzer-Ahles, S., Heyselaar, E., Segaert, K., Darley, E., Kazanina, N., ... Huettig, F. (2018). Large-scale replication study reveals a limit on probabilistic prediction in language comprehension. eLife, 7, 1-24. https://doi.org/10.7554/eLife.33468 |

| [64] | Porretta, V., Kyröläinen, A.-J., van Rij, J., & Järvikivi, J. (2018). Visual world paradigm data: From preprocessing to nonlinear time-course analysis. In I. Czarnowski, R. Howlett, & L. Jain (Eds.), Intelligent decision technologies 2017. Smart innovation, systems and technologies (Vol. 73, pp. 268-277). Springer. |

| [65] | Pickering, M. J., & Gambi, C. (2018). Predicting while comprehending language: A theory and review. Psychological Bulletin, 144(10), 1002-1044. https://doi.org/10.1037/bul0000158 |

| [66] | Pyykkönen, P., & Järvikivi, J. (2010). Activation and persistence of implicit causality information in spoken language comprehension. Experimental Psychology, 57(1), 5-16. https://doi.org/10.1027/1618-3169/a000002 |

| [67] | Pyykkönen-Klauck, P., & Crocker, M. W. (2016). Attention and eye movement metrics in visual world eye tracking. In P. Knoeferle, P. Pyykkönen-Klauck, & M. W. Crocker (Eds.), Visually situated language comprehension (pp. 67-82). John Benjamins Publishing. |

| [68] | Rayner, K. (1978). Eye movements in reading and information processing. Psychological Bulletin, 85(3), 618-660. https://doi.org/10.1037/0033-2909.85.3.618 |

| [69] | Runner, J. T., Sussman, R. S., & Tanenhaus, M. K. (2003). Assignment of reference to reflexives and pronouns in picture noun phrases: Evidence from eye movements. Cognition, 89(1), B1-B13. https://doi.org/10.1016/S0010-0277(03)00065-9 |

| [70] | Salverda, A. P., Brown, M., & Tanenhaus, M. K. (2011). A goal-based perspective on eye movements in visual world studies. Acta Psychologica, 137(2), 172-180. https://doi.org/10.1016/j.actpsy.2010.09.010 |

| [71] | Salverda, A. P., & Tanenhaus, M. K. (2017). The visual world paradigm. In A. M. B. de Groot & P. Hagoort (Eds.), Research methods in psycholinguistics and the neurobiology of language: A practical guide (pp. 89-110). Wiley-Blackwell. |

| [72] | Saryazdi, R., & Chambers, C. G. (2021). Real-time communicative perspective taking in younger and older adults. Journal of Experimental Psychology: Learning, Memory, and Cognition, 47(3), 439-454. https://doi.org/10.1037/xlm0000890 |

| [73] | Saslow, M. G. (1967). Latency of saccadic eye movement. Journal of the Optical Society of America, 57(8), 1030-1033. https://doi.org/10.1364/JOSA.57.001030 |

| [74] | Seedorff, M., Oleson, J., & McMurray, B. (2018). Detecting when timeseries differ: Using the bootstrapped differences of timeseries (BDOTS) to analyze visual world paradigm data (and more). Journal of Memory and Language, 102, 55-67. https://doi.org/10.1016/j.jml.2018.05.004 |

| [75] | Snedeker, J., & Trueswell, J. C. (2004). The developing constraints on parsing decisions: The role of lexical-biases and referential scenes in child and adult sentence processing. Cognitive Psychology, 49(3), 238-299. https://doi.org/10.1016/j.cogpsych.2004.03.001 |

| [76] | Stewart, A. J., Pickering, M. J., & Sanford, A. J. (2000). The time course of the influence of implicit causality information: Focusing versus integration accounts. Journal of Memory and Language, 42(3), 423-443. https://doi.org/10.1006/jmla.1999.2691 |

| [77] | Stone, K., Lago, S., & Schad, D. J. (2021). Divergence point analyses of visual world data: Applications to bilingual research. Bilingualism: Language and Cognition, 24(5), 833-841. https://doi.org/10.1017/S1366728920000607 |

| [78] | Tanenhaus, M. K., Magnuson, J. S., Dahan, D., & Chambers, C. (2000). Eye movements and lexical access in spoken-language comprehension: Evaluating a linking hypothesis between fixations and linguistic processing. Journal of Psycholinguistic Research, 29(6), 557-580. https://doi.org/10.1023/a:1026464108329 |

| [79] | Tanenhaus, M. K., Spivey-Knowlton, M. J., Eberhard, K. M., & Sedivy, J. C. (1995). Integration of visual and linguistic information in spoken language comprehension. Science, 268(5217), 1632-1634. https://doi.org/10.1126/science.7777863 |

| [80] | Traxler, M. J., Bybee, M. D., & Pickering, M. J. (1997). Influence of connectives on language comprehension: Eye tracking evidence for incremental interpretation. The Quarterly Journal of Experimental Psychology, 50A(3), 481-497. https://doi.org/10.1080/027249897391982 |

| [81] | Trueswell, J. C., Tanenhaus, M. K., & Garnsey, S. M. (1994). Semantic influences on parsing: Use of thematic role information in syntactic ambiguity resolution. Journal of Memory and Language, 33(3), 285-318. https://doi.org/10.1006/jmla.1994.1014 |

| [82] | Weber, A., & Cutler, A. (2004). Lexical competition in non-native spoken-word recognition. Journal of Memory and Language, 50(1), 1-25. https://doi.org/10.1016/S0749-596X(03)00105-0 |

| [83] | Wei, Y., Mak, W. M., Evers-Vermeul, J., & Sanders, T. J. M. (2019). Causal connectives as indicators of source information: Evidence from the visual world paradigm. Acta Psychologica, 198, 102866. https://doi.org/10.1016/j.actpsy.2019.102866 |
