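1 Introduction

Long-term musical training induces structural and functional changes in cortical (Chen, Penhune, & Zatorre, 2008; James et al., 2014; Steele, Bailey, Zatorre, & Penhune, 2013) and subcortical (brainstem) regions (Bidelman, Weiss, Moreno, & Alain, 2014; Musacchia, Strait, & Kraus, 2008; Wong, Skoe, Russo, Dees, & Kraus, 2007). These changes strengthen abilities ranging from low-level auditory perception to higher-level processes such as attention and memory (Moreno & Bidelman, 2014), which in turn facilitates language processing. It remains unknown, however, whether musically trained individuals also have an advantage in processing linguistic prosody, particularly in regulated verse (jintishi), which is regarded as a model of Chinese prosody. The present study used seven-character quatrains (qiyan jueju) to address this question.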

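Prosody in language comprises suprasegmental features such as speech rate, intonation, lexical tone (specific to tone languages), and stress (Hausen, Torppa, Salmela, Vainio, & Särkämö, 2013), and it is carried by acoustic parameters such as intensity, duration, and fundamental frequency. In spoken sentence comprehension, prosodic cues help listeners build syntactic structure and provide semantic information, making them indispensable to speech acquisition and perception (Dahan, 2015; Julia et al., 2013; Kotz et al., 2003; Steinhauer, Alter, & Friederici, 1999). Music, like language, is a major source of auditory information for humans and can convey emotion, meaning, and attitude through features such as pitch height and tempo (Dilley, Mattys, & Vinke, 2010; Juslin & Laukka, 2003; Patel, 2003; 蒋存梅, 2016). Composers' melodies are influenced by the prosody of their native speech (Patel, Iversen, & Rosenberg, 2006), and individuals with congenital amusia (especially those with pitch-processing deficits) have more difficulty perceiving and identifying speech prosody (Liu, Patel, Fourcin, & Stewart, 2010; Nan, Sun, & Peretz, 2010). Together, these findings indicate that music and language are closely linked in the prosodic domain (Hausen et al., 2013; Patel, Peretz, Tramo, & Labreque, 1998; Patel & Daniele, 2003).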

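In addition, musicians show an advantage in processing prosody-related acoustic cues (南云, 2017). For example, their brainstem encoding of pitch and lexical tone changes is more precise (Bidelman, Gandour, & Krishnan, 2011; Lee & Hung, 2008), and their cortical electrical responses are more sensitive to subtle changes in pitch and in syllable-level temporal structure such as duration and voice onset time (Kühnis, Elmer, Meyer, & Jäncke, 2013; Partanen, Vainio, Kujala, & Huotilainen, 2011; Wu et al., 2015). Longitudinal studies suggest that this transfer from musical ability to language is more likely to result from training than from genetic predisposition (Chobert, François, Velay, & Besson, 2012; Nan et al., 2018). Long-term musical training not only heightens sensitivity to low-level acoustic information but also benefits higher-level cognitive processes, including attention (Wang, Ossher, & Reuter-Lorenz, 2015), verbal memory (Franklin et al., 2008), executive function (陈杰, 刘雷, 王蓉, 沈海洲, 2017), speech segmentation (François, Chobert, Besson, & Schön, 2012), and language production skills (Milovanov, Huotilainen, Välimäki, Esquef, & Tervaniemi, 2008). Building on such findings, the OPERA hypothesis explains why musical training may facilitate language processing through shared neural resources: when music places higher demands than speech on the sensory and cognitive mechanisms shared by the two domains, musical ability can enhance speech processing. These higher demands, combined with the emotional reward, repetition, and attention associated with musical activity, engage neural plasticity and change the neural structures and functions that support speech processing, thereby facilitating language processing (Patel, 2014). Long-term, systematic musical training may therefore increase sensitivity to speech prosody.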

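Poetry acts as an intermediary (interlude) between music and language, and its metrical elements, such as rhyme and the level/oblique (ping/ze) tonal pattern realized through lexical tone, are also prosodic information. Poetic meter usually reflects the characteristics of the language it is written in; it is a near-perfect set of forms and rules settled through long exploration, and in that sense a crystallization of linguistic prosody. Chinese regulated verse has strict and mature metrical requirements, and the prosodic beauty it conveys has been described as a pinnacle in the history of world poetry (吴洁敏, 朱宏达, 2001). A seven-character quatrain consists of four lines in two couplets. Within each line, level and oblique tones must alternate; within each couplet, the tonal patterns of the two lines must contrast; and each couplet forms one cycle. In addition, the final characters of the first, second, and fourth lines usually share the same vowel (yunmu, the syllable final) and carry a level tone; that is, they rhyme. The regularity and wholeness of these metrical elements create a harmonious prosodic flow and strengthen the musicality, expressiveness, and resonance of the verse. Changes that violate the metrical requirements at particular positions (for example, changing the tone or the vowel of a rhyming character) therefore disrupt the prosodic beauty of the poem. Does musical training affect how listeners perceive and process a violation of a poem's overall prosody caused by a tone or vowel change? This is the first question addressed here.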

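The end of a sentence is where syntactic, semantic, and prosodic information is presented in full. Processing load is greater there than within the sentence, and higher-level integration takes place (Just & Carpenter, 1980; Kuperberg, Kreher, Goff, McGuire, & David, 2006). Behavioral and eye-movement studies have found that, compared with other positions, the sentence-final position shows longer reading times (de Vincenzi et al., 2003; Just, Carpenter, & Woolley, 1982) and more regressions and saccades (Camblin, Gordon, & Swaab, 2007; Rayner, Kambe, & Duffy, 2000). An ERP study of prosodic boundary processing in quatrains found that, unlike the closure positive shift (CPS) elicited at line-internal prosodic boundaries, the line-final boundary elicited a larger positive wave (P3), possibly reflecting syntactic closure and the end of a complete linguistic unit (李卫君, 杨玉芳, 2010). Notably, a series of studies placed syntactic or semantic violations within the sentence yet found that, at the sentence-final position, violation conditions elicited a larger and longer-lasting N400 effect with a centro-posterior distribution than appropriate conditions (Bohan, Leuthold, Hijikata, & Sanford, 2012; Ditman, Holcomb, & Kuperberg, 2007; Hagoort, 2003; 金花 et al., 2009; Molinaro, Vespignani, & Job, 2008; Osterhout & Holcomb, 1993; Osterhout & Mobley, 1995; Osterhout & Nicol, 1999). The larger N400 may reflect greater difficulty in completing syntactic and semantic integration at the end of the sentence; in other words, participants spent more cognitive resources integrating the earlier violation into the overall context. When a prosodic violation occurs within a sentence, is a similar integration process, with a similar EEG effect, detectable at the sentence-final position? This is the second question addressed here.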

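In summary, unlike sentence-internal positions, the sentence-final position involves integration over the whole sentence. Previous studies have mainly examined sentence-final integration of syntactic and semantic information; none has examined the sentence-final integration of prosodic information. Studies that used violation paradigms to investigate speech prosody have focused on the immediate processing of tone and vowel violations. Most combined behavioral and EEG measures with different materials (e.g., single-character pairs, idioms, semantically high-constraint sentences, verse) and different tasks (e.g., lexical judgment, semantic judgment, lexical decision) to trace the time course of tone and vowel processing during word recognition and constrained semantic integration (Hu, Gao, Ma, & Yao, 2012; Huang, Liu, Yang, Zhao, & Zhou, 2018; Li, Wang, & Yang, 2014; Schirmer, Tang, Penney, Gunter, & Chen, 2005). Although the findings are not fully consistent, most studies report that vowel information is processed faster than tone information and that, even for native speakers of tone languages, vowels exert a stronger influence on word recognition and semantic constraint (Tong, Francis, & Gandour, 2008; Hu et al., 2012; Huang et al., 2018; Li et al., 2014). Whether this immediate-processing advantage for vowels also appears during sentence-final integration is examined in the present study.

Long-term intensive musical training also changes the physiological basis and function of the auditory system. Previous studies manipulated sentence prosody by changing the pitch or syllable duration of sentence-final words and tracked the time course of prosodic processing in musicians, finding that musicians respond more sensitively to prosodic changes and process them more efficiently (Magne, Schön, & Besson, 2006; Marques, Moreno, Luís Castro, & Besson, 2007; Marie, Magne, & Besson, 2011; Schön, Besson, & Magne, 2004; Zioga, Luft, & Bhattacharya, 2016). It is still unclear, however, whether musical training also facilitates the integration of prosodic information at the end of a poem when the violation occurs inside the poem. The present study therefore used seven-character quatrains and a rhyme judgment task to examine how a musician group and a control group integrate prosodic information at the end of a poem. To avoid confounding poem-final integration with effects evoked by the manipulated stimulus itself, rhyme violations were created by changing the tone and vowel of the final character of the first couplet, and the EEG effects of prosodic integration were measured at the end of the quatrain. We predicted that both tone and vowel violations inside the poem would make poem-final integration harder: relative to the appropriate condition, they should elicit an N400 effect reflecting integration difficulty. In addition, because long-term training makes musicians more sensitive to prosodic information, the musician group was expected to show earlier and larger EEG effects than the control group.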

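2 Methods

2.1 Participants

Twenty-five musically trained and 25 musically untrained native Mandarin speakers were recruited from universities (9 men and 16 women in each group; age range 18-26 years in both groups; musician group: 20.56 ± 1.90 years; control group: 20.84 ± 1.85 years). All participants were right-handed, had no history of psychiatric illness, and had normal hearing and normal or corrected-to-normal vision. All members of the musician group self-reported that they began musical training at or before age 7, had at least ten years of professional musical training, and had recently practiced for at least one hour per day on average. The two groups were matched on age, sex, and education (see Table 1). Before the experiment, each participant completed the short form of the Raven Advanced Progressive Matrices (Arthur & Day, 1994). Fluid intelligence did not differ significantly between the musician group (M = 7.52, SD = 1.90) and the control group (M = 8.44, SD = 1.87), t(48) = 1.73, p = 0.091 (two-tailed). The study was approved by the ethics committee of Liaoning Normal University. Participants gave written informed consent before the experiment and were paid afterward.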
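As a quick arithmetic check, the group comparison of Raven scores can be reproduced from the reported summary statistics alone. The sketch below assumes a standard pooled-variance (Student) independent-samples t-test; the source does not state which variant was used, and the function name `pooled_t` is illustrative only.

```python
import math


def pooled_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Independent-samples t from summary statistics (equal variances assumed)."""
    df = n_a + n_b - 2
    # Pooled variance weights each group's variance by its degrees of freedom.
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / df
    se_diff = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean_a - mean_b) / se_diff, df


# Raven short-form scores reported in the text: controls vs. musicians.
t_value, df = pooled_t(8.44, 1.87, 25, 7.52, 1.90, 25)
print(f"t({df}) = {t_value:.2f}")
```

Under these assumptions the result agrees with the reported t(48) = 1.73.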

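Table 1. Participant demographics

| No. | Sex (M) | Sex (N) | Age, years (M) | Age, years (N) | Education (M) | Education (N) | Training onset age, years (M) | Training onset age, years (N) | Training duration, years (M) | Training duration, years (N) | Instrument(s) (M) | Instrument(s) (N) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Male | Male | 18 | 18 | Undergraduate | Undergraduate | 7 | None | 11 | 0 | Bamboo flute | None |
| 2 | Male | Male | 18 | 19 | Undergraduate | Undergraduate | 7 | None | 11 | 0 | Jazz drums, snare drum | None |
| 3 | Male | Male | 19 | 19 | Undergraduate | Undergraduate | 7 | None | 12 | 0 | Piano | None |
| 4 | Male | Male | 19 | 20 | Undergraduate | Undergraduate | 7 | None | 11 | 0 | Piano | None |
| 5 | Male | Male | 19 | 20 | Undergraduate | Undergraduate | 7 | None | 10 | 0 | Erhu, double-manual electronic organ | None |
| 6 | Male | Male | 21 | 20 | Undergraduate | Undergraduate | 7 | None | 10 | 0 | Bamboo flute, hulusi | None |
| 7 | Male | Male | 21 | 21 | Undergraduate | Undergraduate | 6 | None | 10 | 0 | Erhu, piano | None |
| 8 | Male | Male | 22 | 22 | Undergraduate | Undergraduate | 7 | None | 15 | 0 | Erhu | None |
| 9 | Male | Male | 23 | 23 | Undergraduate | Undergraduate | 7 | None | 15 | 0 | Piano, guitar | None |
| 10 | Female | Female | 19 | 19 | Undergraduate | Undergraduate | 7 | None | 11 | 0 | Yangqin | None |
| 11 | Female | Female | 19 | 19 | Undergraduate | Undergraduate | 6 | None | 12 | 0 | Yangqin | None |
| 12 | Female | Female | 19 | 19 | Undergraduate | Undergraduate | 6 | None | 13 | 0 | Yangqin | None |
| 13 | Female | Female | 19 | 20 | Undergraduate | Undergraduate | 6 | None | 13 | 0 | Pipa | None |
| 14 | Female | Female | 19 | 20 | Undergraduate | Undergraduate | 5 | None | 10 | 0 | Pipa | None |
| 15 | Female | Female | 20 | 20 | Undergraduate | Undergraduate | 7 | None | 13 | 0 | Piano, guzheng | None |
| 16 | Female | Female | 20 | 20 | Undergraduate | Undergraduate | 7 | None | 13 | 0 | Piano, guitar | None |
| 17 | Female | Female | 20 | 20 | Undergraduate | Undergraduate | 6 | None | 14 | 0 | Piano | None |
| 18 | Female | Female | 21 | 21 | Undergraduate | Undergraduate | 7 | None | 14 | 0 | Piano | None |
| 19 | Female | Female | 21 | 21 | Undergraduate | Undergraduate | 5 | None | 16 | 0 | Piano, cello | None |
| 20 | Female | Female | 21 | 22 | Undergraduate | Undergraduate | 4 | None | 17 | 0 | Piano, guzheng | None |
| 21 | Female | Female | 21 | 22 | Undergraduate | Undergraduate | 4 | None | 17 | 0 | Piano, trumpet, guzheng | None |
| 22 | Female | Female | 23 | 23 | Undergraduate | Undergraduate | 7 | None | 16 | 0 | Piano, guzheng, drum kit | None |
| 23 | Female | Female | 23 | 23 | Undergraduate | Undergraduate | 7 | None | 16 | 0 | Piano, double-manual electronic organ, pipa | None |
| 24 | Female | Female | 23 | 24 | Graduate | Graduate | 5 | None | 18 | 0 | Piano, double-manual electronic organ, pipa | None |
| 25 | Female | Female | 26 | 26 | Graduate | Graduate | 7 | None | 12 | 0 | Piano, hulusi, flute | None |

Note: M = musician group; N = control group (nonmusicians).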

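2.2 Materials

The experimental materials were 160 low-familiarity seven-character quatrains used in a previous study (Li et al., 2014). Because few low-familiarity poems have fully identical vowels in their rhyming characters, the set included, in addition to poems whose first, second, and fourth lines end in characters with the same main vowel, three other rhyme types: eng/ing (e.g., "生, 轻, 行"), en/in (e.g., "侵, 阴, 深"), and ou/iu (e.g., "流, 幽, 鸥"), which made up 20.6% of the materials. Low familiarity was quantified as follows. First, 300 seven-character quatrains unfamiliar to the experimenters were selected from online sources and books. In a pretest, 16 university students read the quatrains one at a time, judged whether each was familiar, and rated its comprehensibility on a 7-point scale. The 240 quatrains that were judged unfamiliar and rated low in meaningfulness (5 points or below, a threshold chosen to balance minimal semantic interference against the number of trials needed for ERP analysis) were used in the formal experiment.

Four experimental conditions were created by changing the tone or the vowel of the final character of the first couplet: vowel and tone both appropriate (V+T+); tone appropriate, vowel violated (V-T+); tone violated, vowel appropriate (V+T-); and tone and vowel both violated (V-T-). In the tone violation conditions, the original Tone 1 or Tone 2 was always replaced by Tone 4. In the vowel violation conditions, the vowel was changed so that it differed from the vowel of the final character of the first line. The vowel manipulation did not distinguish medial, nucleus, and coda; the vowel was treated as a whole and changed, using violation of the rhyme rule as the criterion, so that it was perceptually clearly different from a rhyming vowel (see Figure 1). In the double violation condition, the tone was changed to Tone 4 and the vowel was changed as described above. This yielded 640 experimental poems in total. To prevent participants from forming expectations about a particular position, the remaining 80 quatrains served as fillers: 40 with appropriate tone and vowel, and 40 in which the final character of the second couplet was changed (13 tone appropriate, vowel violated; 13 tone violated, vowel appropriate; 14 both violated). The experimental materials were assigned to four lists with a Latin square so that the different conditions of the same poem appeared in different lists; after the fillers were added, the poems in each list were pseudorandomized.

All materials were read at a normal rate by a male speaker and digitized at a sampling rate of 22 kHz. For quatrains in which both tone and vowel were appropriate, the speaker read the whole quatrain. For quatrains with a violation on either dimension, the speaker read only the first couplet, and the final character of that couplet was then spliced into the fully appropriate recording. As a result, the conditions of a given quatrain differed only in the final character of the first couplet and were otherwise identical. The total duration of each appropriate quatrain was between 14 s and 17 s.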
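The Latin-square assignment described above can be sketched as a simple rotation. This is an illustration under stated assumptions rather than the authors' actual procedure: the poem indices, the `build_lists` helper, and the particular rotation order are hypothetical, and filler items and pseudorandom ordering are left out.

```python
from collections import Counter

# Condition labels follow the text: V = vowel, T = tone, + appropriate, - violated.
CONDITIONS = ["V+T+", "V-T+", "V+T-", "V-T-"]


def build_lists(n_poems=160, conditions=CONDITIONS):
    """Rotate conditions across lists so each poem appears once per list,
    in a different condition in every list."""
    n_lists = len(conditions)
    lists = [[] for _ in range(n_lists)]
    for poem in range(n_poems):
        for list_idx in range(n_lists):
            condition = conditions[(poem + list_idx) % n_lists]
            lists[list_idx].append((poem, condition))
    return lists


lists = build_lists()
for idx, items in enumerate(lists, start=1):
    # Each list should hold 160 poems, 40 per condition.
    print(idx, dict(Counter(cond for _, cond in items)))
```

With 160 poems and four conditions, each list contains 40 poems per condition, and the four lists together cover all 640 poem-by-condition versions.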

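Figure 1. Example materials for each condition and the trial procedure. V+T+: vowel appropriate, tone appropriate; V+T-: vowel appropriate, tone violated; V-T+: vowel violated, tone appropriate; V-T-: vowel violated, tone violated.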

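2.3 Design and procedure

A 2 (group: musician, control) × 2 (tone: appropriate, violated) × 2 (vowel: appropriate, violated) mixed design was used, with group as a between-subjects factor and tone and vowel as within-subjects factors.

The experiment took place in a quiet, comfortable, softly lit room. Participants sat in front of an LCD monitor (23 inches, 60 Hz refresh rate) and wore Panasonic RP-HS47 clip-on headphones. There were 6 blocks of 40 trials each, and materials from the same condition were presented on no more than three consecutive trials. Each participant heard 240 poems (160 experimental, 80 filler). Each block lasted about 10 minutes, and participants rested between blocks as needed.

Before practice, participants were told the rhyme rule explicitly. The practice trials included the three rhyme types eng/ing, en/in, and ou/iu, and participants were told that, for historical reasons, such poems still count as rhyming. The formal experiment began once participants had mastered the task. On each trial, a fixation cross ("+") and a warning tone were presented together for 300 ms. The cross then remained at the center of the screen to reduce eye movements while a seven-character quatrain was played. After the audio ended, a probe screen appeared on one third of the trials, and participants judged as quickly and accurately as possible whether the poem rhymed, pressing "F" for rhyming and "J" for not rhyming; the key assignment was counterbalanced across participants. The inter-trial interval varied randomly between 1000 and 1200 ms (see Figure 1).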

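2.4 Data acquisition and analysis

EEG was recorded with an ANT system (ANT Neuro) from a 64-channel cap arranged according to the extended international 10-20 system. The signal was sampled at 500 Hz with CPz as the online reference. Electrodes M1 and M2 were placed on the left and right mastoids, respectively. Electrode impedance was kept below 5 kΩ, and the online band-pass filter was 0.01-100 Hz. Offline, the data were re-referenced by subtracting the average of the two mastoids from each channel. Data were processed in Brain Vision Analyzer 2.0 and filtered (high-pass 0.01 Hz, low-pass 30 Hz). Epochs were time-locked to the onset of the final character of the second couplet in each poem, extending from 200 ms before to 1000 ms after this critical point, and were baseline corrected. After trials with eye-movement artifacts and other artifacts exceeding ±80 μV were excluded, more than 30 valid trials remained in every condition.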
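The epoching steps above (mastoid re-referencing, a -200 to 1000 ms epoch at 500 Hz, baseline correction, and ±80 μV rejection) can be illustrated with a minimal pure-Python sketch. The authors used Brain Vision Analyzer 2.0; the function names below are hypothetical, the pre-onset interval is assumed to be the baseline, and the signal is synthetic.

```python
SAMPLING_RATE_HZ = 500
BASELINE_MS = 200   # pre-onset interval, assumed here to serve as the baseline
POST_MS = 1000      # post-onset interval
REJECT_UV = 80.0    # absolute amplitude threshold for artifact rejection


def ms_to_samples(ms, rate_hz=SAMPLING_RATE_HZ):
    return int(round(ms * rate_hz / 1000))


def rereference(channel, m1, m2):
    """Subtract the mean of the two mastoid channels, sample by sample."""
    return [x - (a + b) / 2 for x, a, b in zip(channel, m1, m2)]


def extract_epoch(signal, onset_sample):
    """Cut -200..1000 ms around the onset and subtract the pre-onset mean."""
    pre = ms_to_samples(BASELINE_MS)
    post = ms_to_samples(POST_MS)
    segment = signal[onset_sample - pre:onset_sample + post]
    baseline = sum(segment[:pre]) / pre
    return [x - baseline for x in segment]


def is_clean(epoch, threshold_uv=REJECT_UV):
    """Keep the epoch only if no sample exceeds the threshold in magnitude."""
    return all(abs(x) <= threshold_uv for x in epoch)


# Synthetic channel: a 5 uV offset, then a 10 uV step at the onset sample.
signal = [5.0] * 400 + [15.0] * 600
epoch = extract_epoch(signal, onset_sample=400)
print(len(epoch), epoch[0], epoch[-1], is_clean(epoch))

# A single 200 uV spike inside the epoch causes the trial to be rejected.
noisy = list(signal)
noisy[500] = 200.0
print(is_clean(extract_epoch(noisy, onset_sample=400)))
```

At 500 Hz the epoch spans 600 samples (100 before and 500 after onset). Real pipelines would also handle ocular artifacts, which this sketch does not attempt.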

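Based on the distribution of the elicited effects and on previous related studies (Hagoort, 2003; 李卫君, 刘梦, 张政华, 邓娜丽, 邢钰珊, 2018), repeated-measures ANOVAs with the factors group (musician, control) × vowel (appropriate, violated) × tone (appropriate, violated) were conducted separately for the midline and the lateral sites in two time windows, 100-300 ms and 300-750 ms. The midline analysis included three regions of interest: frontal (Fz, FCz), central (Cz, CPz), and parietal (Pz, POz). The lateral analysis added the factor hemisphere (left, right) and used six regions of interest: left anterior (F1, F3, F5, FC1, FC3, FC5), right anterior (F2, F4, F6, FC2, FC4, FC6), left central (C1, C3, C5, CP1, CP3, CP5), right central (C2, C4, C6, CP2, CP4, CP6), left posterior (P1, P3, P5, PO3, PO5, O1), and right posterior (P2, P4, P6, PO4, PO6, O2). When the sphericity assumption was violated, p values were corrected with the Greenhouse-Geisser method (Greenhouse & Geisser, 1959).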
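The dependent measure in these ANOVAs is a mean amplitude per region of interest and time window. A minimal sketch of that computation follows, assuming epochs that start 200 ms before onset and are sampled at 500 Hz as described in the acquisition section. The helper names are hypothetical, only the midline regions are listed, and treating the window end as exclusive is an assumption.

```python
# Midline regions of interest as listed in the text.
MIDLINE_ROIS = {
    "frontal": ["Fz", "FCz"],
    "central": ["Cz", "CPz"],
    "parietal": ["Pz", "POz"],
}


def window_mean(epoch, start_ms, end_ms, baseline_ms=200, rate_hz=500):
    """Mean amplitude in [start_ms, end_ms) relative to stimulus onset."""
    first = (baseline_ms + start_ms) * rate_hz // 1000
    last = (baseline_ms + end_ms) * rate_hz // 1000
    window = epoch[first:last]
    return sum(window) / len(window)


def roi_mean(epochs, channels, start_ms, end_ms):
    """Average the window means of all channels in one region of interest."""
    per_channel = [window_mean(epochs[name], start_ms, end_ms) for name in channels]
    return sum(per_channel) / len(per_channel)


# Synthetic averaged epochs (600 samples): flat baseline, then a constant level.
epochs = {
    "Fz": [0.0] * 100 + [1.0] * 500,
    "FCz": [0.0] * 100 + [3.0] * 500,
}
early = roi_mean(epochs, MIDLINE_ROIS["frontal"], 100, 300)
late = roi_mean(epochs, MIDLINE_ROIS["frontal"], 300, 750)
print(early, late)
```

The lateral regions could be handled the same way by adding their electrode lists to the dictionary.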

3 Results

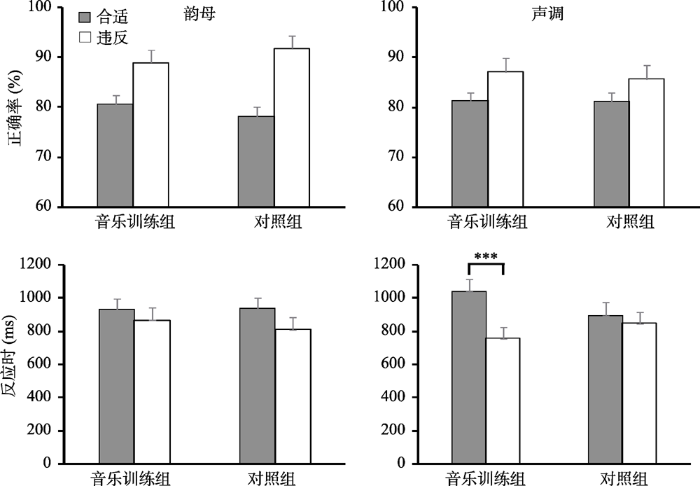

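3.1 Behavioral results

An independent-samples t-test showed no significant difference in rhyme judgment accuracy between the musician group (M = 83.24%, SD = 6.64%) and the control group (M = 82.64%, SD = 9.15%), t(48) = 0.27, p = 0.791. A 2 (group: musician, control) × 2 (vowel: appropriate, violated) × 2 (tone: appropriate, violated) repeated-measures ANOVA showed that accuracy was higher in the vowel violation conditions (M = 90.70%, SE = 1.90%) than in the vowel appropriate conditions (M = 79.90%, SE = 1.60%), F(1, 48) = 26.09, p < 0.001, ηp2 = 0.35. Accuracy was also higher in the tone violation conditions (M = 87.10%, SE = 2.00%) than in the tone appropriate conditions (M = 83.60%, SE = 1.20%), although this difference was only marginally significant, F(1, 48) = 3.93, p = 0.053, ηp2 = 0.08 (see Figure 2).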
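The partial eta squared values reported alongside each F test follow directly from the F value and its degrees of freedom, using the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The short sketch below applies it to values from this section; the function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Vowel and tone main effects on accuracy, as reported in the text.
print(round(partial_eta_squared(26.09, 1, 48), 2))
print(round(partial_eta_squared(3.93, 1, 48), 2))
```

The same identity applies to the two-degree-of-freedom region effects in the ERP analyses, for example F(2, 96) = 9.96.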

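Figure 2

A 2 (group: musician, control) × 2 (vowel: appropriate, violated) × 2 (tone: appropriate, violated) repeated-measures ANOVA on reaction times showed that responses were faster in the vowel violation conditions (M = 838.79 ms, SE = 51.66 ms) than in the vowel appropriate conditions (M = 933.44 ms, SE = 45.06 ms), F(1, 48) = 5.27, p = 0.026, ηp2 = 0.10. Responses were also faster in the tone violation conditions (M = 803.91 ms, SE = 46.75 ms) than in the tone appropriate conditions (M = 968.32 ms, SE = 53.28 ms), F(1, 48) = 11.50, p = 0.001, ηp2 = 0.19. The group × tone interaction was significant, F(1, 48) = 5.85, p = 0.019, ηp2 = 0.11. Simple-effects analysis showed that only the musician group responded significantly faster in the tone violation conditions than in the tone appropriate conditions, F(1, 48) = 16.88, p < 0.001, ηp2 = 0.26 (see Figure 2).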

3.2 ERP results

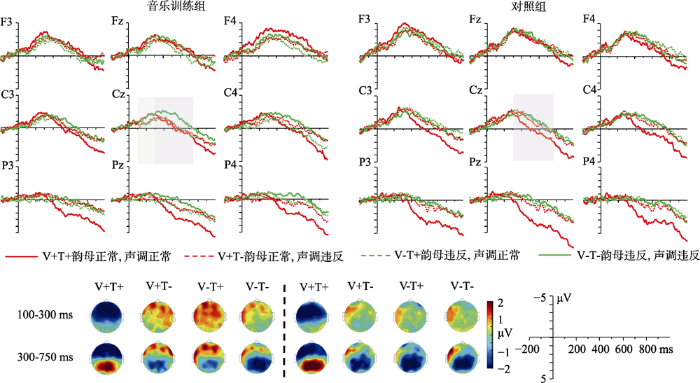

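3.2.1 100-300 ms time window

At midline sites, the group × vowel × tone interaction was significant, F(1, 48) = 7.59, p = 0.008, ηp2 = 0.14. Simple-effects analysis showed that, in the musician group, when the tone was appropriate, vowel violation elicited a larger positivity than vowel appropriate, F(1, 48) = 9.84, p = 0.003, ηp2 = 0.17; when the vowel was appropriate, tone violation elicited a marginally larger positivity than tone appropriate, F(1, 48) = 3.80, p = 0.057, ηp2 = 0.07; and when the vowel was violated, tone violation elicited a smaller positivity than tone appropriate, F(1, 48) = 10.68, p = 0.002, ηp2 = 0.18. The control group showed no reliable positivity in any of these comparisons (ps > 0.1).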

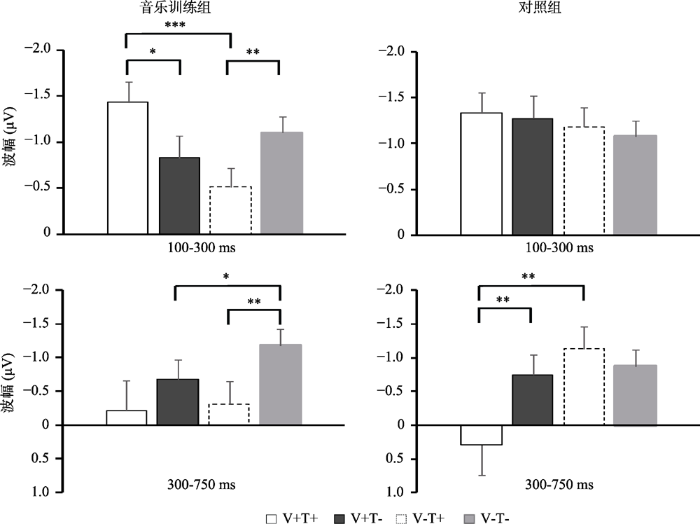

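At lateral sites, vowel violation (M = -0.97 μV, SE = 0.11 μV) elicited a larger positivity than vowel appropriate (M = -1.22 μV, SE = 0.15 μV), F(1, 48) = 4.04, p = 0.05, ηp2 = 0.08. The group × vowel × tone interaction was significant, F(1, 48) = 7.54, p = 0.008, ηp2 = 0.14. Simple-effects analysis showed that, in the musician group, when the tone was appropriate, vowel violation elicited a larger positivity than vowel appropriate, F(1, 48) = 15.41, p < 0.001, ηp2 = 0.24; when the vowel was appropriate, tone violation elicited a larger positivity than tone appropriate, F(1, 48) = 7.27, p = 0.010, ηp2 = 0.13; and when the vowel was violated, tone violation elicited a smaller positivity than tone appropriate, F(1, 48) = 7.37, p = 0.009, ηp2 = 0.13. The control group showed no reliable positivity in any of these comparisons (ps > 0.1). The group × vowel × hemisphere × region interaction was significant, F(2, 96) = 3.91, p = 0.033, ηp2 = 0.08. Simple-effects analysis showed that, over the left central region, vowel violation elicited a reliable positivity relative to vowel appropriate in the musician group, F(1, 48) = 4.69, p = 0.035, ηp2 = 0.09, whereas the two conditions did not differ in the control group (F < 1) (see Figures 3 and 4).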

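Figure 3. Grand-average waveforms (top) and topographic maps (bottom) for the 25 musicians (left) and the 25 controls (right) during processing of rhyme information in seven-character quatrains. ERPs are time-locked to the onset of the final character of the second couplet. The topographic maps show the fully appropriate condition and the difference waves between each violation condition and the fully appropriate condition.

Figure 4. Mean amplitudes at lateral sites in the 100-300 ms (top) and 300-750 ms (bottom) windows for the 25 musicians (left) and the 25 controls (right) during processing of rhyme information in seven-character quatrains. V+T+: vowel appropriate, tone appropriate; V+T-: vowel appropriate, tone violated; V-T+: vowel violated, tone appropriate; V-T-: vowel violated, tone violated.

In sum, the results in this time window indicate that only the musician group analyzed and integrated the consistency of the tone and vowel information from inside the quatrain at the end of the quatrain.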

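3.2.2 300-750 ms time window

At midline sites, the vowel × region interaction was significant, F(2, 96) = 9.96, p = 0.001, ηp2 = 0.17. Simple-effects analysis showed that vowel violation elicited a larger negativity than vowel appropriate over central [F(1, 48) = 16.45, p < 0.001, ηp2 = 0.26] and posterior [F(1, 48) = 24.87, p < 0.001, ηp2 = 0.34] regions. The tone × region interaction was significant, F(2, 96) = 5.61, p = 0.008, ηp2 = 0.11. Tone violation elicited a larger negativity than tone appropriate over central [F(1, 48) = 16.10, p < 0.001, ηp2 = 0.25] and posterior [F(1, 48) = 45.65, p < 0.001, ηp2 = 0.49] regions. The group × vowel × tone interaction was significant, F(1, 48) = 6.66, p = 0.013, ηp2 = 0.12. In the musician group, when the tone was violated, vowel violation elicited a larger negativity than vowel appropriate, F(1, 48) = 5.14, p = 0.028, ηp2 = 0.10; when the vowel was violated, tone violation elicited a larger negativity than tone appropriate, F(1, 48) = 12.94, p = 0.001, ηp2 = 0.21. In the control group, when the tone was appropriate, vowel violation elicited a larger negativity than vowel appropriate, F(1, 48) = 15.07, p < 0.001, ηp2 = 0.24; when the vowel was appropriate, tone violation elicited a larger negativity than tone appropriate, F(1, 48) = 10.88, p = 0.002, ηp2 = 0.19.

At lateral sites, the vowel × region interaction was significant, F(2, 96) = 10.97, p = 0.001, ηp2 = 0.19. Vowel violation elicited a larger negativity than vowel appropriate over central [F(1, 48) = 13.38, p = 0.001, ηp2 = 0.22] and posterior [F(1, 48) = 22.22, p < 0.001, ηp2 = 0.32] regions. The tone × region interaction was significant, F(2, 96) = 12.54, p < 0.001, ηp2 = 0.21. Tone violation elicited a larger negativity than tone appropriate over central [F(1, 48) = 21.35, p < 0.001, ηp2 = 0.31] and posterior [F(1, 48) = 27.69, p < 0.001, ηp2 = 0.37] regions. The group × vowel × tone interaction was significant, F(1, 48) = 5.60, p = 0.022, ηp2 = 0.10. In the musician group, when the tone was violated, vowel violation elicited a marginally larger negativity than vowel appropriate, F(1, 48) = 2.92, p = 0.094, ηp2 = 0.06; when the vowel was violated, tone violation elicited a larger negativity than tone appropriate, F(1, 48) = 9.65, p = 0.003, ηp2 = 0.17. In the control group, when the tone was appropriate, vowel violation elicited a larger negativity than vowel appropriate, F(1, 48) = 12.04, p = 0.001, ηp2 = 0.20; when the vowel was appropriate, tone violation elicited a larger negativity than tone appropriate, F(1, 48) = 8.27, p = 0.006, ηp2 = 0.15 (see Figure 3).

In sum, in this time window both groups further analyzed, at the end of the poem, the tone and vowel violations that had occurred inside it. The musician group, however, analyzed the consistency of the vowel or tone only when the other dimension was violated, whereas the control group did so only when the other dimension was appropriate.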

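4 Discussion

The present study presented unfamiliar seven-character quatrains in a rhyme judgment task to examine how a musician group and a control group integrate prosodic information at the end of a quatrain. Behaviorally, the two groups did not differ in accuracy, and both made fewer errors in the tone and vowel violation conditions than in the appropriate conditions; only the musician group responded significantly faster in the tone violation conditions than in the tone appropriate conditions. The ERP results showed clear group differences in the integration of prosodic information. In the 100-300 ms window, the control group showed no significant effect, whereas the musician group analyzed the consistency of the vowel (or tone) when the tone (or vowel) was appropriate, which elicited a larger positivity; when the vowel was violated, tone violation elicited a smaller positivity than tone appropriate. In the 300-750 ms window, both groups integrated, at the end of the quatrain, the vowel and tone violations that had occurred inside it. The control group, however, analyzed the consistency of the vowel (or tone) and showed a negativity when the tone (or vowel) was appropriate, whereas the musician group did so when the tone (or vowel) was violated. These results indicate that both groups further analyze poem-internal rhyme violations at the end of the quatrain, but that musical training shapes how this analysis unfolds. Long-term musical training may thus change the cortical response pattern for prosodic processing, making musicians faster at processing speech prosody and more sensitive to changes in tone information.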

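4.1 Poem-final integration of prosodic information

The behavioral finding that appropriate conditions yielded lower accuracy and slower responses than violation conditions is consistent with previous studies (Schirmer et al., 2005, using Cantonese sentences with high cloze probability; Hu et al., 2012, using idioms; Li et al., 2014, using verse). In the present experiment, this difference may arise because a participant who detects a mismatch at the end of the first couplet can immediately classify the quatrain as a rhyme violation, which is relatively easy. To classify a quatrain as appropriate, by contrast, the participant has to hear the whole quatrain and confirm that every position is appropriate, which is relatively harder.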

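More importantly, both the musician group and the control group showed a reliable poem-final integration process triggered by rhyme violations inside the quatrain. Listeners therefore not only integrate sentence-internal syntactic and semantic violations at the end of a sentence (Bohan et al., 2012; Ditman et al., 2007; Molinaro et al., 2008) but also perform a similar integration for prosodic violations. Specifically, from about 300 ms after the onset of the final character of the quatrain, poem-internal tone and vowel violations both elicited a centro-posterior negativity relative to the appropriate condition, and this effect lasted until 750 ms or longer, in line with previous studies of sentence-final syntactic and semantic integration (Bohan et al., 2012; Molinaro et al., 2008; Osterhout & Holcomb, 1993; Osterhout & Nicol, 1999).

There are three possible interpretations of this negativity. First, its latency and scalp distribution match the classic N400, which is usually interpreted as reflecting semantic processing (Kutas & Federmeier, 2011). Previous studies found that semantic violations still elicit an N400 even when participants are asked to attend only to the prosodic properties of the materials (duration or rhyme), which reflects the automaticity of semantic processing of speech (Magne et al., 2007; Marie et al., 2011; Perrin & García-Larrea, 2003). Although the present study used low-familiarity poems, tone and vowel both play an important role in recognizing Chinese characters, so the prosodic violations caused by tone and vowel changes may have made poem-final semantic integration more difficult. Second, the negativity may directly reflect difficulty in integrating prosodic information. When participants make rhyme judgments on successively presented words, non-rhyming items elicit a posterior N400 even when they are meaningless pseudowords (Coch, Grossi, Skendzel, & Neville, 2005; Praamstra & Stegeman, 1993). The N400 is therefore sensitive not only to semantic integration but also to phonological information during word processing (Chen et al., 2016; Li et al., 2014). On this account, the present results suggest that, in a rhyme judgment task, listeners re-examine the prosodic information of the whole poem at its end, and a stimulus inside the quatrain that does not match the expected prosody makes this poem-final integration harder. Third, different types of sentence-internal violation (syntactic, semantic, prosodic) reliably elicit sentence-final negativities that do not differ clearly in latency or distribution (Bohan et al., 2012; Molinaro et al., 2008; Osterhout & Holcomb, 1993; Osterhout & Nicol, 1999). This suggests that, under task demands, these violations undergo an undifferentiated re-integration at the end of the sentence; the negativity may reflect difficulty in integrating the erroneous element into a coherent overall representation of the utterance, with a larger amplitude indicating greater difficulty. Whichever interpretation holds, the present study shows that both groups integrate poem-internal tone and vowel violations at the end of the poem.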

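Previous studies that used violation paradigms to investigate speech prosody focused on the immediate processing of tone and vowel violations. Most found that vowel information is processed faster than tone information and that, even for native speakers of tone languages, vowels exert a stronger influence on word recognition and semantic constraint (Tong, Francis, & Gandour, 2008; Hu et al., 2012; Huang et al., 2018; Li et al., 2014). The study most closely related to the present one (Li et al., 2014, with non-musician participants) also found a processing advantage for vowel information. Those authors created prosodic violations by changing the tone and vowel of the final character of the first couplet of seven-character quatrains. They found that, at 300-500 ms, vowel violation elicited a larger negativity (N450) than vowel appropriate; at 600-1000 ms, tone violation elicited a larger centro-posterior positivity (LPC) than tone appropriate, and, when the tone was appropriate, vowel violation elicited a larger posterior positivity (LPC) than vowel appropriate. The present study examined poem-final prosodic integration in musicians and controls. In the control group, at 300-750 ms, vowel violation elicited a larger negativity than vowel appropriate when the tone was appropriate, and tone violation elicited a larger negativity than tone appropriate when the vowel was appropriate. Comparing the two studies, during immediate processing of a rhyme violation inside a poem, listeners detect vowel violations faster than tone violations, and tone and vowel interact only in a late window (600-1000 ms). Poem-final prosodic integration, by contrast, is a deeper integration of the tone and vowel violations that occurred inside the quatrain: integration of tone and vowel begins simultaneously about 300 ms after the onset of the poem-final character and elicits a sustained centro-posterior negativity. In short, poem-final prosodic integration in the control group differs from the immediate processing of poem-internal prosodic information and reflects a process specific to the poem-final position.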

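4.2 Processing advantage of the musician group

Both groups analyzed the consistency of the vowel (or tone) when the tone (or vowel) was appropriate, but the musician group did so earlier (100-300 ms) than the control group (300-750 ms), and the effect appeared mainly as an early P200-like positivity. Previous research shows that long-term, demanding musical training changes the structure and function of brain circuits and thereby produces language processing advantages through enhanced low-level perception and high-level cognitive control, so that musicians outperform nonmusicians on a number of tasks (Bidelman et al., 2014; James et al., 2014; Nan et al., 2018; Zioga et al., 2016). The positivity observed in the musician group at 100-300 ms is consistent with earlier reports of musicians' advantages in music and language. For example, musicians showed a larger positivity than nonmusicians at 150-250 ms when processing sentence prosody (syllable duration) (Marie et al., 2011). Short-term auditory perceptual training also increases the amplitude of early positivities (Atienza, Cantero, & Dominguez-Marin, 2002; Reinke, He, Wang, & Alain, 2003; Tremblay, Kraus, McGee, Ponton, & Otis, 2001), which suggests that the positivity in this window may index training-related neural plasticity (Marie et al., 2011; Reinke et al., 2003; Shahin, Bosnyak, Trainor, & Roberts, 2003); a larger positivity may reflect increased neural synchrony (Shahin et al., 2003) or the response of a larger neuronal population (Reinke et al., 2003).

Previous researchers have labeled the positivity in this window P200 and regarded it as an exogenous component independent of attentional state that reflects automatic processing of low-level acoustic features (Crowley & Colrain, 2004; Shahin, Roberts, Pantev, Trainor, & Ross, 2005) or rapid attentional capture (Carpenter, Cranford, Hymel, de Chicchis, & Holbert, 2002; Fan et al., 2016); it may also be related to early phonological processing load (Huang, Yang, Zhang, & Guo, 2014; Marie et al., 2011). All of these interpretations come from studies of the immediate processing of a target stimulus. In the present study, the poem-final character did not differ between conditions. Although the positivity appeared early, the experimental manipulation occurred inside the quatrain, so the effect more likely reflects rapid detection of the poem-internal rhyme violation and an initial integration of prosodic information. For the musician group, integration was less difficult in the double violation condition (vowel violated, tone violated) than in a single violation condition (e.g., vowel violated, tone appropriate), and the positivity was correspondingly smaller. Likewise, integrating a single violation (vowel appropriate and tone violated, or vowel violated and tone appropriate) consumed more cognitive resources than the fully appropriate condition and elicited a larger positivity. The control group, by contrast, showed no significant effect in this window, which may indicate that nonmusicians cannot rapidly detect and integrate poem-internal rhyme violations at the end of the quatrain.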

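Nevertheless, the control group also analyzed poem-internal rhyme violations at the end of the quatrain: when the tone or vowel was appropriate, an inconsistency in the other dimension elicited an N400-like negativity starting at about 300 ms. Whether this effect reflects semantic integration (Magne et al., 2007; Marie et al., 2011; Perrin & García-Larrea, 2003) or prosodic integration (Chen et al., 2016; Li et al., 2014), it resembles the effects found in earlier studies of the immediate processing of tone and vowel (Schirmer et al., 2005; Hu et al., 2012; Li et al., 2014). In this window, the musician group continued to analyze tone and vowel consistency only when the other dimension was violated. This suggests that the musician group had largely completed prosodic integration in the earlier stage, and that their further analysis of tone and vowel violations in this window reflects more fine-grained perceptual processing of prosodic information. Long-term musical training has been found to improve working memory span and updating (Nutley, Darki, & Klingberg, 2014; Slevc, Davey, Buschkuehl, & Jaeggi, 2016), including verbal working memory (Clayton et al., 2016; Hansen, Wallentin, & Vuust, 2013; Roden, Kreutz, & Bongard, 2012). To complete the rhyme judgment task, listeners must dynamically store the rhyme information of the poem and retrieve it at the end of the poem to respond. The musician group may be better than the control group at mobilizing information held in working memory, which would allow more fine-grained and deeper processing of tone and vowel.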

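Studies that used a passive oddball paradigm to examine how musical training affects pre-attentive tone processing found that tone changes elicit larger MMN and P3a components in musicians than in nonmusicians (Nan et al., 2018; Tang, Xiong, Zhang, Dong, & Nan, 2016). In the present study, only the musician group responded significantly faster in the tone violation conditions than in the tone appropriate conditions, and, even when the vowel was violated, the musician group still processed the appropriateness of the tone in depth, as shown by the early positivity and the late negativity. This may indicate that musicians remain more sensitive to tone information under conscious attention. Previous studies have found that musicians identify lexical tones better than nonmusicians whether their native language is tonal or non-tonal (Delogu, Lampis, & Belardinelli, 2010; Gottfried, Staby, & Ziemer, 2004; Cooper & Wang, 2012; Tang et al., 2016). The present results further suggest that, in delayed integration as well as in immediate tone processing, musicians devote more attentional resources to tone than nonmusicians and are more sensitive to it.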

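According to the OPERA hypothesis, musical training substantially improves the perception of basic acoustic cues and general cognitive abilities, accompanied by changes in brain structure and function, and this provides the basis for transfer from musical ability to language. The present study found that long-term musical training affects the integration of prosodic information in verse, with the musician group showing faster and more fine-grained neural integration at the cortical level. This transfer effect in the language domain supports the OPERA hypothesis and extends existing work on the facilitation of immediate prosodic processing by musical training to the integration of prosodic information over a whole utterance. In addition, the present study found a processing advantage under conscious, active attention in musicians whose native language is tonal, which indicates that the influence of musical training is broad: it appears not only in simple sound perception but also in more complex, higher-level cognitive processing.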

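5 Conclusion

Using ERPs and a rhyme judgment task on seven-character quatrains, the present study examined how a musician group and a control group integrate prosodic information at the end of a poem. Both groups showed integration difficulty caused by a vowel (or tone) violation when the tone (or vowel) was appropriate, but at different stages: the musician group began and rapidly completed integration early, whereas the control group began only in a later time window. In addition, the musician group was sensitive to tone information from the early stage onward: even when the vowel was violated, the consistency of tone information still affected integration, and this influence persisted throughout processing. In the late integration stage, when the tone was violated, a vowel violation also increased integration difficulty for the musician group. In sum, compared with the control group, the musician group processed prosodic violations (especially tone violations) more sensitively and rapidly, and responded in a more finely differentiated way to different violation types.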

References

Development of a short form for the Raven Advanced Progressive Matrices Test

The time course of neural changes underlying auditory perceptual learning

Cross-domain effects of music and language experience on the representation of pitch in the human auditory brainstem

Coordinated plasticity in brainstem and auditory cortex contributes to enhanced categorical speech perception in musicians

The processing of good-fit semantic anomalies: An ERP investigation

The interplay of discourse congruence and lexical association during sentence processing: Evidence from ERPs and eye tracking

DOI:10.1016/j.jml.2006.07.005; PMID:17218992

Electrophysiologic signs of attention versus distraction in a binaural listening task

The effect of musical training on executive functions

音乐训练对执行功能的影响

Listening to musical rhythms recruits motor regions of the brain

Prosodic expectations in silent reading: ERP evidence from rhyme scheme and semantic congruence in classic Chinese poems

Twelve months of active musical training in 8- to 10-year-old children enhances the preattentive processing of syllabic duration and voice onset time

Executive function, visual attention and the cocktail party problem in musicians and non-musicians

ERP nonword rhyming effects in children and adults

The influence of linguistic and musical experience on Cantonese word learning

DOI:10.1121/1.4714355; PMID:22712948

A review of the evidence for P2 being an independent component process: Age, sleep and modality

Prosody and language comprehension

Differences in the perception and time course of syntactic and semantic violations

From melody to lexical tone: Musical ability enhances specific aspects of foreign language perception

DOI:10.1080/09541440802708136

Potent prosody: Comparing the effects of distal prosody, proximal prosody, and semantic context on word segmentation

DOI:10.1016/j.jml.2010.06.003

An investigation of concurrent ERP and self-paced reading methodologies

Negative emotion weakens the degree of self-reference effect: Evidence from ERPs

Music training for the development of speech segmentation

The effects of musical training on verbal memory

Musical experience and Mandarin tone discrimination and imitation

Interplay between syntax and semantics during sentence comprehension: ERP effects of combining syntactic and semantic violations

Working memory and musical competence of musicians and non-musicians

Music and speech prosody: A common rhythm

Dissociation of tone and vowel processing in Mandarin idioms

The time course of spoken word recognition in Mandarin Chinese: A unimodal ERP study

DOI:10.1016/j.neuropsychologia.2014.08.015; PMID:25172388

Tonal and vowel information processing in Chinese spoken word recognition: An event-related potential study

DOI:10.1097/WNR.0000000000000912

Musical training intensity yields opposite effects on grey matter density in cognitive versus sensorimotor networks

The time course of world knowledge integration in sentence comprehension

世界知识在句子理解中的整合时程

Brain response to prosodic boundary cues depends on boundary position

Communication of emotions in vocal expression and music performance: Different channels, same code?

A theory of reading: from eye fixations to comprehension

Paradigms and processes in reading comprehension

On the lateralization of emotional prosody: An event-related functional MR investigation

The encoding of vowels and temporal speech cues in the auditory cortex of professional musicians: An EEG study

DOI:10.1016/j.neuropsychologia.2013.04.007; PMID:23664833

Building up linguistic context in schizophrenia: Evidence from self-paced reading

Thirty years and counting: Finding meaning in the N400 component of the event related brain potential (ERP)

Identification of Mandarin tones by English-speaking musicians and nonmusicians

DOI:10.1121/1.2990713; PMID:19045807

Neural processing of ambiguous Chinese phrases of stutters

口吃者加工汉语歧义短语的神经过程

Chinese tone and vowel processing exhibits distinctive temporal characteristics: An electrophysiological perspective from classical Chinese poem processing

The cognitive processing of prosodic boundary and its related brain effect in quatrain

绝句韵律边界的认知加工及其脑电效应

Intonation processing in congenital amusia: Discrimination, identification and imitation

Influence of syllabic lengthening on semantic processing in spoken French: Behavioral and electrophysiological evidence

DOI:10.1093/cercor/bhl174; PMID:17264253

Musician children detect pitch violations in both music and language better than nonmusician children: Behavioral and electrophysiological approaches

Musicians and the metric structure of words

DOI:10.1162/jocn.2010.21413

PMID:20044890

The present study aimed to examine the influence of musical expertise on the metric and semantic aspects of speech processing. In two attentional conditions (metric and semantic tasks), musicians listened to short sentences ending in trisyllabic words that were semantically and/or metrically congruous or incongruous. Both ERPs and behavioral data were analyzed and the results were compared to previous nonmusicians' data. Regarding the processing of meter, results showed that musical expertise influenced the automatic detection of the syllable temporal structure (P200 effect), the integration of metric structure and its influence on word comprehension (N400 effect), as well as the reanalysis of metric violations (P600 and late positivities effects). By contrast, results showed that musical expertise did not influence the semantic level of processing. These results are discussed in terms of transfer of training effects from music to speech processing.

Musicians detect pitch violation in a foreign language better than nonmusicians: Behavioral and electrophysiological evidence

DOI:10.1162/jocn.2007.19.9.1453

PMID:17714007

The aim of this study was to determine whether musical expertise influences the detection of pitch variations in a foreign language that participants did not understand. To this end, French adults, musicians and nonmusicians, were presented with sentences spoken in Portuguese. The final words of the sentences were prosodically congruous (spoken at normal pitch height) or incongruous (pitch was increased by 35% or 120%). Results showed that when the pitch deviations were small and difficult to detect (35%: weak prosodic incongruities), the level of performance was higher for musicians than for nonmusicians. Moreover, analysis of the time course of pitch processing, as revealed by the event-related brain potentials to the prosodically congruous and incongruous sentence-final words, showed that musicians were, on average, 300 msec faster than nonmusicians to categorize prosodically congruous and incongruous endings. These results are in line with previous ones showing that musical expertise, by increasing discrimination of pitch (a basic acoustic parameter equally important for music and speech prosody), does facilitate the processing of pitch variations not only in music but also in language. Finally, comparison with previous results [Schön, D., Magne, C., & Besson, M. The music of speech: Music training facilitates pitch processing in both music and language. Psychophysiology, 41, 341-349, 2004] points to the influence of semantics on the perception of acoustic prosodic cues.

Musical aptitude and second language pronunciation skills in school-aged children: Neural and behavioral evidence

DOI:10.1016/j.brainres.2007.11.042

PMID:18182165

The main focus of this study was to examine the relationship between musical aptitude and second language pronunciation skills. We investigated whether children with superior performance in foreign language production represent musical sound features more readily in the preattentive level of neural processing compared with children with less-advanced production skills. Sound processing accuracy was examined in elementary school children by means of event-related potential (ERP) recordings and behavioral measures. Children with good linguistic skills had better musical skills as measured by the Seashore musicality test than children with less accurate linguistic skills. The ERP data accompany the results of the behavioral tests: children with good linguistic skills showed more pronounced sound-change evoked activation with the music stimuli than children with less accurate linguistic skills. Taken together, the results imply that musical and linguistic skills could partly be based on shared neural mechanisms.

A deeper reanalysis of a superficial feature: An ERP study on agreement violations

DOI:10.1016/j.brainres.2008.06.064

PMID:18619420

A morphosyntactic agreement violation during reading elicits a well-documented biphasic ERP pattern (LAN+P600). The cognitive variables that affect both the amplitude of the two components and the topography of the anterior negativity are still debated. We studied the ERP correlates of the violation of a specific agreement feature based on the phonology of the critical word. This was compared with the violation of a lexical feature, namely grammatical gender. These two features are different both in the level of representation involved in the agreement computation and in terms of their role in establishing structural relations with possible following constituents. The ERP pattern elicited by the two agreement violations showed interesting dissociations. The LAN was distributed ventrally for both types of violation, but showed a central extension for the gender violation. The P600 showed an amplitude modulation: this component was larger for phonotactic violations in its late time window (700-900 ms). The former result is indicative of a difference in the brain structures recruited for the processing of violations at different levels of representation. The P600 effect is interpreted assuming a hierarchical relation among features that forces a deeper reanalysis of the violation involving a word form property. Finally the two features elicit distinct end-of-sentence wrap-up effects, consistent with the different roles they play in the processing of the whole sentence.

Examining neural plasticity and cognitive benefit through the unique lens of musical training

DOI:10.1016/j.heares.2013.09.012

Relationships between behavior, brainstem and cortical encoding of seen and heard speech in musicians and non-musicians

DOI:10.1016/j.heares.2008.04.013

PMID:18562137

Musicians have a variety of perceptual and cortical specializations compared to non-musicians. Recent studies have shown that potentials evoked from primarily brainstem structures are enhanced in musicians, compared to non-musicians. Specifically, musicians have more robust representations of pitch periodicity and faster neural timing to sound onset when listening to sounds or both listening to and viewing a speaker. However, it is not known whether musician-related enhancements at the subcortical level are correlated with specializations in the cortex. Does musical training shape the auditory system in a coordinated manner or in disparate ways at cortical and subcortical levels? To answer this question, we recorded simultaneous brainstem and cortical evoked responses in musician and non-musician subjects. Brainstem response periodicity was related to early cortical response timing across all subjects, and this relationship was stronger in musicians. Peaks of the brainstem response evoked by sound onset and timbre cues were also related to cortical timing. Neurophysiological measures at both levels correlated with musical skill scores across all subjects. In addition, brainstem and cortical measures correlated with the age musicians began their training and the years of musical practice. Taken together, these data imply that neural representations of pitch, timing and timbre cues and cortical response timing are shaped in a coordinated manner, and indicate corticofugal modulation of subcortical afferent circuitry.

The facilitation effect of music learning on speech processing

DOI:10.3724/SP.J.1042.2017.01844

音乐学习对语言加工的促进作用

Piano training enhances the neural processing of pitch and improves speech perception in Mandarin-speaking children

DOI:10.1073/pnas.1808412115

Congenital amusia in speakers of a tone language: Association with lexical tone agnosia

DOI:10.1093/brain/awq178

PMID:20685803

Congenital amusia is a neurogenetic disorder that affects the processing of musical pitch in speakers of non-tonal languages like English and French. We assessed whether this musical disorder exists among speakers of Mandarin Chinese who use pitch to alter the meaning of words. Using the Montreal Battery of Evaluation of Amusia, we tested 117 healthy young Mandarin speakers with no self-declared musical problems and 22 individuals who reported musical difficulties and scored two standard deviations below the mean obtained by the Mandarin speakers without amusia. These 22 amusic individuals showed a similar pattern of musical impairment as did amusic speakers of non-tonal languages, by exhibiting a more pronounced deficit in melody than in rhythm processing. Furthermore, nearly half the tested amusics had impairments in the discrimination and identification of Mandarin lexical tones. Six showed marked impairments, displaying what could be called lexical tone agnosia, but had normal tone production. Our results show that speakers of tone languages such as Mandarin may experience musical pitch disorder despite early exposure to speech-relevant pitch contrasts. The observed association between the musical disorder and lexical tone difficulty indicates that the pitch disorder as defining congenital amusia is not specific to music or culture but is rather general in nature.

Music practice is associated with development of working memory during childhood and adolescence

DOI:10.3389/fnhum.2013.00926

PMID:24431997

Event-related potentials and syntactic anomaly: Evidence of anomaly detection during the perception of continuous speech

Event-related brain potentials elicited by failure to agree

On the distinctiveness, independence, and time course of the brain responses to syntactic and semantic anomalies

Linguistic multifeature MMN paradigm for extensive recording of auditory discrimination profiles

DOI:10.1111/j.1469-8986.2011.01214.x

PMID:21564122

We studied whether a multifeature mismatch negativity (MMN) paradigm using naturally produced speech stimuli is feasible for studies of auditory discrimination accuracy of adult participants. A naturally produced trisyllabic pseudoword was used in the paradigm, and MMNs were recorded to changes that were acoustic (changes in fundamental frequency or intensity) or potentially phonological (changes in vowel identity or vowel duration). All the different changes were presented in three different word segments (initial, middle, or final syllable). All changes elicited an MMN response, but the vowel duration change elicited a different response pattern than the other deviant types. Changes in vowel duration and identity also had an effect on MMN lateralization. Our results show that assessing speech sound discrimination of several features in word context is possible in a short recording time (30 min) with the multifeature paradigm.

Language, music, syntax and the brain

DOI:10.1038/nn1082

PMID:12830158

The comparative study of music and language is drawing an increasing amount of research interest. Like language, music is a human universal involving perceptually discrete elements organized into hierarchically structured sequences. Music and language can thus serve as foils for each other in the study of brain mechanisms underlying complex sound processing, and comparative research can provide novel insights into the functional and neural architecture of both domains. This review focuses on syntax, using recent neuroimaging data and cognitive theory to propose a specific point of convergence between syntactic processing in language and music. This leads to testable predictions, including the prediction that syntactic comprehension problems in Broca's aphasia are not selective to language but influence music perception as well.

Can nonlinguistic musical training change the way the brain processes speech? The expanded OPERA hypothesis

DOI:10.1016/j.heares.2013.08.011

PMID:24055761

An empirical comparison of rhythm in language and music

DOI:10.1016/s0010-0277(02)00187-7

PMID:12499110

Musicologists and linguists have often suggested that the prosody of a culture's spoken language can influence the structure of its instrumental music. However, empirical data supporting this idea have been lacking. This has been partly due to the difficulty of developing and applying comparable quantitative measures to melody and rhythm in speech and music. This study uses a recently-developed measure for the study of speech rhythm to compare rhythmic patterns in English and French language and classical music. We find that English and French musical themes are significantly different in this measure of rhythm, which also differentiates the rhythm of spoken English and French. Thus, there is an empirical basis for the claim that spoken prosody leaves an imprint on the music of a culture.

Comparing the rhythm and melody of speech and music: The case of British English and French

Processing prosodic and musical patterns: A neuropsychological investigation

DOI:10.1006/brln.1997.1862

PMID:9448936

To explore the relationship between the processing of melodic and rhythmic patterns in speech and music, we tested the prosodic and musical discrimination abilities of two

Modulation of the N400 potential during auditory phonological/semantic interaction

DOI:10.1016/s0926-6410(03)00078-8

PMID:12763190

The processing of phonological and semantic word attributes has been commonly explored with electrophysiological methods using simple contexts where competition between features is eliminated. Conversely, the interaction between phonological and semantic attributes has not been systematically examined. We therefore recorded an event-related electrophysiological marker of word discordance, the N400, in response to sequences of auditory word pairs containing semantic incongruences, phonological discordances, or a mixture of the two. N400 enhancement to semantically unrelated words was systematically observed, whether the subjects heard the sequences passively (no instruction) or actively (semantic judgement task), and even in contexts where the task did not concern semantic attributes. In contrast, the N400 effect to phonologically unrelated (non-rhyming) words was exclusively obtained in the active situation (phonological judgment), while it disappeared in passive conditions and during semantic/phonological interference. This suggests that the detection of semantic incongruences is a more robust and automatized mechanism than that of phonological ones, and tends to occlude this latter when both features are in competition. Our data also provide new elements supporting the persistence of the semantic N400 during 'shallow' word processing tasks, i.e. tasks that discourage analysis of semantic aspects of the words.

Phonological effects on the auditory N400 event-related brain potential

DOI:10.1016/0926-6410(93)90013-u

PMID:8513242

We report 3 experiments exploring the responsiveness of the auditory N400 event-related potential to the phonological relations between word or non-word targets and preceding prime words. When subjects had to decide whether primes and targets rhymed, non-rhyming words produced greater negativity in the N400 time range than rhyming words. The same effect was obtained when these targets were spoken by another voice than the prime words, suggesting that the effect is determined by phonological factors, and not merely by a physical-acoustic mismatch (Experiment 1). In the rhyming task, the differential N400 for non-rhyming vs. rhyming words was equally pronounced for non-rhyming vs. rhyming non-words (Experiment 2). In a lexical decision task on the same stimuli, a difference between non-rhyming and rhyming targets was obtained for words, but not for non-words (Experiment 3). The results show that the auditory N400 is sensitive to phonological variables. It is further proposed that phonological effects on the auditory N400 are not manifestations unique to phonological processes that demand conscious attention, but may also reflect operations that are performed automatically during auditory word recognition.

The effect of clause wrap-up on eye movements during reading

Perceptual learning modulates sensory evoked response during vowel segregation

DOI:10.1016/s0926-6410(03)00202-7

PMID:14561463

With practice, people become better at discriminating two similar stimuli, such as two sounds. The neural mechanisms that underlie this type of learning have been of interest to researchers investigating neural plasticity associated with learning and recovery of function following stroke. We utilized event related potentials (ERP) to study the neural substrates underlying auditory discrimination learning. Stimuli were five steady-state American English vowels. On each trial, participants were presented with a pair of vowels created by summing together the digital waveforms of two different vowels. Listeners were instructed to identify both vowels in the pair. ERPs were recorded during two sessions separated by 1 week. Half of the participants practised the discrimination task during the intervening week while the other half served as controls and did not receive any training. Trained listeners showed greater improvement in accuracy than untrained participants. In both groups, vowels generated N1 and P2 waves at the fronto-central and temporal scalp regions. The behavioral effects of training were paralleled by decreased N1 and P2 latencies as well as enhanced P2 amplitude in the trained compared with untrained listeners. The effects of training on sensory evoked responses are consistent with the proposal that perceptual learning is associated with changes in sensory cortices.

Effects of a school-based instrumental music program on verbal and visual memory in primary school children: A longitudinal study

Brain responses to segmentally and tonally induced semantic violations in Cantonese

DOI:10.1162/0898929052880057

PMID:15701235

The present event-related potential (ERP) study examined the role of tone and segmental information in Cantonese word processing. To this end, participants listened to sentences that were either semantically correct or contained a semantically incorrect word. Semantically incorrect words differed from the most expected sentence completion at the tone level, at the segmental level, or at both levels. All semantically incorrect words elicited an increased frontal negativity that was maximal 300 msec following word onset and an increased centroparietal positivity that was maximal 650 msec following word onset. There were differences between completely incongruous words and the other two violation conditions with respect to the latency and amplitude of the ERP effects. These differences may be due to differences in the onset of acoustic deviation of the presented from the expected word and different mechanisms involved in the processing of complete as compared to partial acoustic deviations. Most importantly, however, tonally and segmentally induced semantic violations were comparable. This suggests that listeners access tone and segmental information at a similar point in time and that both types of information play comparable roles during word processing in Cantonese.

The music of speech: Music training facilitates pitch processing in both music and language

DOI:10.1111/1469-8986.00172.x

PMID:15102118

The main aim of the present experiment was to determine whether extensive musical training facilitates pitch contour processing not only in music but also in language. We used a parametric manipulation of final notes' or words' fundamental frequency (F0), and we recorded behavioral and electrophysiological data to examine the precise time course of pitch processing. We compared professional musicians and nonmusicians. Results revealed that within both domains, musicians detected weak F0 manipulations better than nonmusicians. Moreover, F0 manipulations within both music and language elicited similar variations in brain electrical potentials, with overall shorter onset latency for musicians than for nonmusicians. Finally, the scalp distribution of an early negativity in the linguistic task varied with musical expertise, being largest over temporal sites bilaterally for musicians and largest centrally and over left temporal sites for nonmusicians. These results are taken as evidence that extensive musical training influences the perception of pitch contour in spoken language.

Enhancement of neuroplastic P2 and N1c auditory evoked potentials in musicians

P2 and N1c components of the auditory evoked potential (AEP) have been shown to be sensitive to remodeling of the auditory cortex by training at pitch discrimination in nonmusician subjects. Here, we investigated whether these neuroplastic components of the AEP are enhanced in musicians in accordance with their musical training histories. Highly skilled violinists and pianists and nonmusician controls listened under conditions of passive attention to violin tones, piano tones, and pure tones matched in fundamental frequency to the musical tones. Compared with nonmusician controls, both musician groups evidenced larger N1c (latency, 138 msec) and P2 (latency, 185 msec) responses to the three types of tonal stimuli. As in training studies with nonmusicians, N1c enhancement was expressed preferentially in the right hemisphere, where auditory neurons may be specialized for processing of spectral pitch. Equivalent current dipoles fitted to the N1c and P2 field patterns localized to spatially differentiable regions of the secondary auditory cortex, in agreement with previous findings. These results suggest that the tuning properties of neurons are modified in distributed regions of the auditory cortex in accordance with the acoustic training history (musical- or laboratory-based) of the subject. Enhanced P2 and N1c responses in musicians need not be considered genetic or prenatal markers for musical skill.

Modulation of P2 auditory-evoked responses by the spectral complexity of musical sounds

DOI:10.1097/01.wnr.0000185017.29316.63

PMID:16237326

We investigated whether N1 and P2 auditory-evoked responses are modulated by the spectral complexity of musical sounds in pianists and non-musicians. Study participants were presented with three variants of a C4 piano tone equated for temporal envelope but differing in the number of harmonics contained in the stimulus. A fourth tone was a pure tone matched to the fundamental frequency of the piano tones. A simultaneous electroencephalographic/magnetoencephalographic recording was made. P2 amplitude was larger in musicians and increased with spectral complexity preferentially in this group, but N1 did not. The results suggest that P2 reflects the specific features of acoustic stimuli experienced during musical practice and point to functional differences in P2 and N1 that relate to their underlying mechanisms.

Tuning the mind: Exploring the connections between musical ability and executive functions

DOI:10.1016/j.cognition.2016.03.017

PMID:27107499

A growing body of research suggests that musical experience and ability are related to a variety of cognitive abilities, including executive functioning (EF). However, it is not yet clear if these relationships are limited to specific components of EF, limited to auditory tasks, or reflect very general cognitive advantages. This study investigated the existence and generality of the relationship between musical ability and EFs by evaluating the musical experience and ability of a large group of participants and investigating whether this predicts individual differences on three different components of EF - inhibition, updating, and switching - in both auditory and visual modalities. Musical ability predicted better performance on both auditory and visual updating tasks, even when controlling for a variety of potential confounds (age, handedness, bilingualism, and socio-economic status). However, musical ability was not clearly related to inhibitory control and was unrelated to switching performance. These data thus show that cognitive advantages associated with musical ability are not limited to auditory processes, but are limited to specific aspects of EF. This supports a process-specific (but modality-general) relationship between musical ability and non-musical aspects of cognition.

Early musical training and white-matter plasticity in the corpus callosum: Evidence for a sensitive period

DOI:10.1523/JNEUROSCI.3578-12.2013

PMID:23325263

Training during a sensitive period in development may have greater effects on brain structure and behavior than training later in life. Musicians are an excellent model for investigating sensitive periods because training starts early and can be quantified. Previous studies suggested that early training might be related to greater amounts of white matter in the corpus callosum, but did not control for length of training or identify behavioral correlates of structural change. The current study compared white-matter organization using diffusion tensor imaging in early- and late-trained musicians matched for years of training and experience. We found that early-trained musicians had greater connectivity in the posterior midbody/isthmus of the corpus callosum and that fractional anisotropy in this region was related to age of onset of training and sensorimotor synchronization performance. We propose that training before the age of 7 years results in changes in white-matter connectivity that may serve as a scaffold upon which ongoing experience can build.

Brain potentials indicate immediate use of prosodic cues in natural speech processing

DOI:10.1038/5757

PMID:10195205

Spoken language, in contrast to written text, provides prosodic information such as rhythm, pauses, accents, amplitude and pitch variations. However, little is known about when and how these features are used by the listener to interpret the speech signal. Here we use event-related brain potentials (ERP) to demonstrate that intonational phrasing guides the initial analysis of sentence structure. Our finding of a positive shift in the ERP at intonational phrase boundaries suggests a specific on-line brain response to prosodic processing. Additional ERP components indicate that a false prosodic boundary is sufficient to mislead the listener's sentence processor. Thus, the application of ERP measures is a promising approach for revealing the time course and neural basis of prosodic information processing.

Musical experience facilitates lexical tone processing among Mandarin speakers: Behavioral and neural evidence

DOI:10.1016/j.neuropsychologia.2016.08.003

PMID:27503769

Music and speech share many sound attributes. Pitch, as the percept of fundamental frequency, often occupies the center of researchers' attention in studies on the relationship between music and speech. One widely held assumption is that music experience may confer an advantage in speech tone processing. The cross-domain effects of musical training on non-tonal language speakers' linguistic pitch processing have been relatively well established. However, it remains unclear whether musical experience improves the processing of lexical tone for native tone language speakers who actually use lexical tones in their daily communication. Using a passive oddball paradigm, the present study revealed that among Mandarin speakers, musicians demonstrated enlarged electrical responses to lexical tone changes as reflected by the increased mismatch negativity (MMN) amplitudes, as well as faster behavioral discrimination performance compared with age- and IQ-matched nonmusicians. The current results suggest that in spite of the preexisting long-term experience with lexical tones in both musicians and nonmusicians, musical experience can still modulate the cortical plasticity of linguistic tone processing and is associated with enhanced neural processing of speech tones. Our current results thus provide the first electrophysiological evidence supporting the notion that pitch expertise in the music domain may indeed be transferable to the speech domain even for native tone language speakers.

Processing dependencies between segmental and suprasegmental features in Mandarin Chinese

Central auditory plasticity: Changes in the N1-P2 complex after speech-sound training

DOI:10.1097/00003446-200104000-00001

PMID:11324846

OBJECTIVE: To determine whether the N1-P2 complex reflects training-induced changes in neural activity associated with improved voice-onset-time (VOT) perception. DESIGN: Auditory cortical evoked potentials N1 and P2 were obtained from 10 normal-hearing young adults in response to two synthetic speech variants of the syllable /ba/. Using a repeated measures design, subjects were tested before and after training both behaviorally and neurophysiologically to determine whether there were training-related changes. In between pre- and post-testing sessions, subjects were trained to distinguish the -20 and -10 msec VOT /ba/ syllables as being different from each other. Two stimulus presentation rates were used during electrophysiologic testing (390 msec and 910 msec interstimulus interval). RESULTS: Before training, subjects perceived both the -20 msec and -10 msec VOT stimuli as /ba/. Through training, subjects learned to identify the -20 msec VOT stimulus as

Examining the relationship between skilled music training and attention

Musical experience shapes human brainstem encoding of linguistic pitch patterns

DOI:10.1038/nn1872

PMID:17351633

Music and speech are very cognitively demanding auditory phenomena generally attributed to cortical rather than subcortical circuitry. We examined brainstem encoding of linguistic pitch and found that musicians show more robust and faithful encoding compared with nonmusicians. These results not only implicate a common subcortical manifestation for two presumed cortical functions, but also a possible reciprocity of corticofugal speech and music tuning, providing neurophysiological explanations for musicians' higher language-learning ability.

Musical experience modulates categorical perception of lexical tones in native Chinese speakers

DOI:10.3389/fpsyg.2015.00436

PMID:25918511

Although musical training has been shown to facilitate both native and non-native phonetic perception, it remains unclear whether and how musical experience affects native speakers' categorical perception (CP) of speech at the suprasegmental level. Using both identification and discrimination tasks, this study compared Chinese-speaking musicians and non-musicians in their CP of a lexical tone continuum (from the high level tone, Tone1 to the high falling tone, Tone4). While the identification functions showed similar steepness and boundary location between the two subject groups, the discrimination results revealed superior performance in the musicians for discriminating within-category stimuli pairs but not for between-category stimuli. These findings suggest that musical training can enhance sensitivity to subtle pitch differences between within-category sounds in the presence of robust mental representations in service of CP of lexical tonal contrasts.

Musical training shapes neural responses to melodic and prosodic expectation

DOI:10.1016/j.brainres.2016.09.015

PMID:27622645

Current research on music processing and syntax or semantics in language suggests that music and language share partially overlapping neural resources. Pitch also constitutes a common denominator, forming melody in music and prosody in language. Further, pitch perception is modulated by musical training. The present study investigated how music and language interact on pitch dimension and whether musical training plays a role in this interaction. For this purpose, we used melodies ending on an expected or unexpected note (melodic expectancy being estimated by a computational model) paired with prosodic utterances which were either expected (statements with falling pitch) or relatively unexpected (questions with rising pitch). Participants' (22 musicians, 20 nonmusicians) ERPs and behavioural responses in a statement/question discrimination task were recorded. Participants were faster for simultaneous expectancy violations in the melodic and linguistic stimuli. Further, musicians performed better than nonmusicians, which may be related to their increased pitch tracking ability. At the neural level, prosodic violations elicited a front-central positive ERP around 150 ms after the onset of the last word/note, while musicians presented reduced P600 in response to strong incongruities (questions on low-probability notes). Critically, musicians' P800 amplitudes were proportional to their level of musical training, suggesting that expertise might shape the pitch processing of language. The beneficial aspect of expertise could be attributed to its strengthening effect of general executive functions. These findings offer novel contributions to our understanding of shared higher-order mechanisms between music and language processing on pitch dimension, and further demonstrate a potential modulation by musical expertise.