1 Introduction

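Multisensory integration (MSI) is the process by which an individual combines information arriving through different sensory modalities (vision, audition, touch, etc.) into a unified, coherent, and meaningful percept (Tang, Wu, & Shen, 2016). MSI takes several forms. The first is multisensory illusion effects, such as the McGurk effect (Tiippana, 2014) and the ventriloquist effect (Callan, Callan, & Ando, 2015). The second is multisensory performance improvement effects, such as the redundant signals effect (RSE): individuals respond faster and more accurately to simultaneously presented multisensory stimuli than to unimodal (visual or auditory) stimuli (Mishler & Neider, 2016). Other studies have shown that response times when monitoring multisensory stimuli, at either two locations or a single location, are slower than when monitoring unimodal stimuli (Santangelo, Fagioli, & Macaluso, 2010); that is, under certain conditions a multisensory inhibition effect occurs (Meredith, Nemitz, & Stein, 1987). Because most research on exogenous attention and MSI has focused on multisensory performance improvement effects, this review draws mainly on that literature.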

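Posner et al. (1980) divided attention into endogenous attention and exogenous attention. Exogenous attention, also called involuntary or stimulus-driven attention, is attention that is captured by information outside the individual without any intentional control. For example, in a quiet study room, a door slamming loudly draws everyone's attention involuntarily.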

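The relationship between attention and multisensory integration has attracted considerable interest and remains under debate. Although some researchers hold that MSI occurs independently of attention (Soto-Faraco, Navarra, & Alsius, 2004; Vroomen, Bertelson, & De Gelder, 2001), most hold that the two are closely related and have proposed different theoretical frameworks from different perspectives (Koelewijn, Bronkhorst, & Theeuwes, 2010; Macaluso et al., 2016; Talsma, Senkowski, Soto-Faraco, & Woldorff, 2010; Tang, Wu, & Shen, 2016). Most of these frameworks concern endogenous attention and MSI; only a few address exogenous attention and MSI. Drawing on existing findings, this article therefore reviews the relationship from two directions: the modulation of MSI by exogenous attention, and the modulation of exogenous attention by MSI.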

2 Modulation of multisensory integration by exogenous attention

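Our brains constantly receive large amounts of input from the external world. Multisensory integration combines information from different sensory modalities to reduce interfering information within the perceptual system, thereby facilitating the detection, identification, and localization of multisensory stimuli (Stein & Stanford, 2008). Exogenous attention likewise facilitates detection, identification, and localization. For example, an exogenous cue enhances perceptual processing at the cued location, in the same or a different modality, and speeds responses to stimuli (Spence, 2010). Because both MSI and exogenous attention facilitate stimulus processing and help us perceive the external world, researchers have begun to examine how the two are related, and in particular how exogenous attention modulates MSI.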

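The exogenous cue-target paradigm is the classic paradigm for studying exogenous attention (Posner & Cohen, 1984). A salient event (e.g., a box brightening or thickening) is presented as a cue at a peripheral location on the left or right. After an interval (stimulus onset asynchrony, SOA), participants respond to a target presented at the same location as the cue (valid cue) or at a different location (invalid cue). When the cue-target SOA is within 300 ms, response times at validly cued locations are significantly faster than at invalidly cued locations, which is called the facilitation effect. When the SOA exceeds 300 ms, however, response times at validly cued locations are significantly slower than at invalidly cued locations, which is called the inhibition of return (IOR) effect (Posner & Cohen, 1984). Van der Stoep et al. (2015) used this paradigm to examine how exogenous auditory cues modulate MSI at short SOAs (200~250 ms). A sound elicited exogenous attention, and participants responded to visual (V), auditory (A), and audiovisual (AV) targets presented on the left or right while withholding responses to targets presented at the center. Exogenous auditory cues sped responses to targets at validly cued locations; that is, they produced a spatial cueing facilitation effect. More importantly, the MSI effect was smaller at validly cued than at invalidly cued locations. In a follow-up study using the same paradigm, Van der Stoep et al. (2016) examined how the IOR effect elicited by exogenous visual cues at long SOAs (350~450 ms) modulates MSI. Although the response-time pattern reversed (an IOR effect emerged for visual targets), the MSI effect was still smaller at validly cued than at invalidly cued locations. Existing results therefore indicate that exogenous attention can modulate (reduce) multisensory integration, or at least audiovisual integration (Van der Stoep, Van der Stigchel, & Nijboer, 2015; Van der Stoep, Van der Stigchel, Nijboer, & Spence, 2016).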
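The cueing effect described above can be sketched as a simple difference score. This is a minimal illustration, not the analysis used in the cited studies: the function names and all response times are hypothetical, and a real analysis would test the difference statistically rather than classify it by sign.

```python
from statistics import mean


def cueing_effect(valid_rts, invalid_rts):
    """Mean RT at invalidly cued locations minus mean RT at validly cued locations (ms)."""
    return mean(invalid_rts) - mean(valid_rts)


def classify(effect_ms):
    """Label the sign of a cueing effect; positive means valid trials were faster."""
    if effect_ms > 0:
        return "facilitation"
    if effect_ms < 0:
        return "inhibition of return"
    return "no cueing effect"


# Hypothetical response times in milliseconds, for illustration only.
short_soa_effect = cueing_effect(valid_rts=[310, 325, 318], invalid_rts=[342, 350, 339])
long_soa_effect = cueing_effect(valid_rts=[365, 372, 380], invalid_rts=[348, 352, 341])

print(classify(short_soa_effect))
print(classify(long_soa_effect))
```

With these made-up values the short-SOA condition yields a positive difference and the long-SOA condition a negative one, mirroring the facilitation and IOR patterns in the text.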

2.1 Three theoretical hypotheses on how exogenous attention modulates multisensory integration

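Based on existing findings, researchers have proposed three hypotheses to explain how exogenous attention modulates MSI: the spatial uncertainty hypothesis, the perceptual sensitivity hypothesis, and the hypothesis of differences in signal strength between sensory modalities.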

2.1.1 Spatial uncertainty hypothesis

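In the exogenous cue-target paradigm the cue does not predict the location of the upcoming target. This raises uncertainty about target location and makes participants' responses depend more on the spatial orienting elicited by the cue. As cue-elicited orienting becomes more important, the orienting elicited by the multisensory target itself becomes less important (Van der Stoep, Spence, Nijboer, & Van der Stigchel, 2015; Van der Stoep, Van der Stigchel, & Nijboer, 2015). At validly cued locations, the spatial orienting information provided by the exogenous cue and by the multisensory target is redundant, which lowers the importance of integrating the multisensory target there and ultimately yields a smaller MSI effect at validly cued locations.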

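In addition, studies indicate that the brain integrates information from different modalities in a statistically optimal way: it weights each modality according to its reliability (Fetsch, Pouget, Deangelis, & Angelaki, 2011; Li, Yang, Sun, & Wu, 2015; Li, Yu, Wu, & Gao, 2016). During integration, prior knowledge about whether a modality's estimates are reliable is also an important factor in setting that modality's weight (Liu et al., 2010). Because the exogenous cue does not predict target location, the brain forms the prior that the spatial information the cue provides about the target is unreliable. This lowers the weights given to each modality at the validly cued location and ultimately affects the MSI effect.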
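Reliability-based weighting can be illustrated with the standard inverse-variance (maximum-likelihood) cue combination rule. The sketch below assumes independent Gaussian noise in each modality; the function names and the numbers are hypothetical, and the cited studies may use different model details.

```python
def reliability_weights(sigma_a, sigma_v):
    """Return (w_a, w_v): each weight is proportional to the modality's reliability 1/sigma^2."""
    r_a = 1.0 / sigma_a ** 2
    r_v = 1.0 / sigma_v ** 2
    total = r_a + r_v
    return r_a / total, r_v / total


def integrate(est_a, sigma_a, est_v, sigma_v):
    """Combine an auditory and a visual estimate; return (combined estimate, combined sigma)."""
    w_a, w_v = reliability_weights(sigma_a, sigma_v)
    combined = w_a * est_a + w_v * est_v
    combined_sigma = (1.0 / (1.0 / sigma_a ** 2 + 1.0 / sigma_v ** 2)) ** 0.5
    return combined, combined_sigma


# Hypothetical location estimates in degrees: a noisy auditory cue and a sharper visual cue.
w_a, w_v = reliability_weights(sigma_a=4.0, sigma_v=2.0)
combined, combined_sigma = integrate(est_a=10.0, sigma_a=4.0, est_v=0.0, sigma_v=2.0)

print(w_a, w_v)
print(combined, combined_sigma)
```

In this example the more reliable visual estimate receives the larger weight, and the combined estimate is less variable than either single-modality estimate, which is the sense in which the integration is called statistically optimal.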

2.1.2 Perceptual sensitivity hypothesis

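An exogenous spatial cue raises perceptual sensitivity at its location and correspondingly increases the perceived intensity of a target presented there immediately afterwards (Carrasco, 2011). MSI follows the principle of inverse effectiveness: stronger stimuli yield smaller MSI effects, and weaker stimuli yield larger ones (Senkowski, Saint-Amour, Höfle, & Foxe, 2011). Take the study of exogenous auditory cues as an example (Van der Stoep, Van der Stigchel, & Nijboer, 2015). At short SOAs, perceptual sensitivity is higher at validly cued than at invalidly cued locations, so targets presented there are effectively more intense, and by the principle of inverse effectiveness the MSI effect at validly cued locations is reduced.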
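Inverse effectiveness can be made concrete with a relative-gain index. The sketch below assumes one commonly used definition, the bimodal speed-up expressed as a percentage of the fastest unimodal response time; this index and all values are illustrative assumptions and are not taken from the cited studies.

```python
def multisensory_gain(rt_a, rt_v, rt_av):
    """Percentage by which the bimodal RT beats the faster of the two unimodal RTs."""
    fastest_unimodal = min(rt_a, rt_v)
    return 100.0 * (fastest_unimodal - rt_av) / fastest_unimodal


# Hypothetical mean RTs (ms) for weak and strong stimuli.
weak_gain = multisensory_gain(rt_a=420, rt_v=400, rt_av=340)
strong_gain = multisensory_gain(rt_a=300, rt_v=290, rt_av=275)

print(weak_gain, strong_gain)
```

With these made-up numbers the weak stimuli show the larger relative gain, which is the pattern the principle of inverse effectiveness predicts.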

2.1.3 Hypothesis of differences in signal strength between sensory modalities

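Previous research found that MSI effects are smaller when signal strength differs greatly between modalities and larger when signal strengths are similar (Otto, Dassy, & Mamassian, 2013). Take the study of exogenous visual cues as an example (Van der Stoep, Van der Stigchel, Nijboer, & Spence, 2016). At long SOAs, the visual cue elicited an IOR effect only for targets in the same (visual) modality and not for auditory targets. When IOR slows the processing of visual targets at the validly cued location while auditory targets are not slowed, the difference in processing speed between the visual and auditory modalities grows, and the difference in signal strength grows with it, which ultimately reduces audiovisual integration at the validly cued location.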

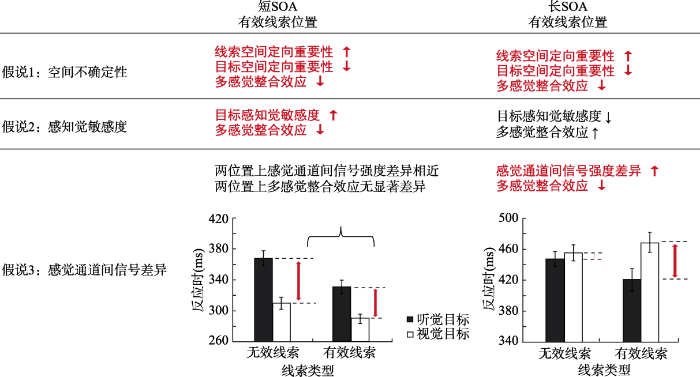

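Because the three hypotheses were proposed against different research backgrounds (different SOA conditions), this article treats temporal information as an important factor and examines each hypothesis under different SOA conditions (see Figure 1).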

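Figure 1. Three theoretical hypotheses on how exogenous attention modulates multisensory integration

Note: The figure shows the outcomes of the three hypotheses (spatial uncertainty, perceptual sensitivity, and differences in signal strength between sensory modalities) under different SOA conditions (short vs. long SOA). Bold red text denotes results derived from existing data; black text denotes hypothesized results. Results for Hypothesis 3 are taken from Van der Stoep et al. (2015, Experiment 1; 2016).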

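First, results under both SOA conditions currently support the spatial uncertainty hypothesis. Spatial uncertainty about target location is present at both short and long SOAs, so the MSI effect is reduced at validly cued locations in both cases. Future studies could test this hypothesis further by manipulating the proportion of valid cues (see Figure 1, Hypothesis 1).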

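Second, only results at short SOAs currently support the perceptual sensitivity hypothesis (see Figure 1, Hypothesis 2, short SOA). At long SOAs, the amplitude of the visual P1 component, which is closely tied to early perceptual processing of the target, is smaller at validly cued locations (Slagter, Prinssen, Reteig, & Mazaheri, 2016). In other words, perceptual sensitivity at the validly cued location declines at long SOAs and targets presented there are effectively weaker. By the principle of inverse effectiveness, we therefore hypothesize that the MSI effect would be larger (see Figure 1, Hypothesis 2, long SOA). Future studies could test this hypothesis by using event-related potentials (ERPs) to track how ERP components change over time.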

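In summary, the three hypotheses offer different accounts of how exogenous attention modulates MSI under different SOA conditions. Because studies that manipulate SOA are lacking, however, it cannot be ruled out that the biphasic nature of exogenous attention itself (facilitation at short SOAs vs. inhibition at long SOAs; Martín-Arévalo, Chica, & Lupiáñez, 2015) makes the modulation mechanism differ across SOAs. Future research needs to examine this possibility.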

2.2 Similarities and differences between exogenous and endogenous attention in modulating multisensory integration

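Previous studies show that endogenous attention modulates MSI in two ways. First, it can modulate MSI on a spatial basis, whether with low-level stimuli (Gao et al., 2014; Li, Yang, Sun, & Wu, 2015; Senkowski, Saint-Amour, Gruber, & Foxe, 2008; Talsma & Woldorff, 2005) or high-level stimuli (Fairhall & Macaluso, 2009). Second, it can modulate MSI on a modality basis (Degerman, Rinne, Salmi, Salonen, & Alho, 2006; Talsma, Doty, & Woldorff, 2007). Some studies report the opposite pattern, for example that behavioral indices of MSI are weakened or even eliminated under modality-selective attention (Mozolic, Hugenschmidt, Peiffer, & Laurienti, 2008; Wu et al., 2012), but all of these results show that endogenous attention modulates the MSI effect.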

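Comparing studies of endogenous and exogenous attention shows that both modulate MSI and that multisensory facilitation is observed at the attended (validly cued) location in both cases. The two forms of attention nevertheless differ considerably. First, the spatial orienting effect of endogenous attention varies with task demands, whereas that of exogenous attention is relatively unaffected by the task (Chica, Bartolomeo, & Lupiáñez, 2013). Second, exogenous cues elicit attentional effects faster than endogenous cues, and those effects also dissipate faster (Busse, Katzner, & Treue, 2008). Third, exogenous attention produces an IOR effect at SOAs above 300 ms (Martín-Arévalo, Chica, & Lupiáñez, 2015), whereas endogenous attention produces IOR only when it leads the oculomotor system to make a voluntary saccade (Henderickx, Maetens, & Soetens, 2012). Their modulation of MSI therefore differs as follows: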

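First, the two differ in how they modulate MSI. Endogenous attention is goal-driven: individuals actively allocate mental activity or awareness according to their goals or intentions, so endogenous attention modulates MSI in a top-down manner. Exogenous attention is stimulus-driven and modulates MSI in a bottom-up manner (Van der Stoep, Van der Stigchel, & Nijboer, 2015; Van der Stoep, Van der Stigchel, Nijboer, & Spence, 2016). The two thus modulate the MSI effect in different ways.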

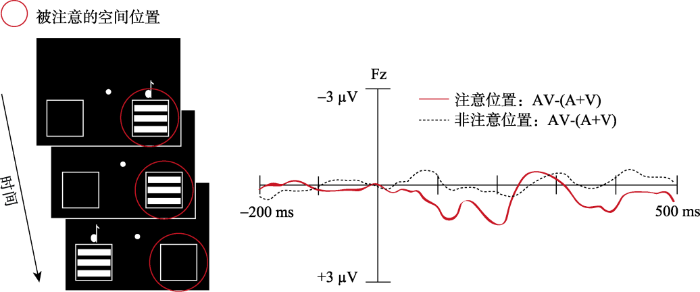

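Second, the two differ in the outcome of the modulation. In studies of endogenous attention, attention reduces interfering information within sensory modalities and thereby increases the reliability of the audiovisual input (Macaluso et al., 2016). Compared with unattended locations, multisensory stimuli at attended locations therefore yield faster and more accurate behavioral responses, stronger neural responses, and larger MSI effects. In the study by Talsma et al. (2005), shown in Figure 2, participants attended to stimuli at the right location and responded to the corresponding targets. At 100~140 ms after stimulus onset, over frontal and central electrodes, the difference between AV and (A+V) was larger at the attended than at the unattended location; that is, the MSI effect was larger at the endogenously attended location. (The difference between AV and (A+V) indexes multisensory integration by comparing the ERP elicited by the audiovisual stimulus with the sum of the ERPs elicited separately by the corresponding unimodal auditory and visual stimuli; De Meo, Murray, Clarke, & Matusz, 2015; Giard & Peronnet, 1999.) The same attention effect was found over centro-medial electrodes at 160~200 ms and 320~420 ms after stimulus onset. In studies of exogenous attention, by contrast, as in Experiment 1 of Van der Stoep et al. (2015), the MSI effect at the validly cued (attended) location was smaller than at the invalidly cued (unattended) location. The two thus differ in the outcome of their modulation of the MSI effect.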

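Figure 2. Experimental procedure and results of Talsma et al. (2005)

Note: Experimental procedure and results for the modulation of multisensory integration by endogenous attention. Participants attended to one side of the screen (left or right) and made detection responses to all targets (visual, auditory, and audiovisual) at that location (left panel). The MSI effect was larger at the endogenously attended location (right panel).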
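The comparison of AV with (A+V) described above can be sketched as a sample-by-sample difference wave averaged over a time window. The waveforms, sampling times, and function names below are hypothetical illustrations, not data or code from Talsma et al. (2005).

```python
def additive_model_difference(av, a, v):
    """Return AV - (A + V) for each time sample of three equally long waveforms."""
    if not (len(av) == len(a) == len(v)):
        raise ValueError("waveforms must have the same number of samples")
    return [x_av - (x_a + x_v) for x_av, x_a, x_v in zip(av, a, v)]


def mean_in_window(wave, times_ms, start_ms, end_ms):
    """Average the samples whose time stamp lies within [start_ms, end_ms]."""
    values = [amp for amp, t in zip(wave, times_ms) if start_ms <= t <= end_ms]
    return sum(values) / len(values)


# Hypothetical ERP amplitudes (microvolts) sampled every 20 ms.
times_ms = [80, 100, 120, 140, 160]
attended = additive_model_difference(
    av=[1.0, 2.5, 3.0, 2.8, 1.5],
    a=[0.5, 1.0, 1.2, 1.1, 0.8],
    v=[0.4, 0.9, 1.0, 1.0, 0.6],
)
unattended = additive_model_difference(
    av=[0.9, 2.0, 2.3, 2.2, 1.4],
    a=[0.5, 0.9, 1.1, 1.0, 0.8],
    v=[0.4, 1.0, 1.1, 1.1, 0.6],
)

attended_effect = mean_in_window(attended, times_ms, 100, 140)
unattended_effect = mean_in_window(unattended, times_ms, 100, 140)
print(attended_effect, unattended_effect)
```

In this made-up example the difference wave in the 100~140 ms window is larger at the attended location, which is the direction of the endogenous attention effect reported in the text.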

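In addition, based on previous findings we hypothesize that the two also differ in the time course of their modulation of MSI. Studies of how exogenous and endogenous attention modulate stimulus processing over time show that, at short SOAs, exogenous attention (the facilitation effect) elicits a larger P1 than endogenous attention (Hopfinger & West, 2006). At long SOAs, exogenous attention (the IOR effect) affects both the early P1 and the late P3 (Chica & Lupiáñez, 2009), whereas endogenous attention affects only the late P3 (Chica et al., 2013). The time course by which the two modulate MSI may therefore differ as well.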

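This section first reviewed the modulation of MSI by exogenous attention. It then discussed the three hypotheses (spatial uncertainty, perceptual sensitivity, and differences in signal strength between sensory modalities) and proposed directions for testing each. Finally, it compared how exogenous and endogenous attention modulate MSI. Overall, in the cue-target paradigm, existing results provisionally support the spatial uncertainty hypothesis. However, neural evidence is lacking. Moreover, in another paradigm (visual search), responses to bimodal stimuli were slower than to unimodal stimuli, with no multisensory facilitation, and the response-time difference between unimodal and bimodal stimuli was also smaller at validly cued than at invalidly cued locations (Matusz & Eimer, 2013). Further verification is therefore needed.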

3 Modulation of exogenous attention by multisensory integration

Researchers have found that multisensory integration also modulates exogenous attention, in both a bottom-up and a top-down manner.

3.1 Bottom-up modulation of exogenous attention by multisensory integration

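There is evidence that early multisensory integration can occur without attentional resources (Van der Burg, Olivers, Bronkhorst, & Theeuwes, 2008a). At an early stage, stimuli are integrated automatically according to the spatial rule (MSI is optimal when information from different modalities is presented at approximately the same location; Spence, 2013) or the temporal rule (MSI is optimal when information from different modalities is presented at approximately the same time; Stevenson, Fister, Barnett, Nidiffer, & Wallace, 2012). The integrated multisensory stimulus then modulates exogenous attention in a bottom-up manner.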

3.1.1 Modulation of exogenous attention by multisensory integration in the cue-target paradigm

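In cue-target paradigms that manipulate cue modality, a cue of a given modality (e.g., visual, auditory, or audiovisual) is presented first, followed by the target. Participants perform a spatial discrimination task, and the spatial cueing effects elicited by the different cue types are compared. Early studies found no significant difference between the spatial cueing effects of multisensory cues and unimodal (auditory, visual) cues and therefore did not conclude that multisensory stimuli facilitate spatial orienting (Mahoney, Verghese, Dumas, Wang, & Holtzer, 2012; Santangelo, Van der Lubbe, Belardinelli, & Postma, 2006, 2008). However, the ERP study by Santangelo et al. (2008) showed that exogenous audiovisual cues elicited stronger neural activity than visual or auditory cues alone. Specifically, the P1 over contralateral parieto-occipital sites elicited by bimodal (audiovisual) cues was significantly larger than the sum of the components elicited by unimodal visual and auditory cues, indicating that multisensory cues play an important role in spatial orienting. Later studies found that when attentional load is manipulated, only multisensory cues produce a spatial cueing effect (Barrett & Katrin, 2012; Matusz & Eimer, 2011; Santangelo & Spence, 2007). That is, under high attentional load, when participants must perform the main task together with a secondary task such as rapid serial visual presentation (RSVP) or temporal order judgment (TOJ), multisensory cues are more effective than unimodal cues. Similar results have been found in audiotactile studies (Ho, Santangelo, & Spence, 2009).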

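According to perceptual load theory, attentional resources are limited, and the extent to which the current task occupies them determines how much processing task-irrelevant stimuli receive (Lavie, 2005). Under high attentional load, few resources remain for processing task-irrelevant cues. Only integrated multisensory cues are salient enough to capture spatial attention in a stimulus-driven manner (Krause, Schneider, Engel, & Senkowski, 2012), thereby facilitating orienting.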

3.1.2 Modulation of exogenous attention by multisensory integration in the visual search paradigm

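Van der Burg et al. (2008a, b) used a visual search task in which participants searched for a horizontal or vertical target line segment among many oblique distractor segments that continuously changed color and orientation. The target's color change was either accompanied or not accompanied by an auditory stimulus. The sound carried no information about the target and did not predict the visual target. Visual targets accompanied by a synchronous sound were detected faster than those without one; this auditorily driven search benefit is called the "pip and pop" effect (Van der Burg, Olivers, Bronkhorst, & Theeuwes, 2008a; Van der Burg, Olivers, Bronkhorst, & Theeuwes, 2008b). In a follow-up study, Van der Burg et al. (2011) confirmed that a sound speeds search for a simultaneously presented visual target and found that the early P50 component, which reflects audiovisual integration, correlated strongly with detection accuracy. Importantly, an N2pc component, which is associated with bottom-up attentional allocation (Luck & Hillyard, 1994), appeared 210~250 ms after stimulus onset, indicating that multisensory integration does capture attention and improve visual search efficiency. This exogenous attention captured by multisensory stimuli has been shown to be modulated by the spatial distribution of participants' attention (Van der Burg, Olivers, & Theeuwes, 2012). One explanation is that the sound is integrated with the simultaneously presented visual target, which strengthens orienting to the target location and suppresses orienting to distractor locations (Pluta, Rowland, Stanford, & Stein, 2011); this ultimately facilitates the competition among multiple stimuli and makes the visual target stand out from a complex environment (Van der Burg, Olivers, Bronkhorst, & Theeuwes, 2008a; Van der Burg, Talsma, Olivers, Hickey, & Theeuwes, 2011). An alternative explanation is that the behavioral benefit arises not from multisensory integration but because the cue presented together with the target causes the target to be perceived as an "oddball" among the distractors, which captures attention and facilitates identification of the visual target (Ngo & Spence, 2012). In short, the basic mechanism by which MSI modulates exogenous attention in visual search requires further study. Note that although selective attention in visual search is influenced by both goal-driven (top-down) and stimulus-driven (bottom-up) factors (Atchley, Jones, & Hoffman, 2003), the point of interest here is that the attention attracted by the automatic integration of a simultaneously presented sound and visual target is stimulus-driven.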

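The "pip and pop" effect can also be elicited through other modalities, such as touch (Van der Burg, Olivers, Bronkhorst, & Theeuwes, 2009) and olfaction (Chen, Zhou, Chen, He, & Zhou, 2013), which likewise raise the salience of a simultaneously presented visual target so that it stands out from a complex visual environment. In short, when a visual target is presented in synchrony with a signal in another modality, the two are perceptually integrated and capture attention, which helps individuals identify and detect the target faster and speeds visual search (Chamberland, Hodgetts, Vallières, Vachon, & Tremblay, 2016).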

3.2 Top-down modulation of exogenous attention by multisensory integration

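The brain can set up a top-down signal template (attentional control setting) based on features relevant to the current task. Only stimuli that match the current template can automatically capture spatial attention (Folk & Remington, 1998), and whether a cue matches the template depends on whether it shares features with the target. These cue properties include not only lower-level features (color, size, luminance, salience, motion, etc.; Ansorge & Becker, 2013; Goller & Ansorge, 2015) but also higher-level semantic concepts (Folk, Berenato, & Wyble, 2014; Goodhew, Kendall, Ferber, & Pratt, 2014; Wang, Sui, & Zhang, 2016; Wang, Zhang, & Sui, 2014). This modulated allocation of attention is called contingent attentional capture, a term that emphasizes how the task-based signal template modulates attentional allocation (Lamy & Árni, 2013).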

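Earlier studies mainly examined contingent attentional capture in unimodal tasks. In daily life, however, we rarely process information from a single modality; we usually process information from several modalities at once to act more efficiently. Researchers have therefore begun to examine contingent attentional capture in crossmodal tasks. Matusz and Eimer (2013) used a visual search paradigm to test whether individuals can form a multisensory signal template that combines target-related features from different modalities (vision and audition). Participants searched arrays for targets defined by a visual feature (e.g., a red rectangle) or by an audiovisual conjunction (e.g., a red rectangle accompanied by a high-pitched tone). Before the search array, a visual cue was presented that matched the visual target feature but provided no spatial information. Compared with the purely visual search task, the spatial cueing effect of the visual cue was reduced in the audiovisual search task and the N2pc amplitude was smaller; that is, the unimodal visual cue captured attention less effectively in the audiovisual search task. The authors concluded that during audiovisual search, the audiovisual target is integrated into a multisensory stimulus that serves as a bimodal signal template controlling attention in a top-down manner.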

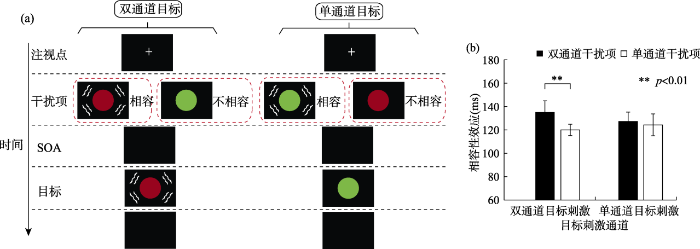

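Mast et al. (2015) used a nonspatial response priming paradigm to test whether the top-down multisensory signal template can include features from other modalities such as touch (see Figure 3). The prime (analogous to the cue in the cue-target paradigm) and the target were presented successively at the same location to avoid the supramodal spatial features of cueing paradigms, that is, so that the prime gave no information about target location. Both targets and primes were either bimodal or unimodal. Response compatibility was defined by whether the color of the visual prime matched that of the visual target: trials were compatible when the response-relevant colors matched and incompatible when they did not (Figure 3a). Only for bimodal (visuotactile) targets did bimodal (visuotactile) primes produce a larger compatibility effect (response time in the incompatible condition minus response time in the compatible condition) than unimodal (visual) primes (Figure 3b). That is, with bimodal targets, the simultaneous presentation of the visual target and the tactile stimulus caused the tactile feature to be incorporated into the participant's top-down multisensory signal template, and visuotactile primes, which matched this template, produced stronger attentional capture. Using the same paradigm, Mast et al. (2017) tested crossmodal contingent attentional capture again by varying whether the visual or the auditory modality served as the response-relevant dimension. Compatibility effects were larger when the prime matched the target in both the primary and the secondary modality; that is, the degree of feature overlap between the prime and the top-down multisensory signal template determined the strength of attentional capture.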

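Figure 3. Experimental procedure and results of Mast et al. (2015, Experiment 1b)

Note: (a) Task and procedure. The panels show the procedure for bimodal targets (left) and unimodal targets (right). Bimodal targets combined a visual stimulus (red circle) with a tactile vibration (shown as a wavy line). Unimodal targets were visual only (green circle) and were never accompanied by a tactile vibration. Primes were likewise bimodal or unimodal. Participants were asked to ignore the prime's color and to respond to the target's color as quickly and accurately as possible. (b) Behavioral results. The vertical axis shows the compatibility effect; only with bimodal targets did bimodal primes produce a larger compatibility effect than unimodal primes. (Adapted from Mast et al. (2015), copyright (2015) The Psychonomic Society.)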
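The compatibility effect used as the dependent measure above is a difference of mean response times, which can be sketched as follows. The function name and all response times are hypothetical and are not taken from Mast et al. (2015).

```python
from statistics import mean


def compatibility_effect(incompatible_rts, compatible_rts):
    """Mean RT on incompatible trials minus mean RT on compatible trials (ms)."""
    return mean(incompatible_rts) - mean(compatible_rts)


# Hypothetical RTs (ms) for bimodal targets, split by prime type.
bimodal_prime_effect = compatibility_effect([520, 530, 510], [480, 485, 475])
unimodal_prime_effect = compatibility_effect([505, 500, 510], [490, 495, 485])

# A positive value means the bimodal prime produced the larger compatibility effect.
prime_type_difference = bimodal_prime_effect - unimodal_prime_effect
print(bimodal_prime_effect, unimodal_prime_effect, prime_type_difference)
```

A larger effect for bimodal than for unimodal primes, as in these made-up numbers, is the pattern the text interprets as stronger capture by primes that match the multisensory template.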

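These studies show that crossmodal contingent attentional capture can involve features from the visual and tactile modalities (Mast, Frings, & Spence, 2015; Matusz & Eimer, 2013) as well as from the visual and auditory modalities (Mast, Frings, & Spence, 2017). Moreover, it applies both to visual search tasks that involve spatial information (Matusz & Eimer, 2013) and to tasks that do not (Mast, Frings, & Spence, 2015; Mast, Frings, & Spence, 2017). Some researchers argue that crossmodal contingent attentional capture is not purely top-down (Matusz & Eimer, 2011), possibly because audiovisual primes trigger early multisensory processing (within 100 ms of stimulus onset) and strong bottom-up influences in low-level cortex (Murray et al., 2016). Nevertheless, existing results at least show that the brain can integrate stimuli from different modalities (vision, touch, audition) into a stored multisensory signal template that guides exogenous attention in a top-down manner to optimize target selection.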

4 Summary and outlook

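Exogenous attention and multisensory integration both help control information processing, and both are complex, multistage processes; their interaction is correspondingly complex. To explain the mechanisms of this interaction, this article reviewed the relationship between exogenous attention and MSI from two directions. (1) Exogenous attention can modulate MSI in a bottom-up manner, as described by three hypotheses: spatial uncertainty, perceptual sensitivity, and differences in signal strength between sensory modalities. Both exogenous and endogenous attention modulate MSI, but they differ in the manner and the outcome of the modulation. (2) MSI can also modulate exogenous attention. On the one hand, MSI modulates exogenous attention in a bottom-up manner. In the cue-target paradigm, stimuli are integrated automatically in a bottom-up manner, and the integrated multisensory cue captures attention more strongly than a unimodal cue and elicits a larger spatial orienting effect. In visual search tasks, integrated multisensory stimuli likewise capture attention more strongly, making the target stand out from a complex environment and improving search efficiency. On the other hand, integrated multisensory stimuli can be stored in the brain as a multisensory signal template that modulates attentional capture in a top-down manner during a task.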

We propose the following directions for future research:

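(1) The cognitive neuroscience mechanisms by which exogenous attention modulates MSI remain to be clarified. Research on how endogenous attention modulates MSI has been productive. Work on exogenous attention, by contrast, has addressed only the behavioral level. The specific mechanisms remain unclear, and how and when exogenous attention begins to affect MSI are unresolved questions that require further investigation.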

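(2) The time course of the interaction between exogenous attention and MSI. The parallel integration framework holds that MSI can occur at both "early" and "late" stages of sensory processing, depending on the timing, location, and content of the stimuli (Calvert & Thesen, 2004). Although endogenous attention has been shown to modulate multiple stages of MSI (Talsma, Senkowski, Soto-Faraco, & Woldorff, 2010), the specific processing stages at which exogenous attention and MSI interact have not yet been examined.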

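(3) How other factors affect the interaction between exogenous attention and MSI. Task demands and goals (Donohue, Green, & Woldorff, 2015), attentional load (Barrett & Katrin, 2012), and individual expectations (Kok, Jehee, & de Lange, 2012) have all been shown to affect the modulation of MSI by endogenous attention. Likewise, studies of exogenous attention have found that task type affects the size of the modulation (Van der Stoep, Spence, Nijboer, & Van der Stigchel, 2015). Beyond uncovering the basic principles of the interaction between exogenous attention and MSI, future research should therefore also examine whether the interaction is affected by other factors.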

References

通道估计可靠性先验知识在早期的知觉加工阶段影响多感觉信息整合

DOI:10.3724/SP.J.1041.2010.00227

线索靶子关联和搜索策略对注意捕获的作用——来自意义线索的证据

DOI:10.3724/SP.J.1041.2016.00783

线索靶子关联和搜索策略对注意捕获的作用

DOI:10.3724/SP.J.1041.2014.00185

Contingent capture in cueing: The role of color search templates and cue-target color relations

DOI:10.1007/s00426-013-0497-5

PMID:23807453

Visual marking: A convergence of goal- and stimulus-driven processes during visual search

DOI:10.3758/BF03194805

PMID:12956576

Evidence for multisensory integration in the elicitation of prior entry by bimodal cues

DOI:10.1007/s00221-012-3191-8

PMID:22975896

Temporal dynamics of neuronal modulation during exogenous and endogenous shifts of visual attention in macaque area MT

DOI:10.1073/pnas.0707369105

PMID:18922778

An fMRI study of the ventriloquism effect

DOI:10.1093/cercor/bhu306

PMID:4816779

Multisensory integration: Methodological approaches and emerging principles in the human brain

DOI:10.1016/j.jphysparis.2004.03.018

PMID:15477032

Visual attention: The past 25 years

Pip and pop: When auditory alarms facilitate visual change detection in dynamic settings

DOI:10.1177/1541931213601065

Olfaction spontaneously highlights visual saliency map

DOI:10.1098/rspb.2013.1729

PMID:23945694

Two cognitive and neural systems for endogenous and exogenous spatial attention

DOI:10.1016/j.bbr.2012.09.027

PMID:23000534

Effects of endogenous and exogenous attention on visual processing: An inhibition of return study

DOI:10.1016/j.brainres.2009.04.011

PMID:19374885

[Cited in this article: 1]

We investigate early (P1) and late (P3) modulations of event-related potentials produced by endogenous (expected vs. unexpected location trials) and exogenous (cued vs. uncued location trials) orienting of spatial attention. A 75% informative peripheral cue was presented 1000 ms before the target in order to study Inhibition of Return (IOR), a mechanism that produces slower responses to peripherally cued versus uncued locations. Endogenous attention produced its effects more strongly at later stages of processing, while IOR (an index of exogenous orienting) was found to modulate both early and late stages of processing. The amplitude of P1 was reduced for cued versus uncued location trials, even when endogenous attention was maintained at the cued location. This result indicates that the perceptual effects of IOR are not eliminated by endogenous attention, suggesting that the IOR mechanism produces a perceptual decrement on the processing of stimuli at the cued location that cannot be counteracted by endogenous attention.

Top-down control and early multisensory processes: Chicken vs. egg

DOI:10.3389/fnint.2015.00017

PMID:4347447

[Cited in this article: 1]

Selective attention to sound location or pitch studied with fMRI

DOI:10.1016/j.brainres.2006.01.025

PMID:16515772

[Cited in this article: 1]

We used 3-T functional magnetic resonance imaging to compare the brain mechanisms underlying selective attention to sound location and pitch. In different tasks, the subjects (N = 10) attended to a designated sound location or pitch or to pictures presented on the screen. In the Attend Location conditions, the sound location varied randomly (left or right), while the pitch was kept constant (high or low). In the Attend Pitch conditions, sounds of randomly varying pitch (high or low) were presented at a constant location (left or right). Both attention to location and attention to pitch produced enhanced activity (in comparison with activation caused by the same sounds when attention was focused on the pictures) in widespread areas of the superior temporal cortex. Attention to either sound feature also activated prefrontal and inferior parietal cortical regions. These activations were stronger during attention to location than during attention to pitch. Attention to location but not to pitch produced a significant increase of activation in the premotor/supplementary motor cortices of both hemispheres and in the right prefrontal cortex, while no area showed activity specifically related to attention to pitch. The present results suggest some differences in the attentional selection of sounds on the basis of their location and pitch consistent with the suggested auditory “what” and “where” processing streams.

The effects of attention on the temporal integration of multisensory stimuli

DOI:10.3389/fnint.2015.00032

PMID:4407588

[Cited in this article: 1]

In unisensory contexts, spatially-focused attention tends to enhance perceptual processing. How attention influences the processing of multisensory stimuli, however, has been of much debate. In some cases, attention has been shown to be important for processes related to the integration of audio-visual stimuli, but in other cases such processes have been reported to occur independently of attention. To address these conflicting results, we performed three experiments to examine how attention interacts with a key facet of multisensory processing: the temporal window of integration (TWI). The first two experiments used a novel cued-spatial-attention version of the bounce/stream illusion, where two moving visual stimuli with intersecting paths tend to be perceived as bouncing off rather than streaming through each other when a brief sound occurs near in time. When the task was to report whether the visual stimuli appeared to bounce or stream, attention served to narrow this measure of the TWI and bias perception toward "streaming". When the participants' task was to explicitly judge the simultaneity of the sound with the intersection of the moving visual stimuli, however, the results were quite different. Specifically, attention served to mainly widen the TWI, increasing the likelihood of simultaneity perception, while also substantially increasing the simultaneity judgement accuracy when the stimuli were actually physically simultaneous. Finally, in Experiment 3, where the task was to judge the simultaneity of a simple, temporally discrete, flashed, visual stimulus and the same brief tone pip, attention had no effect on the measured TWI. These results highlight the flexibility of attention in enhancing multisensory perception and show that the effect of attention on multisensory processing is highly dependent on the task demands and observer goals.

Spatial attention can modulate audiovisual integration at multiple cortical and subcortical sites

DOI:10.1111/j.1460-9568.2009.06688.x

PMID:19302160

[Cited in this article: 1]

The role of attention in multisensory integration (MI) is presently uncertain, with some studies supporting an automatic, pre-attentive process and others suggesting possible modulation through selective attention. The goal of this functional magnetic resonance imaging study was to investigate the role of spatial attention on the processing of congruent audiovisual speech stimuli (here indexing MI). Subjects were presented with two simultaneous visual streams (speaking lips in the left and right visual hemifields) plus a single central audio stream (spoken words). In the selective attention conditions, the auditory stream was congruent with one of the two visual streams. Subjects attended to either the congruent or the incongruent visual stream, allowing the comparison of brain activity for attended vs. unattended MI while the amount of multisensory information in the environment and the overall attentional requirements were held constant. Meridian mapping and a lateralized 'speaking-lips' localizer were used to identify early visual areas and to localize regions responding to contralateral visual stimulations. Results showed that attention to the congruent audiovisual stimulus resulted in increased activation in the superior temporal sulcus, striate and extrastriate retinotopic visual cortex, and superior colliculus. These findings demonstrate that audiovisual integration and spatial attention jointly interact to influence activity in an extensive network of brain areas, including associative regions, early sensory-specific visual cortex and subcortical structures that together contribute to the perception of a fused audiovisual percept.

Neural correlates of reliability-based cue weighting during multisensory integration

DOI:10.1038/nn.2983

PMID:22101645

[Cited in this article: 1]

Integration of multiple sensory cues is essential for precise and accurate perception and behavioral performance, yet the reliability of sensory signals can vary across modalities and viewing conditions. Human observers typically employ the optimal strategy of weighting each cue in proportion to its reliability, but the neural basis of this computation remains poorly understood. We trained monkeys to perform a heading discrimination task from visual and vestibular cues, varying cue reliability randomly. The monkeys appropriately placed greater weight on the more reliable cue, and population decoding of neural responses in the dorsal medial superior temporal area closely predicted behavioral cue weighting, including modest deviations from optimality. We found that the mathematical combination of visual and vestibular inputs by single neurons is generally consistent with recent theories of optimal probabilistic computation in neural circuits. These results provide direct evidence for a neural mechanism mediating a simple and widespread form of statistical inference.

Semantic priming produces contingent attentional capture by conceptual content

Selectivity in distraction by irrelevant featural singletons: Evidence for two forms of attentional capture

DOI:10.1037/0096-1523.24.3.847

PMID:9627420

[Cited in this article: 1]

Evaluates evidence of the effectiveness of top-down control over attentional capture, focusing on the effects of irrelevant featural singletons in visual search. Covers how irrelevant singletons produce distraction effects dissociable from shifts of spatial attention, the mean response times and error rates for the nonsingleton and singleton target conditions, and what the results suggested.

Effects of ipsilateral and bilateral auditory stimuli on audiovisual integration: A behavioral and event-related potential study

DOI:10.1097/WNR.0000000000000155

PMID:24780895

[Cited in this article: 1]

We used event-related potential measures to compare the effects of ipsilateral and bilateral auditory stimuli on audiovisual (AV) integration. Behavioral results showed that the responses to visual stimuli with either type of auditory stimulus were faster than responses to visual stimuli only and that perceptual sensitivity (d') for visual detection was enhanced for visual stimuli with ipsilateral auditory stimuli. Furthermore, event-related potential components related to AV integrations were identified over the occipital areas at 180-200 ms during early-stage sensory processing by the effect of ipsilateral auditory stimuli and over the frontocentral areas at 300-320 ms during late-stage cognitive processing by the effect of ipsilateral and bilateral auditory stimuli. Our results confirmed that AV integration was also elicited, despite the effect of bilateral auditory stimuli, and only occurred at later stages of cognitive processing in response to a visual detection task. Furthermore, integration from early-stage sensory processing was observed by the effect of ipsilateral auditory stimuli, suggesting that the integration of AV information in the human brain might be particularly sensitive to ipsilaterally presented AV stimuli.

Auditory-visual integration during multimodal object recognition in humans: A behavioral and electrophysiological study

There is more to trial history than priming in attentional capture experiments

DOI:10.3758/s13414-015-0896-3

PMID:25832193

[Cited in this article: 1]

We used contingent attentional capture to investigate whether capture in a given trial n was affected by the cue–target position relations in a preceding trial n–1. Typically, attentional capture by a cue facilitates reaction times for targets in valid conditions (with the cue and target at the same position) relative to invalid conditions (with the cue and target at different positions). Also, this validity effect holds for cues with a feature similar to the searched-for target features (i.e., matching cues), but not for cues dissimilar to the searched-for target features (i.e., nonmatching cues), a pattern termed contingent capture because capture is assumed to be contingent on the match between the cue and top-down control settings. Here, we replicated this contingent-capture pattern with cues that were nonpredictive of the target position. In addition, we showed that during search for white onset targets, red nonmatching color cues also created a validity effect if the same nonmatching cue had been used as a valid cue in trial n–1 (Exps. 1 and 2). This intertrial contingency of the nonmatching cue’s validity effect was also found if the cues and targets both changed their positions from trial to trial, rendering position priming unlikely (Exp. 2). A similar intertrial contingency was found for nonmatching white onset cues, but not for matching red color cues during search for red color targets (Exp. 3). These results are discussed in light of explanations of the contingent-capture effect and of intertrial contingencies.

Setting semantics: Conceptual set can determine the physical properties that capture attention

DOI:10.3758/s13414-014-0686-3

PMID:24824982

[Cited in this article: 1]

The ability of a stimulus to capture visuospatial attention depends on the interplay between its bottom-up saliency and its relationship to an observer’s top-down control set, such that stimuli capture attention if they match the predefined properties that distinguish a searched-for target from distractors (Folk, Remington, & Johnston, Journal of Experimental Psychology: Human Perception & Performance, 18, 1030–1044, 1992). Despite decades of research on this phenomenon, however, the vast majority has focused exclusively on matches based on low-level physical properties. Yet if contingent capture is indeed a “top-down” influence on attention, then semantic content should be accessible and able to determine which physical features capture attention. Here we tested this prediction by examining whether a semantically defined target could create a control set for particular features. To do this, we had participants search to identify a target that was differentiated from distractors by its meaning (e.g., the word “red” among color words all written in black). Before the target array, a cue was presented, and it was varied whether the cue appeared in the physical color implied by the target word. Across three experiments, we found that cues that embodied the meaning of the word produced greater cuing than cues that did not. This suggests that top-down control sets activate content that is semantically associated with the target-defining property, and this content in turn has the ability to exogenously orient attention.

The involvement of bottom-up saliency processing in endogenous inhibition of return

DOI:10.3758/s13414-011-0234-3

PMID:22038667

[Cited in this article: 1]

Participants are faster at detecting a visual target when it appears at a cued, as compared with an uncued, location. In general, a reversal of this cost–benefit pattern is observed after exogenous cuing when the cue–target interval exceeds approximately 250 ms (inhibition of return [IOR]), and not after endogenous cuing. We suggest that, usually, no IOR is found with endogenous cues because no bottom-up saliency-based orienting processes are claimed. Therefore, we developed an endogenous feature-based split-cue task to allow for endogenous saliency-based orienting. IOR was observed in the saliency-driven endogenous cuing condition, and not in the control condition that prevented saliency-based orienting. These results suggest that usage of saliency-based orienting processes in either endogenous or exogenous orienting warrants the appearance of IOR.

Multisensory warning signals: When spatial correspondence matters

DOI:10.1007/s00221-009-1778-5

PMID:19381621

[Cited in this article: 1]

We report a study designed to investigate the effectiveness of task-irrelevant unimodal and bimodal audiotactile stimuli in capturing a person's spatial attention away from a highly perceptually demanding central rapid serial visual presentation (RSVP) task. In "Experiment 1", participants made speeded elevation discrimination responses to peripheral visual targets following the presentation of auditory stimuli that were either presented alone or else were paired with centrally presented tactile stimuli. The results showed that the unimodal auditory stimuli only captured spatial attention when participants were not performing the RSVP task, while the bimodal audiotactile stimuli did not result in any performance change in any of the conditions. In "Experiment 2", spatial auditory stimuli were either presented alone or else were paired with a tactile stimulus presented from the same direction. In contrast to the results of "Experiment 1", the bimodal audiotactile stimuli were especially effective in capturing participants' spatial attention from the concurrent RSVP task. These results therefore provide support for the claim that auditory and tactile stimuli should be presented from the same direction if they are to capture attention effectively. Implications for multisensory warning signal design are discussed.

Interactions between endogenous and exogenous attention on cortical visual processing

DOI:10.1016/j.neuroimage.2005.12.049

PMID:16490366

[Cited in this article: 1]

Sensory processing is affected by both endogenous and exogenous mechanisms of attention, although how these mechanisms interact in the brain has remained unclear. In the present study, we recorded event-related potentials (ERPs) to investigate how multiple stages of information processing in the brain are affected when endogenous and exogenous mechanisms are concurrently engaged. We found that the earliest stage of cortical visual processing, the striate-cortex-generated C1, was immune to attentional modulation, even when endogenous and exogenous attention converged on a common location. The earliest stage of processing to be affected in this experiment was the late phase of the extrastriate-cortex-generated P1 component, which was dominated by exogenous attention. Processing at this stage was enhanced by exogenous attention, regardless of where endogenous attention had been oriented. Endogenous attention, however, dominated a later, higher-order stage of processing indexed by an enhancement of the P300 that was unaffected by exogenous attention. Critically, between these early and late stages, an interaction was found wherein endogenous and exogenous attention produced distinct, and overlapping, effects on information processing. At the same time that exogenous attention was producing an extended enhancement of the late-P1, endogenous attention was enhancing the occipital-parietal N1 component. These results provide neurophysiological support for theories suggesting that endogenous and exogenous mechanisms represent two attention systems that can affect information processing in the brain in distinct ways. Furthermore, these data provide new evidence regarding the precise stages of neural processing that are, and are not, affected when endogenous and exogenous attentions interact.

Attention and the multiple stages of multisensory integration: A review of audiovisual studies

DOI:10.1016/j.actpsy.2010.03.010

PMID:20427031

[Cited in this article: 1]

Multisensory integration and crossmodal attention have a large impact on how we perceive the world. Therefore, it is important to know under what circumstances these processes take place and how they affect our performance. So far, no consensus has been reached on whether multisensory integration and crossmodal attention operate independently and whether they represent truly automatic processes. This review describes the constraints under which multisensory integration and crossmodal attention occur and in what brain areas these processes take place. Some studies suggest that multisensory integration and crossmodal attention take place in higher heteromodal brain areas, while others show the involvement of early sensory specific areas. Additionally, the current literature suggests that multisensory integration and attention interact depending on the processing level at which integration takes place. To shed light on this issue, different frameworks regarding the level at which multisensory interactions take place are discussed. Finally, this review focuses on the question whether audiovisual interactions and crossmodal attention in particular are automatic processes. Recent studies suggest that this is not always the case. Overall, this review provides evidence for a parallel processing framework suggesting that both multisensory integration and attentional processes take place and can interact at multiple stages in the brain.

Less is more: Expectation sharpens representations in the primary visual cortex

DOI:10.1016/j.neuron.2012.04.034

PMID:22841311

[Cited in this article: 1]

In perception, prior expectations allow us to quickly deduce plausible interpretations from noisy and ambiguous data. Here, Kok et al. show that expectation facilitates perception by reducing the overall neural response amplitude, yet increasing the informational content in primary visual cortex.

Capture of visual attention interferes with multisensory speech processing

DOI:10.3389/fnint.2012.00067

PMID:23325222

[Cited in this article: 1]

Attending to a conversation in a crowded scene requires selection of relevant information, while ignoring other distracting sensory input, such as speech signals from surrounding people. The neural mechanisms of how distracting stimuli influence the processing of attended speech are not well understood. In this high-density electroencephalography (EEG) study, we investigated how different types of speech and non-speech stimuli influence the processing of attended audiovisual speech. Participants were presented with three horizontally aligned speakers who produced syllables. The faces of the three speakers flickered at specific frequencies (19 Hz for flanking speakers and 25 Hz for the center speaker), which induced steady-state visual evoked potentials (SSVEP) in the EEG that served as a measure of visual attention. The participants' task was to detect an occasional audiovisual target syllable produced by the center speaker, while ignoring distracting signals originating from the two flanking speakers. In all experimental conditions the center speaker produced a bimodal audiovisual syllable. In three distraction conditions, which were contrasted with a no-distraction control condition, the flanking speakers either produced audiovisual speech, moved their lips, and produced acoustic noise, or moved their lips without producing an auditory signal. We observed behavioral interference in the reaction times (RTs) in particular when the flanking speakers produced naturalistic audiovisual speech. These effects were paralleled by enhanced 19 Hz SSVEP, indicative of a stimulus-driven capture of attention toward the interfering speakers. Our study provides evidence that non-relevant audiovisual speech signals serve as highly salient distractors, which capture attention in a stimulus-driven fashion.

Is goal-directed attentional guidance just intertrial priming? A review

DOI:10.1167/13.3.14

PMID:23818660

[Cited in this article: 1]

According to most models of selective visual attention, our goals at any given moment and saliency in the visual field determine attentional priority. But selection is not carried out in isolation--we typically track objects through space and time. This is not well captured within the distinction between goal-directed and saliency-based attentional guidance. Recent studies have shown that selection is strongly facilitated when the characteristics of the objects to be attended and of those to be ignored remain constant between consecutive selections. These studies have generated the proposal that goal-directed or top-down effects are best understood as intertrial priming effects. Here, we provide a detailed overview and critical appraisal of the arguments, experimental strategies, and findings that have been used to promote this idea, along with a review of studies providing potential counterarguments. We divide this review according to different types of attentional control settings that observers are thought to adopt during visual search: feature-based settings, dimension-based settings, and singleton detection mode. We conclude that priming accounts for considerable portions of effects attributed to top-down guidance, but that top-down guidance can be independent of intertrial priming.

Distracted and confused? Selective attention under load

DOI:10.1016/j.tics.2004.12.004

PMID:15668100

[Cited in this article: 1]

The ability to remain focused on goal-relevant stimuli in the presence of potentially interfering distractors is crucial for any coherent cognitive function. However, simply instructing people to ignore goal-irrelevant stimuli is not sufficient for preventing their processing. Recent research reveals that distractor processing depends critically on the level and type of load involved in the processing of goal-relevant information. Whereas high perceptual load can eliminate distractor processing, high load on ‘frontal’ cognitive control processes increases distractor processing. These findings provide a resolution to the long-standing early and late selection debate within a load theory of attention that accommodates behavioural and neuroimaging data within a framework that integrates attention research with executive function.

Audiovisual interaction enhances auditory detection in late stage: An event-related potential study

DOI:10.1097/WNR.0b013e3283345f08

PMID:20065887

Although many behavioral studies have investigated auditory detection enhancement by crossmodal audiovisual interaction, the results are controversial. In addition, no neuroimaging studies that identify this phenomenon have been conducted. Therefore, we used event-related potential (ERP) measures to investigate this phenomenon by comparing the ERPs elicited by the audiovisual stimuli to the sum of the ERPs elicited by the visual and auditory stimuli, and identified two brain regions that showed significantly different responses: the centro-medial area at 280-300 ms after the presentation of the stimulus and the right fronto-temporal area at 300-320 ms after the presentation of the stimulus. The ERP results suggested that the behavioral enhancement of auditory detection results from late-stage cognitive processes rather than early-stage sensory processes.

Spatiotemporal relationships among audiovisual stimuli modulate auditory facilitation of visual target discrimination

DOI:10.1068/p7846

PMID:26562250

[Cited in this article: 1]

Sensory information is multimodal; through audiovisual interaction, task-irrelevant auditory stimuli tend to speed response times and increase visual perception accuracy. However, mechanisms underlying these performance enhancements have remained unclear. We hypothesize that task-irrelevant auditory stimuli might provide reliable temporal and spatial cues for visual target discrimination and behavioral response enhancement. Using signal detection theory, the present study investigated the effects of spatiotemporal relationships on auditory facilitation of visual target discrimination. Three experiments were conducted where an auditory stimulus maintained reliable temporal and/or spatial relationships with visual target stimuli. Results showed that perception sensitivity (d') to visual target stimuli was enhanced only when a task-irrelevant auditory stimulus maintained reliable spatiotemporal relationships with a visual target stimulus. When only reliable spatial or temporal information was contained, perception sensitivity was not enhanced. These results suggest that reliable spatiotemporal relationships between visual and auditory signals are required for audiovisual integration during a visual discrimination task, most likely due to a spread of attention. These results also indicate that auditory facilitation of visual target discrimination follows from late-stage cognitive processes rather than early stage sensory processes.

The spatial reliability of task-irrelevant sounds modulates bimodal audiovisual integration: An event-related potential study

DOI:10.1016/j.neulet.2016.07.003

PMID:27392755

[Cited in this article: 1]

The integration of multiple sensory inputs is essential for perception of the external world. The spatial factor is a fundamental property of multisensory audiovisual integration. Previous studies of the spatial constraints on bimodal audiovisual integration have mainly focused on the spatial congruity of audiovisual information. However, the effect of spatial reliability within audiovisual information on bimodal audiovisual integration remains unclear. In this study, we used event-related potentials (ERPs) to examine the effect of spatial reliability of task-irrelevant sounds on audiovisual integration. Three relevant ERP components emerged: the first at 140–200 ms over a wide central area, the second at 280–320 ms over the fronto-central area, and a third at 380–440 ms over the parieto-occipital area. Our results demonstrate that ERP amplitudes elicited by audiovisual stimuli with reliable spatial relationships are larger than those elicited by stimuli with inconsistent spatial relationships. In addition, we hypothesized that spatial reliability within an audiovisual stimulus enhances feedback projections to the primary visual cortex from multisensory integration regions. Overall, our findings suggest that the spatial linking of visual and auditory information depends on spatial reliability within an audiovisual stimulus and occurs at a relatively late stage of processing.

Spatial filtering during visual search: Evidence from human electrophysiology

DOI:10.1037/0096-1523.20.5.1000

PMID:7964526

The identification of targets in visual search arrays may be improved by suppressing competing information from the surrounding distractor items. The present study provided evidence that this hypothetical filtering process has a neural correlate, the "N2pc" component of the event-related potential waveform. The N2pc was observed when a target item was surrounded by competing distractor items but was absent when the array could be rejected as a nontarget on the basis of simple feature information. In addition, the N2pc was eliminated when filtering was discouraged by removing the distractor items, making the distractors relevant, or making all items within an array identical. Combined with previous topographic analyses, these results suggest that attentional filtering occurs in occipital cortex under the control of feedback from higher cortical regions after a preliminary feature-based analysis of the stimulus array.

The curious incident of attention in multisensory integration: Bottom-up vs. top-down

The effect of multisensory cues on attention in aging

DOI:10.1016/j.brainres.2012.07.014

PMID:3592377

[Cited in this article: 1]

Multisensory alerting cues were not facilitative for old and young adults. Old and young adults demonstrated significant orienting effects for all multisensory cues. However, orienting benefits varied by age group and multisensory condition. Young adults benefited more from multisensory orienting cues than old adults.

No single electrophysiological marker for facilitation and inhibition of return: A review

DOI:10.1016/j.bbr.2015.11.030

PMID:26643119

[Cited in this article: 2]

Different electrophysiological components have been associated with behavioural facilitation and inhibition of return (IOR), although there is no consensus about which of these components are essential to the mechanism/s underlying the cueing effects. Different spatial attention hypotheses propound different roles for these components. In this review, we try and describe these inconsistencies by first presenting the electrophysiological component modulations of exogenous spatial attention as predicted by different attentional hypotheses. We then review and quantitatively analyse data from the existing electrophysiological studies trying to accommodate their findings. Variables such as the task at hand, the temporal properties and interactions between cues and targets, the presence/absence of intervening events, or stimuli arrangement in the visual field, might critically explain the discrepancies between the theoretical predictions and the electrophysiological modulations that both facilitation and IOR produce. We conclude that there is no single neural marker for facilitation and IOR because the behavioural effect that is observed depends on the contribution of several components: perceptual (P1), late-perceptual (N1, Nd), spatial selection (N2pc), and decision processes (P3). Many variables determine the electrophysiological modulations of different attentional orienting mechanisms, which jointly define the observed spatial cueing effects.

Multisensory top-down sets: Evidence for contingent crossmodal capture

DOI:10.3758/s13414-015-0915-4

[Cited in this article: 3]

Numerous studies that have investigated visual selective attention have demonstrated that a salient but task-irrelevant stimulus can involuntarily capture a participant’s attention. Over the years, a lively debate has erupted concerning the impact of contingent top-down control settings on such stimulus-driven attentional capture. In the research reported here, we investigated whether top-down sets would also affect participants’ performance in a multisensory task setting. A nonspatial compatibility task was used, in which the target and the distractor were always presented sequentially from the same spatial location. We manipulated target–distractor similarity by varying the visual and tactile features of the stimuli. Participants always responded to the visual target features (color); the tactile features were incorporated into the participants’ top-down set only when the experimental context allowed for the tactile feature to be used in order to discriminate the target from the distractor. Larger compatibility effects after bimodal distractors were observed only when the participants were searching for a bimodal target and when tactile information was useful. Taken together, these results provide the first demonstration of nonspatial contingent crossmodal capture.

Crossmodal attentional control sets between vision and audition

DOI:10.1016/j.actpsy.2017.05.011

PMID:28575705

[Cited in this article: 3]

The interplay between top-down and bottom-up factors in attentional selection has been a topic of extensive research and controversy amongst scientists over the past two decades. According to the influential contingent capture hypothesis, a visual stimulus needs to match the feature(s) implemented into the current attentional control sets in order to be automatically selected. Recently, however, evidence has been presented that attentional control sets affect not only visual but also crossmodal selection. The aim of the present study was therefore to establish contingent capture as a general principle of multisensory selection. A non-spatial interference task with bimodal (visual and auditory) distractors and bimodal targets was used. The target and the distractors were presented in close temporal succession. In order to perform the task correctly, the participants only had to process a predefined target feature in either of the two modalities (e.g., colour when vision was the primary modality). Note that the additional crossmodal stimulation (e.g., a specific sound when hearing was the secondary modality) was not relevant for the selection of the correct response. Nevertheless, larger interference effects were observed when the distractor matched both the stimulus of the primary as well as the secondary modality and this pattern was even stronger if vision was the primary modality than if audition was the primary modality. These results are therefore in line with the crossmodal contingent capture hypothesis. Both visual and auditory early processing seem to be affected by top-down control sets even beyond the spatial dimension.

Multisensory enhancement of attentional capture in visual search

DOI:10.3758/s13423-011-0131-8

PMID:21748418

[Cited in this article: 2]

Multisensory integration increases the salience of sensory events and, therefore, possibly also their ability to capture attention in visual search. This was investigated in two experiments where spatially uninformative color change cues preceded visual search arrays with color-defined targets. Tones were presented synchronously with these cues on half of all trials. Spatial-cuing effects indicative of cue-triggered capture of attention were larger on tone-present than on tone-absent trials, demonstrating multisensory enhancements of attentional capture. Larger capture effects for audiovisual events were found when cues were color singletons, and also when they appeared among heterogeneous color distractors. Tone-induced increases of attentional capture were independent of color-specific top-down task sets, suggesting that this multisensory effect is a stimulus-driven bottom-up phenomenon.

Top-down control of audiovisual search by bimodal search templates

DOI:10.1111/psyp.12086

PMID:23834379

[Cited in this article: 4]

To test whether the attentional selection of targets defined by a combination of visual and auditory features is guided in a modality-specific fashion or by control processes that are integrated across modalities, we measured attentional capture by visual stimuli during unimodal visual and audiovisual search. Search arrays were preceded by spatially uninformative visual singleton cues that matched the current target-defining visual feature. Participants searched for targets defined by a visual feature, or by a combination of visual and auditory features (e.g., red targets accompanied by high-pitch tones). Spatial cueing effects indicative of attentional capture were reduced during audiovisual search, and cue-triggered N2pc components were attenuated and delayed. This reduction of cue-induced attentional capture effects during audiovisual search provides new evidence for the multimodal control of selective attention.

Determinants of multisensory integration in superior colliculus neurons. I. Temporal factors

DOI:10.1097/00005072-199808000-00008

PMID:3668625

[Cited in this article: 1]

One of the most impressive features of the central nervous system is its ability to process information from a variety of stimuli to produce an integrated, comprehensive representation of the external world. In the present study, the temporal disparity among combinations of different sensory stimuli was shown to be a critical factor influencing the integration of multisensory stimuli by superior colliculus neurons. Several temporal principles that govern multisensory integration were revealed: (1) maximal levels of response enhancement were generated by overlapping the peak discharge periods evoked by each modality; (2) the magnitude of this enhancement decayed monotonically to zero as the peak discharge periods became progressively more temporally disparate; (3) with further increases in temporal disparity, the same stimulus combinations that previously produced enhancement could often produce depression; and (4) these kinds of interactions could frequently be predicted from the discharge trains initiated by each stimulus alone. Since multisensory superior colliculus neurons project to premotor areas of the brain stem and spinal cord that control the orientation of the receptor organs (eyes, pinnae, head), they are believed to influence attentive and orientation behaviors. Therefore, it is likely that the temporal relationships of different environmental stimuli that control the activity of these neurons are also a powerful determinant of superior colliculus-mediated attentive and orientation behaviors.

Statistical facilitation and the redundant signals effect: What are race and coactivation models?

DOI:10.3758/s13414-015-1017-z

PMID:26555650

[Cited in this article: 1]

As a supplement to Gondan and Minakata’s (2015) tutorial on methods for testing the race model inequality, this theoretical note attempts to clarify further (a) the types of models that obey and viola

Evidence for the redundant signals effect in detection of categorical targets

Modality-specific selective attention attenuates multisensory integration

DOI:10.1007/s00221-007-1080-3

PMID:17684735

[Cited in this article: 1]

Stimuli occurring in multiple sensory modalities that are temporally synchronous or spatially coincident can be integrated together to enhance perception. Additionally, the semantic content or meaning of a stimulus can influence cross-modal interactions, improving task performance when these stimuli convey semantically congruent or matching information, but impairing performance when they contain non-matching or distracting information. Attention is one mechanism that is known to alter processing of sensory stimuli by enhancing perception of task-relevant information and suppressing perception of task-irrelevant stimuli. It is not known, however, to what extent attention to a single sensory modality can minimize the impact of stimuli in the unattended sensory modality and reduce the integration of stimuli across multiple sensory modalities. Our hypothesis was that modality-specific selective attention would limit processing of stimuli in the unattended sensory modality, resulting in a reduction of performance enhancements produced by semantically matching multisensory stimuli, and a reduction in performance decrements produced by semantically non-matching multisensory stimuli. The results from two experiments utilizing a cued discrimination task demonstrate that selective attention to a single sensory modality prevents the integration of matching multisensory stimuli that is normally observed when attention is divided between sensory modalities. Attention did not reliably alter the amount of distraction caused by non-matching multisensory stimuli on this task; however, these findings highlight a critical role for modality-specific selective attention in modulating multisensory integration.

The multisensory function of the human primary visual cortex

DOI:10.1016/j.neuropsychologia.2015.08.011

PMID:26275965

[Cited in this article: 1]

The human primary visual cortex is multisensory in function. This evidence emerges from a full pallet of brain imaging/mapping methods. Haemodynamic methods show convergence and integration in primary visual cortex. Electromagnetic methods show effects during early post-stimulus stages. Multisensory effects in primary visual cortex directly impact behaviour/perception.

Facilitating masked visual target identification with auditory oddball stimuli

DOI:10.1007/s00221-012-3153-1

PMID:22760584

[Cited in this article: 1]

(…; Vroomen and de Gelder in J Exp Psychol Hum 26:1583–1590). In the present study, we tested three oddball conditions: a louder tone presented amongst quieter tones, a quieter tone presented amongst louder tones, and the absence of a tone, within an otherwise identical tone sequence. Across three experiments, all three oddball conditions resulted in the crossmodal facilitation of participants’ visual target identification performance. These results therefore suggest that salient oddball stimuli in the form of deviating tones, when synchronized with the target, may be sufficient to capture participants’ attention and facilitate visual target identification. The fact that the absence of a sound in an otherwise-regular sequence of tones also facilitated performance suggests that multisensory integration cannot provide an adequate account for the ‘freezing’ effect. Instead, an attentional capture account is proposed to account for the benefits of oddball cuing in Vroomen and de Gelder’s task.

Principles of multisensory behavior

DOI:10.1523/JNEUROSCI.4678-12.2013

PMID:23616552

[Cited in this article: 1]

The combined use of multisensory signals is often beneficial. Based on single cell recordings in the superior colliculus of cats, three basic rules were formulated to describe the effectiveness of multisensory integration: The enhancement of neuronal responses in multi- compared to uni-sensory conditions is largest when signals are presented at the same time (‘temporal rule’), occur at the same location (‘spatial rule’), and when signals are rather weak (‘principle of inverse effectiveness’). These rules are also considered to describe multisensory benefits as observed with behavioral measures, but do they capture these benefits best? To uncover the principles that rule multisensory behavior, we investigated the classical redundant signals effect, i.e., the speed-up of response times in multi- as compared to uni-sensory conditions. In a detection task, we presented both auditory and visual signals at three levels of signal strength and determined the speed-up for all nine combinations of signals. Based on a systematic analysis of empirical response time distributions as well as simulations using probability summation, we propose that two alternative rules apply. First, the ‘principle of equal effectiveness’ states that the benefit with multisensory signals (here the speed-up of reaction times) is largest when performance in the two uni-sensory conditions is similar. Second, the ‘variability rule’ states that the benefit is largest when performance in the uni-sensory conditions is variable. The generality of these rules is discussed with respect to experiments on accuracy and when maximum likelihood estimation instead of probability summation is considered as combination rule.

Alterations to multisensory and unisensory integration by stimulus competition

DOI:10.1152/jn.00509.2011

[Cited in this article: 1]

In environments containing sensory events at competing locations, selecting a target for orienting requires prioritization of stimulus values. Although the superior colliculus (SC) is causally linked to the stimulus selection process, the manner in which SC multisensory integration operates in a competitive stimulus environment is unknown. Here we examined how the activity of visual-auditory SC neurons is affected by placement of a competing target in the opposite hemifield, a stimulus configuration that would, in principle, promote interhemispheric competition for access to downstream motor circuitry. Competitive interactions between the targets were evident in how they altered unisensory and multisensory responses of individual neurons. Responses elicited by a cross-modal stimulus (multisensory responses) proved to be substantially more resistant to competitor-induced depression than were unisensory responses (evoked by the component modality-specific stimuli). Similarly, when a cross-modal stimulus served as the competitor, it exerted considerably more depression than did its individual component stimuli, in some cases producing more depression than predicted by their linear sum. These findings suggest that multisensory integration can help resolve competition among multiple targets by enhancing orientation to the location of cross-modal events while simultaneously suppressing orientation to events at alternate locations.

Components of visual orienting

The costs of monitoring simultaneously two sensory modalities decrease when dividing attention in space

DOI:10.1016/j.neuroimage.2009.10.061

PMID:19878728