1 Introduction

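Listening to music is an important part of modern life. Vocal music (songs) and instrumental music (music without lyrics) are the two types of music people encounter in everyday listening. Some people prefer instrumental music, whereas others prefer vocal music because they believe lyrics help them understand the meaning of music, such as its emotional meaning.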

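Do listeners, then, process emotion differently in vocal and instrumental music? Behavioral studies suggest that they do. In Stratton and Zalanowski (1994), college students listened to a song expressing sadness, presented as the full song, the music alone, or the lyrics alone, and rated the pleasantness of the stimulus on a 10-point scale (1 = very unpleasant, 10 = very pleasant). Students rated the music alone as significantly more pleasant than the song or the lyrics. Using a 5-point scale, Brattico et al. (2011) likewise found that participants rated instrumental music as more pleasant than songs. The findings are not consistent, however. Loui, Bachorik, Li, and Schlaug (2013) had participants listen to 16 songs and their instrumental versions and rate pleasantness on a 5-point scale; pleasantness ratings did not differ between the vocal and instrumental versions.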

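The studies above examined emotional processing of vocal and instrumental music in terms of valence; other studies have addressed the question in terms of emotional intensity. In Ali and Peynircioğlu (2006), college students listened to 16 songs and their instrumental counterparts. After each piece, participants rated the intensity of the emotion it expressed (1 = not at all, 9 = very much). Instrumental music was rated as more emotionally intense than songs. Franco, Chew, and Swaine (2017), however, reached a different conclusion. They had three groups of college students listen to instrumental music, songs with instrumental accompaniment, or songs without accompaniment, respectively, and rate emotional intensity (1 = not at all, 7 = very much). Ratings of emotional intensity were essentially the same across the three types of music.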

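The inconsistency among previous findings may arise because behavioral measures are relatively subjective and insensitive. Electrophysiological measures, which are more objective and more sensitive (Kraus & Horowitz-Kraus, 2014; Luck, 2014), may therefore better reveal whether emotional processing differs between vocal and instrumental music. The cross-modal affective priming paradigm has been widely used in ERP studies of emotional processing (e.g., Diamond & Zhang, 2016; Steinbeis & Koelsch, 2011), and such studies have focused mainly on the N400 and the late positive component (LPC). The N400 reflects the integration of semantic or conceptual information (Kutas & Federmeier, 2011); it peaks at around 400 ms, is typically distributed over central and parietal electrodes, and is slightly right-lateralized. Moreover, in cross-modal affective priming studies, targets that are emotionally incongruent with a preceding musical prime (Goerlich et al., 2012; Steinbeis & Koelsch, 2011), prosodic prime (Paulmann & Pell, 2010; Schirmer, Kotz, & Friederici, 2005), or facial prime (Diamond & Zhang, 2016; Föcker & Röder, 2019) elicit a larger N400. This suggests that the N400 also reflects the detection and integration of emotional conflict (Zhang, Lawson, Guo, & Jiang, 2006; Zhang, Li, Gold, & Jiang, 2010). The LPC is a positive wave appearing 300–1000 ms after the onset of an emotional stimulus; it peaks at 500–600 ms, is typically distributed over midline and parietal electrodes, and mainly reflects attention to and integration of emotional information (Hajcak, MacNamara, & Olvet, 2010). Specifically, compared with neutral stimuli, emotional stimuli such as pictures (Hajcak & Olvet, 2008) and speech prosody (Paulmann & Uskul, 2017) elicit a larger LPC across the scalp, because emotional stimuli carry strong motivational significance and therefore attract more attention (Schupp et al., 2000). Likewise, targets that are emotionally incongruent with a prosodic prime (Pell et al., 2015; Zheng & Huang, 2013) or a facial prime (Diamond & Zhang, 2016) elicit a larger LPC over bilateral parieto-occipital regions or across the whole scalp. This indicates that the LPC is related to attentional allocation and emotional integration (Herring, Taylor, White, & Crites Jr., 2011; Zhang, Kong, & Jiang, 2012; Zhang et al., 2010).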

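Recently, Zhang, Wang, Zhou, and Jiang (2018) used ERPs to examine how lyrics affect the processing of musical emotion. Hummed primes without lyrics elicited an N400 across the scalp, whereas primes with lyrics elicited an LPC across the scalp. To date, however, no study has examined differences between the emotional processing of vocal and instrumental music from an electrophysiological perspective. The present study addressed this question using the cross-modal affective priming paradigm. To rule out differences in musical form between vocal and instrumental pieces, we used the same music, sung by a human voice and played on the violin, yielding a vocal and an instrumental version of each piece. Because lyrics elicit an LPC whereas lyric-free humming elicits an N400 (Zhang et al., 2018), we predicted that emotional processing of vocal and instrumental music would elicit an LPC and an N400, respectively.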

2 Method

2.1 Participants

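Based on the effect size of emotional congruency in a previous study (Zhang et al., 2018) [F(1, 15) = 5.17, ηp² = 0.26, d = 1.19], G*Power 3.1.9.4 (Faul, Erdfelder, Lang, & Buchner, 2007) indicated that a nonparametric matched-pairs test would require at least 17 participants to reach 90% power at α = 0.01. We therefore recruited 20 college students without formal music training. Three participants were excluded from analysis because of excessive EEG artifacts, leaving 17 valid participants (9 male; age: M = 24.65 years, SD = 1.31). All participants reported being right-handed, had normal hearing and normal or corrected-to-normal vision, and had no history of brain injury or psychiatric illness. All participants gave written informed consent and received payment.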
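The sample-size reasoning above can be roughly approximated in a few lines. This is a sketch under stated assumptions, not G*Power's exact computation: it assumes the reported d = 1.19 comes from the standard conversion f = √(ηp²/(1 − ηp²)), d = 2f (which does reproduce 1.19 from ηp² = 0.26), uses a normal-approximation formula with a small-sample correction for a two-sided paired t-test, and then inflates N by an assumed asymptotic relative efficiency (ARE) of the Wilcoxon signed-rank test (3/π for a normal parent, 0.864 as the lower bound for continuous symmetric distributions). All function names are hypothetical.

```python
import math
from statistics import NormalDist


def eta_p2_to_d(eta_p2: float) -> float:
    """Convert partial eta squared to Cohen's d via f = sqrt(eta / (1 - eta)), d = 2f."""
    return 2.0 * math.sqrt(eta_p2 / (1.0 - eta_p2))


def paired_t_n(d: float, alpha: float = 0.01, power: float = 0.90) -> float:
    """Approximate N for a two-sided paired t-test (normal approximation
    plus a z^2 / 2 small-sample correction; not an exact noncentral-t search)."""
    z = NormalDist()
    z_a = z.inv_cdf(1.0 - alpha / 2.0)
    z_b = z.inv_cdf(power)
    return ((z_a + z_b) / d) ** 2 + z_a ** 2 / 2.0


def wilcoxon_n(d: float, alpha: float = 0.01, power: float = 0.90,
               are: float = 3.0 / math.pi) -> int:
    """Inflate the t-test N by an assumed asymptotic relative efficiency (ARE)
    of the Wilcoxon signed-rank test: 3/pi for normal data, >= 0.864 in general."""
    return math.ceil(paired_t_n(d, alpha, power) / are)


d = eta_p2_to_d(0.26)  # eta_p2 reported by Zhang et al. (2018)
print(f"d = {d:.2f}")
print("N, normal parent (ARE = 3/pi):", wilcoxon_n(d))
print("N, conservative bound (ARE = 0.864):", wilcoxon_n(d, are=0.864))
```

Because the result depends on the assumed parent distribution and on the approximation itself, it is best read as an order-of-magnitude check rather than a replication of the software output.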

2.2 Stimuli

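The stimuli comprised primes and targets. The primes were 100 excerpts of foreign operas selected from vocal-music textbooks, 50 expressing positive emotion and 50 expressing negative emotion (duration 10–25 s, mean 17 s). Each excerpt was sung in Chinese by a graduate student with 18 years of vocal training and played on the violin by a graduate student with 18 years of violin training, and both performances were recorded; this ruled out differences in musical form between the vocal and instrumental versions. The experiment thus included 100 vocal and 100 instrumental excerpts. The excerpts were recorded in mono at a sampling rate of 22,050 Hz. Finally, their levels were normalized to -7 dB in Adobe Audition CS6, and a 1-s fade-out was applied.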
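The level normalization and fade-out described above can be sketched with NumPy for readers preparing comparable stimuli. The paper does not say whether peak or RMS normalization was used or what fade curve was applied, so this sketch assumes peak normalization to -7 dBFS and a linear fade; the function names are hypothetical and this is not Adobe Audition's implementation.

```python
import numpy as np

SR = 22050  # mono sampling rate reported for the excerpts (Hz)


def normalize_peak(x: np.ndarray, target_db: float = -7.0) -> np.ndarray:
    """Scale a mono signal so its absolute peak sits at target_db dBFS (assumed peak mode)."""
    y = np.asarray(x, dtype=float)
    peak = np.max(np.abs(y))
    if peak == 0:
        return y.copy()
    return y * (10 ** (target_db / 20) / peak)


def fade_out(x: np.ndarray, sr: int = SR, seconds: float = 1.0) -> np.ndarray:
    """Apply a linear fade to silence over the final `seconds` of the signal."""
    y = np.asarray(x, dtype=float).copy()
    n = min(len(y), int(round(sr * seconds)))
    if n > 0:
        y[len(y) - n:] *= np.linspace(1.0, 0.0, n)
    return y


# Usage on a synthetic 17-s tone standing in for an excerpt
t = np.arange(17 * SR) / SR
tone = 0.9 * np.sin(2 * np.pi * 440.0 * t)
processed = fade_out(normalize_peak(tone))
```

Normalizing before fading keeps the peak at the target level, because the fade can only reduce amplitude.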

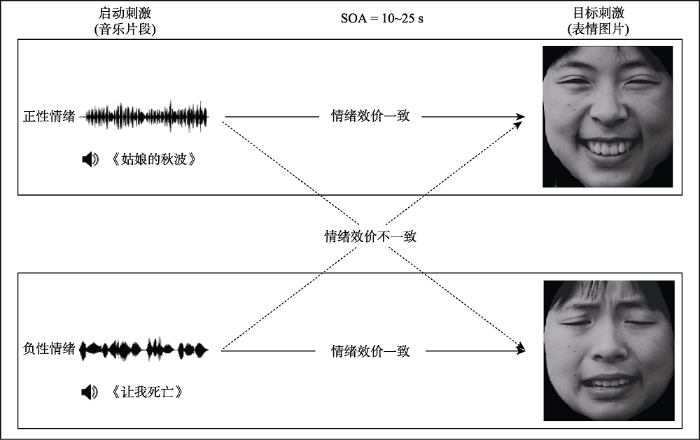

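The targets were two female face pictures selected from the Chinese Facial Affective Picture System (Gong, Huang, Wang, & Luo, 2011), one expressing happiness and the other sadness. The mean emotion-recognition agreement was 97.98% for both pictures, and their mean emotional intensities were 7.24 and 5.88, respectively. Each music excerpt was paired with both face pictures (see Figure 1), yielding 200 valence-congruent and 200 valence-incongruent pairs.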
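The pairing scheme can be checked with a short enumeration: 100 pieces in two versions give 200 excerpts, and crossing them with the two faces yields 400 pairs, half of them congruent. The excerpt IDs, labels, and dictionary keys below are hypothetical placeholders.

```python
from itertools import product

FACES = {"happy": "positive", "sad": "negative"}  # target face -> valence


def build_pairs(excerpts):
    """Pair every excerpt with both faces and label valence congruency.

    `excerpts` is a list of (excerpt_id, music_type, valence) tuples.
    """
    pairs = []
    for (eid, mtype, valence), (face, face_valence) in product(excerpts, FACES.items()):
        pairs.append({
            "excerpt": eid,
            "music_type": mtype,
            "face": face,
            "congruent": valence == face_valence,
        })
    return pairs


# 50 positive + 50 negative pieces, each in a vocal and an instrumental version
excerpts = [
    (f"{mtype}_{valence}_{i:02d}", mtype, valence)
    for mtype in ("vocal", "instrumental")
    for valence in ("positive", "negative")
    for i in range(50)
]
pairs = build_pairs(excerpts)
n_congruent = sum(p["congruent"] for p in pairs)
print(len(pairs), n_congruent, len(pairs) - n_congruent)
```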

Figure 1

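To ensure the validity of the stimuli, we conducted three pretests. First, 16 additional college students without music training rated the valence congruency of the 400 music–picture pairs (1 = very incongruent, 9 = very congruent). For each music excerpt, a paired t-test tested whether the ratings of its congruent and incongruent pairs differed significantly, and 240 pairs were then selected as the experimental stimuli. Whether the prime was vocal [t(59) = 24.73, p < 0.001, d = 3.19] or instrumental [t(59) = 21.56, p < 0.001, d = 2.78], listeners rated congruent pairs (vocal: M = 7.21, SD = 0.79; instrumental: M = 6.94, SD = 0.87) higher than incongruent pairs (vocal: M = 2.72, SD = 0.80; instrumental: M = 3.15, SD = 0.77). An ANOVA with valence congruency (congruent, incongruent) and music type (vocal, instrumental) as within-subject factors showed a significant main effect of congruency [F(1, 59) = 822.81, p < 0.001, ηp² = 0.93] and a significant congruency × music type interaction [F(1, 59) = 11.06, p = 0.002, ηp² = 0.16], but no main effect of music type, F(1, 59) = 1.14, p = 0.290. These results indicate that the materials were valid and that task difficulty was similar for the two music types. In addition, these students' mean rating of lyric intelligibility in the vocal excerpts (1 = unclear, 5 = clear) exceeded 4 (M = 4.45, SD = 0.23).

Second, 16 additional music students (each with more than 18 years of professional music training) rated the consistency between the vocal and instrumental versions (1 = very inconsistent, 7 = very consistent) in rubato (M = 5.24, SD = 0.42), dynamics (M = 5.38, SD = 0.40), phrasing (M = 5.65, SD = 0.36), and overall performance level (M = 5.21, SD = 0.40). All mean ratings exceeded 5, indicating high consistency.

Third, 16 additional college students rated the valence (1 = very unpleasant, 4 = neutral, 7 = very pleasant) and arousal (1 = very calm, 4 = neutral, 7 = very excited) of the music. For valence, an ANOVA with emotion type (positive, negative) and music type (vocal, instrumental) as within-subject factors showed significant main effects of emotion type [F(1, 29) = 2241.98, p < 0.001, ηp² = 0.99] and music type [F(1, 29) = 4.80, p = 0.037, ηp² = 0.14], as well as a significant interaction [F(1, 29) = 8.37, p = 0.007, ηp² = 0.22]. Simple-effects analyses showed that for positive music, valence did not differ between the instrumental (M = 5.43, SD = 0.30) and vocal (M = 5.46, SD = 0.26) versions, F(1, 29) = 0.18, p = 0.674; for negative music, the instrumental versions (M = 2.66, SD = 0.30) were rated higher in valence than the vocal versions (M = 2.41, SD = 0.35), F(1, 29) = 13.40, p = 0.001, ηp² = 0.32. For arousal, the main effects of emotion type [F(1, 29) = 111.87, p < 0.001, ηp² = 0.79] and music type [F(1, 29) = 419.34, p < 0.001, ηp² = 0.94] and their interaction [F(1, 29) = 18.97, p < 0.001, ηp² = 0.40] were significant. Simple-effects analyses showed that for both positive [F(1, 29) = 36.07, p < 0.001, ηp² = 0.55] and negative [F(1, 29) = 206.79, p < 0.001, ηp² = 0.88] music, the instrumental versions (positive: M = 4.87, SD = 0.59; negative: M = 3.32, SD = 0.41) were rated as less arousing than the vocal versions (positive: M = 5.54, SD = 0.47; negative: M = 4.80, SD = 0.49).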
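The effect sizes reported in the first pretest can be recomputed from the test statistics. The reported d values match Cohen's d_z = t/√n with n = 60 (an inference from df = 59), and each ηp² matches F·df₁/(F·df₁ + df₂). The helper names below are hypothetical; `paired_t` shows how t and d_z relate when raw ratings are available.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-samples t, Cohen's d_z = mean(diff) / sd(diff), and df."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    d_z = mean(diffs) / stdev(diffs)
    return d_z * math.sqrt(n), d_z, n - 1


def d_z_from_t(t, n):
    """Recover d_z from a reported paired t and its number of pairs."""
    return t / math.sqrt(n)


def partial_eta_sq(f, df_effect, df_error):
    """Partial eta squared from an F ratio: F*df1 / (F*df1 + df2)."""
    return f * df_effect / (f * df_effect + df_error)


# n = 60 is assumed from the reported df = 59
print(round(d_z_from_t(24.73, 60), 2))  # vocal primes
print(round(d_z_from_t(21.56, 60), 2))  # instrumental primes
print(round(partial_eta_sq(822.81, 1, 59), 2))  # congruency main effect
print(round(partial_eta_sq(11.06, 1, 59), 2))   # interaction
```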

2.3 Procedure

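Each trial began with a black fixation cross ("+") at the center of the screen for 1 s. After the fixation cross disappeared, the prime was presented and participants listened to the music through headphones. When the music ended, the target face was presented for 1 s. Finally, a response screen asked participants to judge whether the music and the face expressed the same emotional valence, after which they pressed the space bar to start the next trial. After the experiment, participants were asked to report the titles of the music, but none of them could report a single keyword from any of the titles.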
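The trial sequence can be written as an event schedule, which makes explicit that the ERP epochs are time-locked to face onset, that is, to the end of a variable-length prime. This sketch assumes the face appears immediately at music offset and the response screen immediately after the 1-s face (the text specifies no gaps); the class and field names are hypothetical.

```python
from dataclasses import dataclass

FIXATION_S = 1.0  # fixation cross duration (s)
TARGET_S = 1.0    # face duration (s)


@dataclass
class TrialEvents:
    fixation_onset: float
    prime_onset: float
    target_onset: float
    response_onset: float


def trial_timeline(music_duration_s: float) -> TrialEvents:
    """Event onsets (s, relative to trial start) for one trial.

    The response screen waits for a key press, so trial end is not fixed;
    only the onset of the response screen is returned.
    """
    prime_onset = FIXATION_S
    target_onset = prime_onset + music_duration_s  # assumed: no gap after music offset
    return TrialEvents(0.0, prime_onset, target_onset, target_onset + TARGET_S)


ev = trial_timeline(17.0)  # mean excerpt length reported in 2.2
print(ev)
```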

2.4 Data Recording and Analysis

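EEG was recorded with a NeuroScan Synamps 2 system and a 64-channel electrode cap (electrodes placed according to the international 10-20 system). The left mastoid served as the online reference, and the right mastoid was recorded as an active channel. Signals were amplified with an AC amplifier, band-pass filtered at 0.05–100 Hz, and sampled at 500 Hz. All electrode impedances were kept below 5 kΩ.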

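Data were preprocessed in Neuroscan 4.5. First, the raw data were re-referenced to the average of the two mastoids; artifacts such as EMG, ECG, and drifts were removed manually by visual inspection, and ocular artifacts were corrected automatically with the software's regression procedure. Second, the data were band-pass filtered at 0.1–30 Hz (24 dB/oct slope). Third, the EEG was segmented into epochs from -200 to 1000 ms relative to target onset and baseline-corrected using the -200 to 0 ms interval. Finally, trials with amplitudes exceeding ±80 μV and trials with incorrect responses were rejected automatically, and the remaining trials were averaged separately for each experimental condition.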
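The core offline steps can be sketched with NumPy for readers who want to reproduce them outside Neuroscan. This is not the Neuroscan implementation: the 0.1–30 Hz filter and the regression-based ocular correction are omitted, the data are assumed to be a channels × samples array in μV referenced online to the left mastoid, and all function names are hypothetical. The re-referencing step relies on the fact that a channel recorded against M1 becomes referenced to (M1 + M2)/2 after subtracting half of the recorded right-mastoid channel.

```python
import numpy as np

SRATE = 500             # Hz, as recorded
TMIN, TMAX = -0.2, 1.0  # epoch limits (s) relative to face onset


def rereference_linked_mastoids(data: np.ndarray, m2_idx: int) -> np.ndarray:
    """Channels recorded against the left mastoid (M1); subtracting half of the
    recorded right-mastoid channel (M2 - M1) re-references them to (M1 + M2) / 2."""
    return data - data[m2_idx] / 2.0


def epoch(data: np.ndarray, onsets, srate: int = SRATE) -> np.ndarray:
    """Cut (n_trials, n_channels, n_times) epochs around face-onset sample indices."""
    start, stop = int(round(TMIN * srate)), int(round(TMAX * srate))
    return np.stack([data[:, o + start:o + stop + 1] for o in onsets])


def baseline_correct(epochs: np.ndarray, srate: int = SRATE) -> np.ndarray:
    """Subtract each channel's mean over the -200 to 0 ms pre-stimulus interval."""
    n_base = int(round(-TMIN * srate))
    return epochs - epochs[:, :, :n_base].mean(axis=2, keepdims=True)


def reject_and_average(epochs: np.ndarray, correct, limit_uv: float = 80.0):
    """Drop trials exceeding +/- limit_uv or with wrong responses; average the rest."""
    keep = (np.abs(epochs).max(axis=(1, 2)) <= limit_uv) & np.asarray(correct, dtype=bool)
    return epochs[keep].mean(axis=0), int(keep.sum())


# Usage on synthetic data: 3 channels, channel 2 plays the right mastoid
rng = np.random.default_rng(0)
raw = rng.normal(0.0, 5.0, size=(3, 5000))
reref = rereference_linked_mastoids(raw, m2_idx=2)
epochs = baseline_correct(epoch(reref, onsets=[1000, 2000, 3000]))
erp, n_kept = reject_and_average(epochs, correct=[True, True, False])
```

At 500 Hz, the -200 to 1000 ms window spans 601 samples, the first 100 of which form the baseline.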

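After preprocessing, to control the Type I error rate arising from multiple comparisons across many electrodes and time points, we performed statistical analyses with the cluster-based permutation test (Maris & Oostenveld, 2007) implemented in the FieldTrip toolbox (Oostenveld, Fries, Maris, & Schoffelen, 2011). First, the congruent and incongruent conditions were compared at every electrode–time sample with a dependent-samples t-test. Samples whose difference was significant at the 0.05 level were selected, and selected samples adjacent in space and time were grouped into clusters. For each cluster, the sum of the t values of its samples served as the cluster-level statistic. Next, the congruent and incongruent labels were randomly exchanged within each participant 2000 times, and the largest cluster-level statistic was recorded for each randomization, yielding a null distribution under the hypothesis that the two conditions do not differ. Finally, each observed cluster-level statistic was compared with this null distribution, and p values were estimated with the Monte Carlo method (α = 0.05). To increase sensitivity, we chose 200–500 ms and 400–700 ms after face onset as the N400 and LPC time windows, respectively, based on previous N400 studies (Schirmer et al., 2005; Steinbeis & Koelsch, 2011), previous LPC studies (Herring et al., 2011; Zhang et al., 2010), and visual inspection.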
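The cluster-based permutation logic can be sketched in NumPy to make each step concrete. This is a simplified illustration, not FieldTrip's `ft_timelockstatistics` code: channel adjacency is supplied as a hypothetical `neighbors` mapping, positive and negative clusters are pooled through the maximum absolute cluster sum, the sample-level threshold is passed in directly (about 2.12 for a two-tailed test with df = 16), and the Monte Carlo p value uses the (count + 1)/(n_perm + 1) convention. The helper `window_slice` converts a post-stimulus window such as 200–500 ms into sample indices for epochs that start at -200 ms at 500 Hz.

```python
from collections import deque

import numpy as np


def paired_t_map(diff: np.ndarray) -> np.ndarray:
    """One-sample t of paired differences at every (channel, time) sample."""
    n = diff.shape[0]
    return diff.mean(axis=0) / (diff.std(axis=0, ddof=1) / np.sqrt(n))


def find_clusters(mask: np.ndarray, neighbors) -> list:
    """Group supra-threshold samples that touch in time or across adjacent channels."""
    n_chan, n_time = mask.shape
    seen = np.zeros(mask.shape, dtype=bool)
    clusters = []
    for c in range(n_chan):
        for t in range(n_time):
            if not mask[c, t] or seen[c, t]:
                continue
            seen[c, t] = True
            members, queue = [], deque([(c, t)])
            while queue:
                cc, tt = queue.popleft()
                members.append((cc, tt))
                for nc, nt in [(cc, tt - 1), (cc, tt + 1)] + [(nb, tt) for nb in neighbors[cc]]:
                    if 0 <= nt < n_time and mask[nc, nt] and not seen[nc, nt]:
                        seen[nc, nt] = True
                        queue.append((nc, nt))
            clusters.append(members)
    return clusters


def cluster_sums(tmap: np.ndarray, threshold: float, neighbors) -> list:
    """Cluster-level statistic: sum of t within each positive and negative cluster."""
    sums = []
    for sign in (1.0, -1.0):
        for members in find_clusters(sign * tmap > threshold, neighbors):
            sums.append(float(sum(tmap[c, t] for c, t in members)))
    return sums


def cluster_permutation_test(cond_a, cond_b, neighbors, threshold, n_perm=2000, seed=0):
    """Within-participant test on (n_subj, n_chan, n_time) arrays.

    Swapping a participant's condition labels flips the sign of that
    participant's difference, so each randomization draws one sign per person.
    """
    rng = np.random.default_rng(seed)
    diff = np.asarray(cond_a, dtype=float) - np.asarray(cond_b, dtype=float)
    observed = cluster_sums(paired_t_map(diff), threshold, neighbors)
    null_max = np.empty(n_perm)
    for i in range(n_perm):
        signs = rng.choice([-1.0, 1.0], size=(diff.shape[0], 1, 1))
        perm = cluster_sums(paired_t_map(diff * signs), threshold, neighbors)
        null_max[i] = max((abs(s) for s in perm), default=0.0)
    # Monte Carlo p per observed cluster, compared against the max-|sum| null
    return [(s, (np.sum(null_max >= abs(s)) + 1) / (n_perm + 1)) for s in observed]


def window_slice(start_ms, end_ms, epoch_start_ms=-200, srate=500):
    """Sample slice covering [start_ms, end_ms] in an epoch starting at epoch_start_ms."""
    def to_idx(ms):
        return int(round((ms - epoch_start_ms) * srate / 1000))
    return slice(to_idx(start_ms), to_idx(end_ms) + 1)


# Usage on synthetic data: 17 participants, 4 channels in a chain, 60 samples
rng = np.random.default_rng(1)
n_subj, n_chan, n_time = 17, 4, 60
neighbors = {c: [n for n in (c - 1, c + 1) if 0 <= n < n_chan] for c in range(n_chan)}
congruent = rng.normal(size=(n_subj, n_chan, n_time))
incongruent = rng.normal(size=(n_subj, n_chan, n_time))
incongruent[:, :2, 20:40] += 3.0  # simulated effect on channels 0-1
results = cluster_permutation_test(incongruent, congruent, neighbors, threshold=2.12, n_perm=500)
```

On real epochs, restricting the test to the N400 window would amount to passing `data[:, :, window_slice(200, 500)]` for each condition; with 2000 randomizations the loop is simply run longer.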

3 Results

3.1 Behavioral Results

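We conducted repeated-measures ANOVAs on accuracy and reaction time, with valence congruency and music type as within-subject factors. For accuracy, the main effect of congruency was significant, F(1, 16) = 13.50, p = 0.002, ηp² = 0.46: listeners were more accurate on congruent pairs (M = 92.04%, SD = 5.93) than on incongruent pairs (M = 81.27%, SD = 14.40). The main effect of music type was also significant, F(1, 16) = 17.15, p = 0.001, ηp² = 0.52: listeners judged valence congruency more accurately with vocal primes (M = 89.79%, SD = 8.94) than with instrumental primes (M = 83.52%, SD = 14.21). The interaction was not significant, F(1, 16) = 0.02, p = 0.903.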

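For reaction time, the main effect of congruency was significant, F(1, 16) = 12.54, p = 0.003, ηp² = 0.44: listeners responded faster to congruent pairs (M = 993.40 ms, SD = 456.20) than to incongruent pairs (M = 1174.97 ms, SD = 595.25). Neither the main effect of music type [F(1, 16) = 0.49, p = 0.495] nor the interaction [F(1, 16) = 0.27, p = 0.613] was significant.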
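Because every factor in the behavioral 2 × 2 design has two levels, each F(1, 16) equals the square of a one-sample t-test on a per-participant contrast, and ηp² = F/(F + 16); for example, 13.50/(13.50 + 16) ≈ 0.46, matching the reported value. The sketch below computes the three contrasts from illustrative, made-up accuracy values (not the study's data); the condition labels are hypothetical.

```python
import math
from statistics import mean, stdev


def rm_anova_2x2(cells):
    """2 x 2 within-participant ANOVA computed from per-participant contrasts.

    `cells` maps (congruency, music_type) -> per-participant scores, with
    hypothetical level labels ("cong", "incong") x ("vocal", "instr").
    """
    cv, ci = cells[("cong", "vocal")], cells[("cong", "instr")]
    iv, ii = cells[("incong", "vocal")], cells[("incong", "instr")]
    n = len(cv)
    contrasts = {
        # congruent minus incongruent, averaged over music type
        "congruency": [(a + b - c - d) / 2 for a, b, c, d in zip(cv, ci, iv, ii)],
        # vocal minus instrumental, averaged over congruency
        "music_type": [(a + c - b - d) / 2 for a, b, c, d in zip(cv, ci, iv, ii)],
        # difference of differences
        "interaction": [(a - b) - (c - d) for a, b, c, d in zip(cv, ci, iv, ii)],
    }
    results = {}
    for name, scores in contrasts.items():
        t = mean(scores) / (stdev(scores) / math.sqrt(n))
        f = t * t  # F(1, n - 1) equals t squared for a one-df effect
        results[name] = {"F": f, "df": (1, n - 1), "eta_p2": f / (f + n - 1)}
    return results


# Illustrative, made-up accuracy proportions for five participants
cells = {
    ("cong", "vocal"): [0.95, 0.90, 0.92, 0.97, 0.93],
    ("cong", "instr"): [0.90, 0.86, 0.88, 0.93, 0.85],
    ("incong", "vocal"): [0.85, 0.80, 0.74, 0.88, 0.83],
    ("incong", "instr"): [0.80, 0.71, 0.69, 0.82, 0.77],
}
for effect, res in rm_anova_2x2(cells).items():
    print(effect, res)
```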

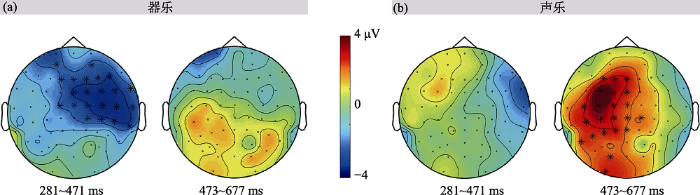

3.2 ERP Results

Figure 2

Figure 3

4 Discussion

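This study used ERPs to examine whether the electrophysiological responses underlying emotional processing differ between vocal and instrumental music. Compared with the congruent condition, faces emotionally incongruent with instrumental primes elicited an N400, whereas faces emotionally incongruent with vocal primes elicited an LPC. This indicates that the electrophysiological responses to emotional processing differ between vocal and instrumental music.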

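We found that when the prime was an instrumental excerpt, valence-incongruent face pictures elicited a larger N400 than congruent ones. This is consistent with previous findings: whether the prime was a single chord (Steinbeis & Koelsch, 2011; Zhou, Liu, Jiang, & Jiang, 2019) or an instrumental excerpt (Goerlich et al., 2012; Zhang et al., 2019), emotionally incongruent face pictures or emotional words elicited a larger N400 (or N400-like component) than congruent ones. The N400 in the present study may mainly reflect the detection and integration of emotionally conflicting information (Zhang et al., 2006, 2010). Specifically, the prime pre-activates emotional representations related to the target at the conceptual level, which reduces the N400 amplitude elicited by emotionally congruent targets (Goerlich et al., 2012). For emotionally incongruent targets, by contrast, the relevant emotional representations have not been pre-activated, so participants need more cognitive resources to integrate the emotional information (Zhang et al., 2010), which increases N400 amplitude. Because this activation is automatic (Zhang et al., 2006), the N400 is associated with the automatic integration of emotional information.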

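In addition, the N400 in this study was found mainly at right fronto-temporal and centro-parietal electrodes, indicating right-hemisphere lateralization. This agrees with previous findings that the N400 is right-lateralized when participants judge whether a target and a prime are congruent in emotion (Schirmer et al., 2005) or meaning (Painter & Koelsch, 2011). Indeed, fMRI research has also shown that instrumental music activates more right-hemisphere regions than songs do, such as the middle frontal gyrus, inferior frontal gyrus, and cingulate gyrus (Brattico et al., 2011). However, our results conflict with ERP studies in which the N400 elicited by targets emotionally incongruent with chord or instrumental primes was distributed across the whole scalp (Steinbeis & Koelsch, 2011; Zhou et al., 2019) or over anterior electrodes (Goerlich et al., 2012). This discrepancy may stem from differences in task: in those studies, participants judged the valence of the target, whereas in the present study they judged whether the target and the prime matched in valence, which may have led them to attend more to the emotion of the prime. Future research should examine this possibility.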

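We also found that when the prime was a vocal excerpt, valence-incongruent face pictures elicited a larger LPC than congruent ones. This is consistent with previous findings that face pictures emotionally incongruent with song primes (Zhang et al., 2018) or prosodic primes (Pell et al., 2015; Zheng & Huang, 2013) elicit a larger LPC. The LPC in the present study may reflect the allocation of attentional resources (Zhang et al., 2010, 2012): when the prime is a song, its relatively high arousal attracts more of the participant's attention (Schupp et al., 2000). The LPC may also reflect emotional integration (Herring et al., 2011), because listeners first need to integrate the emotions of the lyrics and the music and then match the result against the emotion of the face. Because verbal information is involved, listeners must process the emotional information consciously through propositional representations or deliberate thought (Strack & Deutsch, 2004), which reflects reflective integration (Imbir, Spustek, & Żygierewicz, 2016). However, emotional processing of vocal music did not elicit an N400. This replicates the finding of Zhang et al. (2018) that face pictures emotionally incongruent with songs did not elicit an N400. A possible reason is that the N400 and the LPC reflect different emotional-integration functions. This explanation is consistent with the dual-system model of emotion (Jarymowicz & Imbir, 2015), which holds that emotional responses to a stimulus involve two independent evaluative systems, an automatic system and a reflective system: the former does not depend on language, whereas the latter does (Imbir et al., 2016).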

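Regarding scalp distribution, the LPC in this study was found mainly at left mid-frontal and parieto-occipital electrodes, indicating left-hemisphere lateralization. This agrees with previous findings that left-hemisphere electrodes show a larger LPC when word emotions are associated with the reflective evaluative system (Imbir et al., 2016) or when a prime face and a target face are emotionally incongruent (Cheal, Heisz, Walsh, Shedden, & Rutherford, 2014). fMRI research has also shown that songs activate more left-hemisphere regions than instrumental music does, such as the superior temporal gyrus, putamen, cuneus, and cerebellar declive (Brattico et al., 2011). However, our results differ from ERP studies in which the LPC elicited by face pictures emotionally incongruent with song or prosodic primes was distributed across the whole scalp (Zhang et al., 2018) or over bilateral parieto-occipital electrodes (Pell et al., 2015; Zheng & Huang, 2013). This discrepancy may be related to differences in the valence and arousal of the primes used across studies. Because those studies did not report such data, we cannot determine the specific cause of the discrepancy.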

Acknowledgments

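We thank Professor Cunmei Jiang of the School of Music, Shanghai Normal University, for help in writing this article, and Dr. Danchao Cai of the Shanghai Public Health Clinical Center, Dr. Lijun Sun of the Institute of Psychology, Chinese Academy of Sciences, and Dr. Peiyang Li of Chongqing University of Posts and Telecommunications for help with ERP data analysis and figures.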

References

Songs and emotions: Are lyrics and melodies equal partners?

A functional MRI study of happy and sad emotions in music with and without lyrics

Afterimage induced neural activity during emotional face perception

Cortical processing of phonetic and emotional information in speech: A cross-modal priming study. DOI:10.1016/j.neuropsychologia.2016.01.019. PMID:26796714

G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences

Event-related potentials reveal evidence for late integration of emotional prosody and facial expression in dynamic stimuli: An ERP study

Preschoolers’ attribution of affect to music: A comparison between vocal and instrumental performance

The nature of affective priming in music and speech. DOI:10.1162/jocn_a_00213. PMID:22360592

Event-related potentials, emotion, and emotion regulation: An integrative review. DOI:10.1080/87565640903526504. PMID:20390599

The persistence of attention to emotion: Brain potentials during and after picture presentation. DOI:10.1037/1528-3542.8.2.250. PMID:18410198

Electrophysiological responses to evaluative priming: The LPP is sensitive to incongruity

Effects of valence and origin of emotions in word processing evidenced by event related potential correlates in a lexical decision task

Toward a human emotions taxonomy (based on their automatic vs. reflective origin)

The effect of learning on feedback-related potentials in adolescents with dyslexia: An EEG-ERP study

Thirty years and counting: Finding meaning in the N400 component of the event-related brain potential (ERP)

Effects of voice on emotional arousal

Nonparametric statistical testing of EEG- and MEG-data

FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data

Can out-of-context musical sounds convey meaning? An ERP study on the processing of meaning in music

Contextual influences of emotional speech prosody on face processing: How much is enough?

Early and late brain signatures of emotional prosody among individuals with high versus low power

Preferential decoding of emotion from human non-linguistic vocalizations versus speech prosody

On the role of attention for the processing of emotions in speech: Sex differences revisited

Affective picture processing: The late positive potential is modulated by motivational relevance. DOI:10.1111/psyp.2000.37.issue-2

Affective priming effects of musical sounds on the processing of word meaning. DOI:10.1162/jocn.2009.21383

Reflective and impulsive determinants of social behavior

Affective impact of music vs. lyrics

The interaction of arousal and valence in affective priming: Behavioral and electrophysiological evidence

Electrophysiological correlates of visual affective priming. DOI:10.1016/j.brainresbull.2006.09.023. PMID:17113962

Neural correlates of cross-domain affective priming

The effects of timbre on neural responses to musical emotion

Impaired emotional processing of chords in congenital amusia: Electrophysiological and behavioral evidence