1 Introduction

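Perception of the external world depends on integrating information across multiple sensory channels. In conversation, for example, a listener must combine the speaker's facial expressions, gestures, and voice. Audiovisual temporal integration is the process by which an individual represents auditory and visual stimuli that arrive within a certain time interval: stimuli that are not perfectly synchronous are reprocessed and bound into a single meaningful event (Stevenson, Fister, Barnett, Nidiffer, & Wallace, 2012; Stevenson & Wallace, 2013). According to the time-window-of-integration model, visual, auditory, and tactile cues enter in parallel, and their processing and processing times are mutually independent. As soon as the stimulus in one channel finishes processing, an integration window opens; if processing of the input from another channel also terminates within that window, multisensory integration occurs (Colonius & Diederich, 2004).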
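The window rule described above can be illustrated with a small Monte Carlo sketch. It is a simplification for illustration, not the published model: the exponential processing times, the parameter values, and the function name `integration_probability` are all assumptions introduced here.

```python
import random


def integration_probability(mean_visual_ms, mean_auditory_ms, soa_ms, window_ms,
                            n_trials=20000, seed=0):
    """Estimate how often two inputs finish processing within one window.

    soa_ms > 0 means the auditory stimulus starts that many ms after the
    visual stimulus (an assumed sign convention).
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_trials):
        # Assumption: independent, exponentially distributed processing times.
        visual_done = rng.expovariate(1.0 / mean_visual_ms)
        auditory_done = soa_ms + rng.expovariate(1.0 / mean_auditory_ms)
        # The window opens when the first channel finishes; integration occurs
        # if the other channel finishes before the window closes.
        if abs(visual_done - auditory_done) <= window_ms:
            hits += 1
    return hits / n_trials
```

Under these assumptions, widening `window_ms` or shrinking `soa_ms` raises the estimated probability of integration, which is the qualitative behavior the model predicts.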

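Autism spectrum disorder (ASD) is a group of pervasive neurodevelopmental disorders. Atypical sensory perception is a major aspect of its symptoms and is included among the core diagnostic criteria in the fifth edition of the Diagnostic and Statistical Manual of Mental Disorders (DSM-5) (American Psychiatric Association, 2013). Early research on sensory deficits in ASD focused mainly on abnormalities in unisensory processing. For example, individuals with ASD are hypersensitive to low-level acoustic cues such as pitch and intensity and show biased auditory decoding (O'Connor, 2012). More recently, cross-modal processing has drawn increasing attention. Neurophysiological studies show that neural activation during audiovisual integration differs between individuals with ASD and typically developing individuals. A functional magnetic resonance imaging (fMRI) study found that, during the integration of audiovisual emotional information, controls activated frontal and temporal association cortices, whereas individuals with ASD showed greater activation of a parietofrontal network (Doyle-Thomas, Goldberg, Szatmari, & Hall, 2013). A study of the time course of audiovisual semantic integration found that, in the 200-300 ms window, auditory input facilitated the processing of visual lip movements in controls, whereas this modulation was not evident in individuals with ASD (Megnin et al., 2012). Behavioral studies likewise indicate that individuals with ASD have deficits in integrating audiovisual, visuotactile, and audiotactile information, most notably for complex social stimuli (Baum, Stevenson, & Wallace, 2015; Greenfield, Ropar, Smith, Carey, & Newport, 2015; Russo et al., 2010). According to weak central coherence theory, "coherence" means integrating information into its context to achieve a higher-level gestalt. This process requires attentional resources and also depends on grasping the spatiotemporal properties of the information, so low sensitivity to temporal cues between cross-modal stimuli is an important source of integration deficits (Glessner et al., 2009; Stevenson, Zemtsov, & Wallace, 2012).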

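Research on audiovisual temporal integration in ASD can clarify how cross-modal integration deficits arise. It helps look beyond the multidimensional phenotype of sensory deficits and provides a theoretical basis for understanding both the complexity and the commonalities of the underlying causes. For example, the temporal window, a key index of audiovisual temporal integration, directly reflects how individuals with ASD and typically developing participants differ in integrating audiovisual stimuli over short intervals. Studies show that the temporal integration window is wider in ASD than in typically developing individuals: under the same conditions, individuals with ASD misestimate the timing of cross-modal inputs and are less able to separate asynchronous stimuli (Wallace & Stevenson, 2014). In addition, clarifying the temporal properties of audiovisual integration in this population would allow later behavioral and perceptual training to target temporal perception precisely, and would help explain how core symptoms such as language impairment and social deficits develop. For instance, temporal synchrony between lip movements and voice is critical for audiovisual speech integration, and reduced sensitivity to audiovisual synchrony in ASD weakens receptive language ability (Patten, Watson, & Baranek, 2014).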

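2 Methods for studying audiovisual temporal integration in autism

2.1 Sound-induced flash illusion task

The sound-induced flash illusion task probes audiovisual temporal integration in ASD implicitly. A single flash is presented together with two sounds: one sound coincides with the flash and the other is offset from it in time. Participants are asked to ignore the sounds and report how many flashes they saw (Foss-Feig et al., 2010). The temporal relationship between the flash and the sounds affects the perceived number of flashes: when the interval shrinks, or when the ability to perceive the temporal relationship is reduced, errors in the reported number of flashes increase (Shams, Kamitani, & Shimojo, 2002). The task mainly uses nonspeech stimuli and presents auditory and visual stimuli together, which resembles everyday experience and gives it relatively high ecological validity.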

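2.2 “Pip-pop” task

In the “pip-pop” task, a display of short line segments with various orientations changes randomly between two colors. The target is a horizontal or vertical segment, and a sound is presented each time the target changes color. Participants search for the target, and search accuracy and reaction time are recorded. The task taps temporal processing in audiovisual integration because a sound presented within a certain time of the visual change improves search efficiency, producing a “pop-out” effect. When the ability to perceive the temporal relationship between the auditory and visual stimuli is weaker, the effect weakens accordingly (van der Burg, Olivers, Bronkhorst, & Theeuwes, 2008; Collignon et al., 2013). The task offers a new angle on audiovisual temporal integration in ASD, but it requires key-press responses and is therefore suitable only for high-functioning individuals with ASD. In addition, it relies on reaction times, and because attention deficits are common in ASD, its reliability needs further improvement.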

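2.3 Simultaneity judgment task

The simultaneity judgment (SJ) task is used mainly to assess the ability of individuals with ASD to discriminate audiovisual synchrony. A stimulus in one modality (visual or auditory) is presented first, followed after a stimulus onset asynchrony (SOA) by a stimulus in the other modality, and participants judge whether the two occurred at the same time. Sensitivity to synchrony is measured by manipulating the SOA, which can be set as the study requires: typically 0-300 ms for nonspeech stimuli and 0-400 ms for speech stimuli (Stevenson, Siemann, Schneider et al., 2014). A function fitted to the response data across SOAs yields the temporal binding window and the point of subjective simultaneity (Baum et al., 2015). The task is elegant and easy to run, but participants must understand the instructions and respond by key press, so it is suitable only for high-functioning participants. Even high-functioning individuals with ASD may have difficulty with language comprehension, so whether the instructions were understood should be checked carefully.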
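As a rough illustration of the fitting step, the sketch below fits a Gaussian to the proportion of "simultaneous" responses by brute-force grid search and reads off the two indices. The Gaussian form, the grid ranges, the use of the full width at half maximum as the window, and the name `fit_sj_gaussian` are assumptions made for this example; published studies use a variety of fitting functions and window criteria.

```python
import math


def fit_sj_gaussian(soas_ms, p_simultaneous):
    """Least-squares grid search for
    p(SOA) = amp * exp(-(SOA - pss)^2 / (2 * sigma^2)).

    Returns (pss, sigma, amp, tbw), all times in milliseconds.
    """
    best = None
    for pss in range(-200, 201, 5):          # candidate peak locations
        for sigma in range(20, 401, 5):      # candidate widths
            for amp_pct in range(50, 101, 5):  # candidate peak heights
                amp = amp_pct / 100
                err = sum(
                    (amp * math.exp(-((s - pss) ** 2) / (2 * sigma ** 2)) - p) ** 2
                    for s, p in zip(soas_ms, p_simultaneous)
                )
                if best is None or err < best[0]:
                    best = (err, pss, sigma, amp)
    _, pss, sigma, amp = best
    # Assumed window criterion: full width at half maximum of the fitted curve.
    tbw = 2 * sigma * math.sqrt(2 * math.log(2))
    return pss, sigma, amp, tbw
```

Here the fitted peak location plays the role of the point of subjective simultaneity and the curve width plays the role of the window; a wider fitted curve corresponds to lower sensitivity to asynchrony.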

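2.4 Temporal order judgment task

The temporal order judgment (TOJ) task measures sensitivity to temporal order. Depending on the modality being judged, it can be a visual, auditory, or multisensory TOJ task; studies of audiovisual temporal integration usually use the multisensory version. The procedure is essentially the same as in the SJ task, except that participants judge which stimulus appeared first. Visual-leading trials (visual preceding auditory, VA) and auditory-leading trials (auditory preceding visual, AV) usually each make up 50% of trials. Sensitivity to temporal order is usually indexed by the point of subjective simultaneity and the just noticeable difference (JND) (de Boer-Schellekens, Eussen, & Vroomen, 2013; Kwakye, Foss-Feig, Cascio, Stone, & Wallace, 2011). Compared with the SJ task described above, the TOJ task is less affected by response bias and more precise, but it demands more cognitive processing and is more challenging for participants (Binder, 2015; Vroomen & Keetels, 2010).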
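One common way to obtain the two indices is to read them off the psychometric curve: the point of subjective simultaneity at the 50% point and the JND as half the distance between the 25% and 75% points. The sketch below does this by linear interpolation; the sign convention (positive SOA means vision leads), the interpolation in place of a fitted cumulative Gaussian, and the name `toj_pss_jnd` are assumptions for illustration.

```python
def toj_pss_jnd(soas_ms, p_visual_first):
    """Return (PSS, JND) from proportions of 'visual first' responses.

    soas_ms must be ascending, and p_visual_first must rise with SOA
    (positive SOA = vision leads, an assumed convention).
    """
    points = list(zip(soas_ms, p_visual_first))

    def soa_at(target):
        # Linearly interpolate the SOA at which the curve crosses `target`.
        for (s0, p0), (s1, p1) in zip(points, points[1:]):
            if p0 <= target <= p1 and p1 > p0:
                return s0 + (target - p0) * (s1 - s0) / (p1 - p0)
        raise ValueError("target proportion is not bracketed by the data")

    pss = soa_at(0.5)
    jnd = (soa_at(0.75) - soa_at(0.25)) / 2
    return pss, jnd
```

A larger JND means a shallower curve and therefore lower sensitivity to temporal order.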

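2.5 Preferential-looking task

The preferential-looking task is based on eye tracking and is used mainly to test how individuals with ASD perceive temporal synchrony in speech stimuli. Two videos with identical content but offset in time are shown while a matching soundtrack is played through headphones; the sound is synchronous with only one of the videos. The rationale is that, when a fixed temporal relationship exists between auditory and visual information, the auditory information influences looking patterns. If the sound were paired with the two videos at random, looking time should be split 50% to each video; when the temporal relationship between the sound and one video is fixed, individuals look longer at the synchronous video (Bebko, Weiss, Demark, & Gomez, 2006; Grossman, Steinhart, Mitchell, & McIlvane, 2015). The instructions are simple, the demands on language and cognitive ability are low, no key press is required, data collection is unobtrusive, and the procedure is engaging, so the task is suitable for young children and low-functioning participants.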

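As the methods above show, audiovisual temporal integration in ASD has been studied with diverse tasks and from several measurement angles. The sound-induced flash illusion and “pip-pop” tasks examine, implicitly, the time point and time range over which audition directly modulates visual processing. The preferential-looking task measures differences in eye movements between individuals with ASD and typically developing participants during the perception of audiovisual synchrony. The SJ and TOJ tasks measure sensitivity to cross-modal timing explicitly; their main variable is whether stimuli are presented synchronously or asynchronously. The SJ task requires only detecting whether the stimuli are simultaneous, whereas the TOJ task additionally requires discriminating which came first and therefore involves more mental processing. Studies find that temporal perception of different stimulus types in ASD depends on the task. For nonspeech stimuli, for example, individuals with ASD show a wide temporal window in the sound-induced flash illusion task but do not differ significantly from typically developing participants in the preferential-looking, SJ, and TOJ tasks (Foss-Feig et al., 2010; Bebko et al., 2006; Stevenson, Siemann, Schneider et al., 2014). This suggests that cross-modal temporal order perception and implicit audiovisual temporal processing may rely on different mechanisms in ASD.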

3 Manifestations of audiovisual temporal integration deficits in autism

3.1 Atypical audiovisual temporal binding window

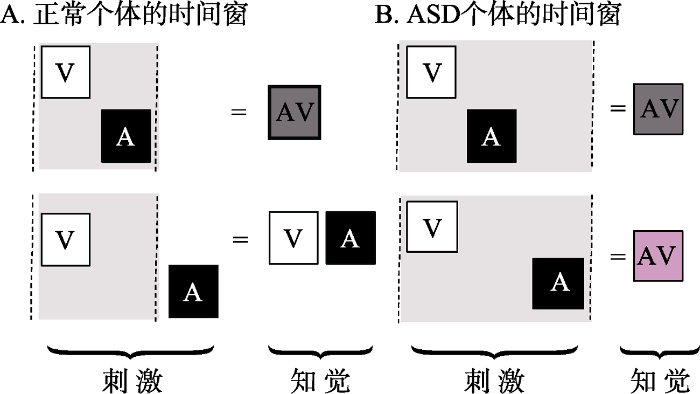

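During audiovisual integration, perception can disregard small timing differences between auditory and visual stimuli and bind asynchronous stimuli into a single meaningful event. The interval within which this binding occurs is called the temporal binding window (TBW). Stimuli arriving within the window can be integrated into one event; stimuli separated by more than the window are perceived as two distinct events. The TBW reflects how sensitive the brain is to the interval between auditory and visual stimuli: the wider the TBW, the lower the sensitivity to timing differences. When the TBW widens, the temporal relationship between auditory and visual stimuli becomes blurred. Stimuli arriving within that range may be integrated, but distinct events often cannot be told apart, which weakens multisensory perceptual representations and can even produce erroneous integration (Stevenson et al., 2016). Many studies show that individuals with ASD have a wider TBW than typically developing participants (Foss-Feig et al., 2010; Kwakye et al., 2011; Stevenson, Siemann, Schneider et al., 2014; Stevenson, Siemann, Woynaroski et al., 2014) (see Figure 1).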

Figure 1. Structure of the temporal binding window in typically developing individuals and individuals with ASD (Source: Stevenson et al., 2016)

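First, the TBW for nonspeech stimuli in ASD is about twice that of typically developing participants. One study used the sound-induced flash illusion task with 46 participants aged 8-17 years, with and without ASD. When the SOA fell within a 300 ms range (-150 to +150 ms), typically developing participants were more likely to report two flashes; in participants with ASD, this increase extended over roughly 600 ms (-300 to +300 ms). In the baseline condition, with one dot and one sound, the groups did not differ significantly, which indicates that the group difference was not due to response bias and that audition interferes with visual processing over a longer period in ASD (Foss-Feig et al., 2010). A later study tested directly how audition affects visual temporal order judgments in ASD. Building on a visual TOJ task, two sounds accompanied the two visual stimuli: the first sound was presented simultaneously with the first visual stimulus, and the second sound was presented one SOA after the second visual stimulus. In typically developing participants, the sounds improved visual temporal order judgments at SOAs of 50-150 ms, whereas in participants with ASD accuracy improved across the whole 0-300 ms range (Kwakye et al., 2011).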

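Second, stimulus type affects the width of the TBW. The TBW for speech stimuli is wider in ASD than in typically developing participants and is related to the strength of the McGurk effect. This effect is used mainly to index audiovisual speech integration: the stronger the effect, the stronger the integration (Stevenson, Siemann, Schneider et al., 2014; Baum et al., 2015). On the one hand, behavioral research has examined the relationship between temporal processing and audiovisual speech integration in ASD using three types of stimuli: simple nonspeech, complex nonspeech, and speech. Compared with typically developing participants, individuals with ASD had a wider TBW for speech stimuli, and in this group the association between TBW width and the strength of the McGurk effect grew progressively stronger from simple nonspeech to complex nonspeech to speech stimuli, a trend that was absent in the control group (Stevenson, Siemann, Schneider et al., 2014; Stevenson, Siemann, Woynaroski et al., 2014). On the other hand, neurophysiological research indicates that the posterior superior temporal sulcus (pSTS) is an important neural substrate of audiovisual temporal and speech integration and plays a key role in the McGurk effect, and that activation of this region differs between individuals with ASD and typically developing participants (Hocking & Price, 2008; Stevenson, VanDerKlok, Pisoni, & James, 2011; Beauchamp, Nath, & Pasalar, 2010). An fMRI study compared neural activation in the two groups for different stimulus types presented synchronously and asynchronously. For speech stimuli, synchronous presentation significantly reduced pSTS activation in typically developing participants but produced no significant change in individuals with ASD. For nonspeech stimuli, the groups did not differ significantly under either synchronous or asynchronous presentation. These results suggest that integration efficiency does not improve in ASD when audiovisual speech is presented synchronously, and that the neural mechanisms involved in processing speech stimuli are atypical (Stevenson et al., 2013).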

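Third, individuals with dyslexia also show a wide TBW, but in a different way. Individuals with dyslexia have deficits in both visual and auditory temporal processing, whereas individuals with ASD have deficits only in auditory temporal processing (Laasonen, Service, & Virsu, 2001; Hairston, Burdette, Flowers, Wood, & Wallace, 2005; Kwakye et al., 2011). The two groups also differ in neural processing: ASD is characterized by local hyperconnectivity and insufficient long-range connectivity, whereas dyslexia is characterized by insufficient short-range connectivity and excessive terminal connectivity (Rippon, Brock, Brown, & Boucher, 2007; Williams & Casanova, 2010).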

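Finally, the TBW in ASD is more symmetric. Research on the TBW in ASD has so far relied mainly on laboratory experiments in which the interval between auditory and visual stimuli is controlled by the experimenter and AV and VA trials are usually presented in random order. In natural settings, however, light and sound travel at different speeds, so auditory information reaches the observer later than visual information. The typical TBW is therefore asymmetric, wider on the left and narrower on the right, whereas the TBW in ASD is more symmetric and resembles that of typically developing young children (Hillock-Dunn & Wallace, 2012; Hillock, Powers, & Wallace, 2011).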

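3.2 Insufficient rapid audiovisual temporal recalibration

Rapid audiovisual temporal recalibration is the outcome of the brain readapting to the temporal relationship between auditory and visual stimuli. When the stimuli are asynchronous, the perceived temporal relationship shifts in the direction of the lag so that the stimuli can be integrated into a single event (van der Burg, Alais, & Cass, 2013). Recalibration is usually quantified by the shift in the point of subjective simultaneity (PSS). The PSS is the SOA at which two stimuli are subjectively perceived as simultaneous, even though a physical interval may separate them (Noel, De Niear, Stevenson, Alais, & Wallace, 2017). If perception were perfectly veridical, the PSS would lie at an SOA of 0, but it shifts during recalibration. The larger the shift, the stronger the recalibration, that is, the better the adaptation to audiovisual asynchrony. Compared with typically developing participants, individuals with ASD adapt less readily to audiovisual asynchrony, and their recalibration generalizes less across stimulus types (Turi, Karaminis, Pellicano, & Burr, 2016; Noel et al., 2017).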

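One study examined rapid audiovisual temporal recalibration in adults with and without ASD using simple nonspeech stimuli. When an auditory-leading trial was followed by a visual-leading trial, the preceding trial influenced the current one: the PSS of typically developing adults shifted by 53 ms, whereas that of adults with ASD hardly shifted at all (Turi et al., 2016). This indicates that, in typically developing adults, asynchronous presentation changes the adaptation threshold for the temporal order of the leading modality, and the adaptation effect grows as the interval between the stimuli increases, whereas individuals with ASD are unaffected. Another study examined how rapid recalibration in adolescents with ASD relates to stimulus type, using simple nonspeech, complex nonspeech, and speech stimuli. For speech stimuli, recalibration in participants with ASD did not differ from that of the typically developing group. For simple and complex nonspeech stimuli, the PSS shift was significantly smaller in participants with ASD: 33.15 ms (typically developing) versus 17.96 ms (ASD) for simple nonspeech stimuli, and 18.06 ms versus 9.12 ms for complex nonspeech stimuli (Noel et al., 2017).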
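The trial-by-trial logic of this measure can be sketched as follows: split the trials by which modality led on the previous trial, estimate a PSS for each subset, and take the difference. The centroid estimator used here (the mean SOA of trials judged simultaneous) is a deliberately crude stand-in for a fitted curve, and the sign convention and the name `rapid_recalibration_shift` are assumptions for illustration, not the procedure of the cited studies.

```python
def rapid_recalibration_shift(trials):
    """Estimate the PSS shift driven by the immediately preceding trial.

    trials: list of (soa_ms, judged_simultaneous) in presentation order,
    with soa_ms > 0 when vision leads and < 0 when audition leads
    (an assumed convention).
    """
    def centroid_pss(subset):
        # Crude PSS estimate: mean SOA of the trials judged simultaneous.
        # Raises ZeroDivisionError if no trial in the subset was so judged.
        sync_soas = [soa for soa, judged in subset if judged]
        return sum(sync_soas) / len(sync_soas)

    pairs = list(zip(trials, trials[1:]))
    after_vision_lead = [cur for prev, cur in pairs if prev[0] > 0]
    after_audio_lead = [cur for prev, cur in pairs if prev[0] < 0]
    return centroid_pss(after_vision_lead) - centroid_pss(after_audio_lead)
```

A shift near zero, as reported for adults with ASD, would mean that the preceding trial leaves the current simultaneity judgment essentially unchanged.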

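Notably, research with typically developing adults shows a positive correlation between rapid audiovisual temporal recalibration and the TBW (van der Burg et al., 2013), but Noel et al. (2017) found no such correlation. Age may explain the discrepancy: the former study tested adults, whereas the latter tested adolescents. Bayesian theory holds that every aspect of sensory integration changes with age, and the TBW of typically developing individuals does not mature until adulthood (Lewkowicz & Flom, 2014; Hillock-Dunn & Wallace, 2012).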

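3.3 Weak facilitation of visual search by auditory temporal cues

During audiovisual integration, when auditory and visual stimuli occur within a certain interval, the brain coordinates processing in the two channels so that they influence each other. Audition can then modulate visual processing on the basis of the temporal correspondence between the stimuli (Shi, Chen, & Müller, 2010). The “pop-out” effect is one such cross-channel modulation, and it depends on processing the temporal relationship between auditory and visual information (van der Burg et al., 2008). One study tested individuals with ASD and typically developing participants aged 14-31 years at three load levels, set by varying the number of display items (24, 36, and 48). When the “pip” sound was presented, reaction times and accuracy improved significantly at all levels in typically developing participants but did not improve in individuals with ASD; without the sound, participants with ASD responded faster than typically developing participants (Collignon et al., 2013). This suggests that audiovisual integration deficits in ASD may arise from an inability to perceive the temporal relationship between auditory and visual stimuli precisely.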

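Other research, however, reached a different conclusion. de Boer-Schellekens, Keetels, et al. (2013) used three tasks: a visual temporal order judgment task accompanied by sounds, the “pip-pop” task, and a clock-reading task. Individuals with ASD were less sensitive to visual temporal order, but when sounds were presented the JND decreased significantly in both groups (that is, sensitivity improved), and it decreased more in participants with ASD than in typically developing participants. This indicates that individuals with ASD can use sound cues to improve visual temporal order judgments. Likewise, in the “pip-pop” task, visual search efficiency improved with the sound in individuals with ASD just as in typically developing participants, which shows that individuals with ASD can perceive the temporal synchrony of auditory and visual stimuli.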

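The conflicting results may stem from attention deficits in ASD. Distributed visual attention has been found to benefit the perception of audiovisual synchrony in the “pip-pop” task (van der Burg et al., 2008). In the study by de Boer-Schellekens, Keetels, et al. (2013), participants were told explicitly that the sound coincided with the color change of the target. Participants with ASD could therefore choose their search strategy freely and use top-down cognitive processing to aid visual search, compensating for a bottom-up deficit in audiovisual integration. In the study by Collignon et al. (2013), by contrast, participants were required to fixate a central point. Given the weak central coherence that characterizes processing in ASD, this requirement would reinforce a bias toward local processing and hinder the perception of audiovisual synchrony (Happé & Frith, 2006).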

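3.4 Low sensitivity to the temporal order of audiovisual speech

Audiovisual temporal order perception refers to perceiving whether an auditory and a visual event occur simultaneously and, if they occur in succession, in which order. Its sensitivity depends on stimulus type and is usually lower for speech than for nonspeech stimuli (Vatakis & Spence, 2006). Most studies find that individuals with ASD are less sensitive to temporal order than typically developing participants, with differences across stimulus types and tasks (Grossman et al., 2015; de Boer-Schellekens, Eussen, et al., 2013).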

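On the one hand, studies of audiovisual synchrony perception show that individuals with ASD are poor at discriminating synchrony in speech stimuli. One study used the preferential-looking task to test synchrony discrimination for nonspeech, simple speech, and complex speech stimuli in 4- to 6-year-old children with ASD, typically developing children, and children with other developmental disabilities. Synchrony discrimination for nonspeech stimuli did not differ significantly between children with ASD and typically developing children, but for simple and complex speech stimuli it was significantly poorer in the ASD group (Bebko et al., 2006). A later study used the same task under implicit and explicit conditions. The control group was more likely to look at the synchronous video in the explicit than in the implicit condition, whereas the ASD group showed no significant difference between conditions and looked at the synchronous video significantly less often than controls in both (Grossman et al., 2015). On the other hand, in audiovisual temporal order judgment tasks, individuals with ASD are less sensitive to temporal order regardless of stimulus type. A study of temporal order perception for different stimulus types in adolescents with and without ASD found a larger JND in the ASD group (116.6 ms) than in the typically developing group (88.1 ms), but the group difference did not vary significantly with stimulus type (de Boer-Schellekens, Eussen, et al., 2013).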

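In sum, individuals with ASD have a deficit in discriminating temporal synchrony in speech stimuli. One possibility is that they have not yet established temporal synchrony between speech events. Another is that trials were too brief, so that, given their weaker discrimination ability, they failed to detect the synchrony of the stimuli. In addition, the temporal perception of nonspeech stimuli in ASD differs across tasks, which may reflect differences in the SOAs used: the offset was 3 s in Bebko et al. (2006) but 40-320 ms in de Boer-Schellekens, Eussen, et al. (2013).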

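4 Theoretical accounts of audiovisual temporal integration deficits

4.1 Temporal binding deficit hypothesis

The temporal binding deficit hypothesis holds that temporal processing deficits in ASD result from abnormal timing of neural activation: neural firing is temporally unreliable, as in excessive hypersynchronous discharge or abnormal burst firing (Brock, Brown, Boucher, & Rippon, 2002). The hypothesis presupposes that the brain processes information by binding features. In audiovisual integration, the key to combining information distributed across cortical areas is the temporal relationship among the neural activations coding different features. When a stream of audiovisual information arrives within a short time, it activates many neurons, and disordered timing among these activations produces a perceptual binding deficit. For example, studies find that individuals with ASD can integrate auditory and visual stimuli but need more processing time, which appears as a wide TBW (Foss-Feig et al., 2010; Kwakye et al., 2011). On this account, temporal binding between local neural networks is possible but weak, whereas temporal binding within local networks is intact or even superior to that of typically developing participants. This hypothesis is currently the most central account of audiovisual temporal processing deficits in ASD. Because it was proposed relatively early, however, it remains underspecified regarding the information-processing model of feature binding, the validity of temporal binding theory, the binding of complex stimuli, and synchronized gamma-band activity (Brock et al., 2002).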

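4.2 Decreased signal-to-noise ratio hypothesis

The decreased signal-to-noise ratio hypothesis holds that neural coding in ASD has a low signal-to-noise ratio: spontaneous firing and response variability are high, so responses are unstable and information extraction is impaired. Auditory and visual information presented within a certain time range influence each other, and greater temporal variability in unisensory neural activation disrupts bimodal integration (Rubenstein & Merzenich, 2003; Dinstein et al., 2012). Normally, a unisensory stimulus activates the relevant neurons the moment it appears, but in ASD the timing of this coupling is perceived less precisely. One study asked whether atypical unisensory temporal processing could produce the audiovisual temporal integration deficit in ASD. Visual temporal processing did not differ significantly from that of typically developing participants, but sensitivity in auditory temporal processing was significantly lower in the ASD group (Kwakye et al., 2011). An event-related potential study found that responses to auditory stimuli in quiet in individuals with ASD resembled the responses of controls in background noise, which indicates a weakened baseline response to auditory stimuli (Russo, Zecker, Trommer, Chen, & Kraus, 2009). This hypothesis explains the wide TBW in ASD at the level of neural mechanisms, but it differs from the temporal binding deficit hypothesis. The temporal binding deficit hypothesis attributes the problem mainly to atypical temporal processing between neural networks, with processing within local networks intact; the decreased signal-to-noise ratio hypothesis holds that atypical unisensory temporal processing gives rise to the bimodal integration deficit.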

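4.3 Bayesian theory

Bayesian theory holds that perception results from an optimal match between sensory input and internal representations, and that individuals with ASD rely excessively on current sensory information while neglecting prior experience (Pellicano & Burr, 2012). During audiovisual temporal recalibration, an individual must reconcile the asynchrony between the internal representation and the actual sensory signals, minimizing the discrepancy between sensory cues from the same event in order to promote integration. Typically developing individuals adjust flexibly when such a discrepancy arises. In ASD, however, sensory cues carry more weight, so the mismatch between internal expectation and the actual sensory signal is reduced, which weakens recalibration. The main source of the mismatch is the difference in strength between the internal representation and the expected distribution of sensory noise, and this difference varies with stimulus complexity. For example, rapid audiovisual temporal recalibration in ASD does not differ from that of controls for speech stimuli but is significantly weaker for nonspeech stimuli (Turi et al., 2016; Noel et al., 2017). The reason is that low-level stimuli carry less sensory noise than speech stimuli. Given the same sensory signal, the internal representation of low-level stimuli is less noisy in ASD, the error signal is weaker, and recalibration fails. The internal representation of speech stimuli is noisier, so both the ASD and control groups can recalibrate. The theory explains the role of prior experience in audiovisual temporal integration, but it mainly addresses the deficit in rapid recalibration in ASD, and the empirical work so far concentrates on immediate, rapid recalibration and seldom addresses recalibration built on long-term adaptation.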
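The weighting idea can be made concrete with the standard Gaussian case, offered here as an illustrative sketch rather than the specific model of the cited studies. The symbols are assumptions introduced for this example: $x$ is the sensory measurement of the audiovisual lag with noise variance $\sigma_x^2$, and $\mu_p$ and $\sigma_p^2$ are the mean and variance of the prior.

```latex
\begin{align}
  w       &= \frac{\sigma_p^2}{\sigma_p^2 + \sigma_x^2}, \\
  \hat{s} &= w\,x + (1 - w)\,\mu_p, \\
  x - \hat{s} &= (1 - w)\,(x - \mu_p).
\end{align}
```

When the sensory signal is weighted heavily ($w \to 1$, for instance because the prior is broad or the sensory noise is small), the estimate $\hat{s}$ follows $x$ closely and the residual mismatch $x - \hat{s}$ shrinks toward zero, leaving little error signal to drive recalibration.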

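4.4 Predictive coding hypothesis

The predictive coding hypothesis holds that multisensory integration comprises three stages: simultaneous input of auditory and visual information, matching against internal representations, and discrimination or response. Throughout, auditory and visual stimuli influence each other according to their temporal relationship. If the visual channel finishes processing first, it provides reliable cues for auditory processing and thereby improves processing efficiency. For example, the spoken syllable "ba" can be perceived from auditory cues or from visual cues; if the auditory channel is activated first, it facilitates visual processing (Altieri, 2014). Because individuals with ASD are less able to grasp the statistical structure of environmental information, they cannot track the temporal relationship between auditory and visual stimuli precisely. Consequently, even when auditory and visual stimuli both reach threshold within a certain time, the two are processed independently and audition does not influence vision (Pellicano & Burr, 2012). In the “pop-out” effect, for instance, individuals with ASD cannot use the auditory cue to facilitate visual processing, so visual search efficiency does not improve (Collignon et al., 2013). The hypothesis explains implicit temporal processing deficits in ASD from a neurocomputational perspective, but empirical work is still scarce and mostly indirect, and direct evidence is needed.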

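In sum, current theories explain audiovisual temporal integration deficits in ASD in terms of atypical neural activation and differences in cognitive processing. They have received some support from behavioral studies, but relevant neurophysiological research remains scarce. Moreover, audiovisual temporal integration involves processing not only the physical time between stimuli but also psychological time, and the neural activation involved unfolds over time. Whether this activation follows the same pattern in individuals with ASD and typically developing participants is something current theories cannot yet explain. Future work should strengthen research on neural mechanisms, for example by building theoretical models of audiovisual temporal integration and testing them against behavioral data. In research on audiovisual speech integration, for instance, a neurophysiologically informed computational model was fitted to data from typically developing participants and then compared with data from individuals with ASD. The results indicated that the audiovisual speech integration deficit in ASD arises not from weak synaptic connectivity or plasticity but from deficits in attention and multisensory processing (Cuppini et al., 2017).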

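5 Summary and outlook

Research on audiovisual temporal integration examines the temporal processing mechanisms involved in audiovisual integration. Studies of ASD have identified deficits in the temporal window of audiovisual integration, in the discrimination of cross-modal temporal order, and in the implicit processing of temporal cues. The methods are diverse but mainly behavioral; the focus has been the atypical TBW and, through the use of different stimulus types, its relationship to audiovisual speech processing. Theories explain these deficits in terms of neural processing mechanisms, the role of prior experience, and the stages of multisensory processing. Several issues in this field nonetheless require further study.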

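5.1 Improving the ecological validity of research

Current methods for studying audiovisual temporal integration in ASD comprise the SJ, TOJ, and preferential-looking tasks for explicit temporal perception and the sound-induced flash illusion and “pip-pop” tasks for implicit temporal processing, but these methods have shortcomings. First, attention and temporal processing need to be separated more clearly in implicit paradigms. In studies of audiovisual integration, a sound affects visual processing. Simultaneous auditory and visual stimuli produce an integration effect, but when the sound precedes the visual stimulus, for example by 200 ms, the improvement in visual processing may reflect alerting by the sound rather than integration (de Boer-Schellekens, Keetels, Eussen, & Vroomen, 2013). Whether audiovisual integration deficits in ASD arise from imprecise perception of temporal cues between cross-modal stimuli or from attention deficits, and how the two mechanisms interact, deserves closer study. Second, methods should be adapted to a wider range of participants. ASD is a spectrum with many subtypes and very large differences in symptoms and between individuals. Current tasks each target specific groups: the SJ, TOJ, “pip-pop”, and sound-induced flash illusion tasks are suitable mainly for high-functioning participants with ASD, and even some of these participants cannot complete them, whereas the preferential-looking task is used mainly with young children. Future work should simplify the tasks and develop ones suitable for low-functioning participants and for different subtypes.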

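5.2 Integrating theoretical accounts

Current theoretical accounts of audiovisual temporal integration deficits in ASD lack generality. The temporal binding deficit and decreased signal-to-noise ratio hypotheses mainly explain the wide TBW, Bayesian theory mainly explains insufficient rapid audiovisual temporal recalibration, and the predictive coding hypothesis explains implicit temporal processing deficits. Audiovisual temporal integration, however, is a dynamic, holistic process that may involve several modes of processing. One proposal, for example, is that typically developing individuals handle audiovisual synchrony in four ways: the brain may simply ignore the timing difference between auditory and visual stimuli; the central nervous system may compensate for delayed stimuli; the individual may flexibly adjust for the asynchrony to minimize the difference and promote integration; and the perceived timing of a stimulus in one channel may shift toward that of the other channel (Vroomen & Keetels, 2010). It is therefore necessary to integrate existing findings further and to propose a more comprehensive and systematic theoretical account.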

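5.3 Quantifying diagnostic indices precisely

The temporal window, the point of subjective simultaneity, and the just noticeable difference are currently the main indices of audiovisual temporal integration. All three can be quantified precisely, but caution is needed before using them as diagnostic evidence of audiovisual temporal integration deficits in ASD. First, the quantification of TBW width deserves attention. The TBW is generally about 300 ms in typically developing individuals and has been reported as about 600 ms in ASD (Foss-Feig et al., 2010). However, because of differences in tasks, participants' response biases, stimulus materials, and statistical criteria, the calculation of the TBW is not yet precise or consistent across studies (Stevenson & Wallace, 2013). If its consistency across tasks, ages, and other factors can be established, it would provide important evidence for diagnosing ASD. Second, the three indices should be used together. Beyond TBW width, the PSS shift in typically developing individuals after adaptation to an audiovisual lag first increases and then decreases, peaking at lags of about 100-200 ms, and the JND for temporal perception also varies between individuals (Vroomen, Keetels, De Gelder, & Bertelson, 2004; Fujisaki, Shimojo, Kashino, & Nishida, 2004; Stevenson, Zemtsov, et al., 2012). Combining the three indices in future work could make diagnostic evidence more reliable.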

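5.4 Developing effective interventions

Interventions targeting audiovisual temporal integration in ASD could improve temporal perception and audiovisual integration and, in turn, speech perception and social functioning. Intervention research in ASD is still scarce, whereas research with typically developing individuals has established two fairly systematic approaches at the behavioral and neural levels. One is bottom-up recalibration through exposure, in which training progresses from simple to complex tasks to gradually increase sensitivity to audiovisual timing. The other is top-down feedback training, in which feedback is used to adjust the perception of audiovisual timing continually (Fujisaki et al., 2004; Powers, Hillock, & Wallace, 2009; Powers, Hevey, & Wallace, 2012). These interventions mainly use perceptual learning tasks, which are simple and easy to administer and could be used with participants with ASD. In addition, video game experience can improve temporal perception: individuals with extensive video game experience are more sensitive to audiovisual timing in SJ and TOJ tasks (Donohue, Woldorff, & Mitroff, 2010). Future work could use multimedia technology to refine perceptual learning tasks and develop interventions suited to individuals with ASD. Such interventions would be engaging and practical, would let participants improve their audiovisual temporal perception through play, and could be tailored to participants' age and level of functioning.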

References

Altieri (2014). Multisensory integration, learning, and the predictive coding hypothesis. DOI:10.3389/fpsyg.2014.00257. PMID:24715884.

Baum, Stevenson, & Wallace (2015). Testing sensory and multisensory function in children with autism spectrum disorder. DOI:10.3791/52677. PMID:25938209.


Beauchamp, Nath, & Pasalar (2010). fMRI-guided transcranial magnetic stimulation reveals that the superior temporal sulcus is a cortical locus of the McGurk effect. DOI:10.1523/JNEUROSCI.4865-09.2010. PMID:20164324.


Bebko, Weiss, Demark, & Gomez (2006). Discrimination of temporal synchrony in intermodal events by children with autism and children with developmental disabilities without autism. DOI:10.1111/j.1469-7610.2005.01443.x. PMID:16405645.


Binder (2015). Neural correlates of audiovisual temporal processing: Comparison of temporal order and simultaneity judgments. DOI:10.1016/j.neuroscience.2015.05.011. PMID:25982561.


Brock, Brown, Boucher, & Rippon (2002). The temporal binding deficit hypothesis of autism.

Collignon et al. (2013). Reduced multisensory facilitation in persons with autism. DOI:10.1016/j.cortex.2012.06.001. PMID:22818902.


Colonius & Diederich (2004). Multisensory interaction in saccadic reaction time: A time-window-of-integration model. DOI:10.1162/0898929041502733. PMID:15298787.


Cuppini et al. (2017). A computational analysis of neural mechanisms underlying the maturation of multisensory speech integration in neurotypical children and those on the autism spectrum. DOI:10.3389/fnhum.2017.00518. PMID:5670153.


de Boer-Schellekens, Eussen, & Vroomen (2013). Diminished sensitivity of audiovisual temporal order in autism spectrum disorder. DOI:10.3389/fnint.2013.00008. PMID:23450453.


de Boer-Schellekens, Keetels, Eussen, & Vroomen (2013). No evidence for impaired multisensory integration of low-level audiovisual stimuli in adolescents and young adults with autism spectrum disorders. DOI:10.1016/j.neuropsychologia.2013.10.005. PMID:24157536.


Dinstein et al. (2012). Unreliable evoked responses in autism. DOI:10.1016/j.neuron.2012.07.026. PMID:22998867.


Donohue, Woldorff, & Mitroff (2010). Video game players show more precise multisensory temporal processing abilities. DOI:10.3758/APP.72.4.1120. PMID:20436205.


Doyle-Thomas, Goldberg, Szatmari, & Hall (2013). Neurofunctional underpinnings of audiovisual emotion processing in teens with autism spectrum disorders. DOI:10.3389/fpsyt.2013.00048. PMID:23750139.


An extended multisensory temporal binding window in autism spectrum disorders

DOI:10.1007/s00221-010-2240-4 [Cited in this article: 6]

Recalibration of audiovisual simultaneity

DOI:10.1038/nn1268

PMID:15195098

[Cited in this article: 2]

To perceive the auditory and visual aspects of a physical event as occurring simultaneously, the brain must adjust for differences between the two modalities in both physical transmission time and sensory processing time. One possible strategy to overcome this difficulty is to adaptively recalibrate the simultaneity point from daily experience of audiovisual events. Here we report that after exposure to a fixed audiovisual time lag for several minutes, human participants showed shifts in their subjective simultaneity responses toward that particular lag. This 'lag adaptation' also altered the temporal tuning of an auditory-induced visual illusion, suggesting that adaptation occurred via changes in sensory processing, rather than as a result of a cognitive shift while making task responses. Our findings suggest that the brain attempts to adjust subjective simultaneity across different modalities by detecting and reducing time lags between inputs that likely arise from the same physical events.

Autism genome-wide copy number variation reveals ubiquitin and neuronal genes

DOI:10.1038/nature07953

PMID:19404257

[Cited in this article: 1]

Autism spectrum disorders (ASDs) are childhood neurodevelopmental disorders with complex genetic origins. Previous studies focusing on candidate genes or genomic regions have identified several copy number variations (CNVs) that are associated with an increased risk of ASDs. Here we present the results from a whole-genome CNV study on a cohort of 859 ASD cases and 1,409 healthy children of European ancestry who were genotyped with approximately 550,000 single nucleotide polymorphism markers, in an attempt to comprehensively identify CNVs conferring susceptibility to ASDs. Positive findings were evaluated in an independent cohort of 1,336 ASD cases and 1,110 controls of European ancestry. Besides previously reported ASD candidate genes, such as NRXN1 (ref. 10) and CNTN4 (refs 11, 12), several new susceptibility genes encoding neuronal cell-adhesion molecules, including NLGN1 and ASTN2, were enriched with CNVs in ASD cases compared to controls (P = 9.5 × 10⁻³). Furthermore, CNVs within or surrounding genes involved in the ubiquitin pathways, including UBE3A, PARK2, RFWD2 and FBXO40, were affected by CNVs not observed in controls (P = 3.3 × 10⁻³). We also identified duplications 55 kilobases upstream of complementary DNA AK123120 (P = 3.6 × 10⁻⁶). Although these variants may be individually rare, they target genes involved in neuronal cell-adhesion or ubiquitin degradation, indicating that these two important gene networks expressed within the central nervous system may contribute to the genetic susceptibility of ASD.

Visuo-tactile integration in autism: Atypical temporal binding may underlie greater reliance on proprioceptive information

DOI:10.1186/s13229-015-0045-9

PMID:4570750

[Cited in this article: 1]

Background: Evidence indicates that social functioning deficits and sensory sensitivities in autism spectrum disorder (ASD) are related to atypical sensory integration. The exact mechanisms underlying...

“Look who's talking!” Gaze patterns for implicit and explicit audio-visual speech synchrony detection in children with high-functioning autism

DOI:10.1002/aur.1447

PMID:25620208

[Cited in this article: 3]

Conversation requires integration of information from faces and voices to fully understand the speaker's message. To detect auditory-visual asynchrony of speech, listeners must integrate visual movements of the face, particularly the mouth, with auditory speech information. Individuals with autism spectrum disorder may be less successful at such multisensory integration, despite their demonstrated preference for looking at the mouth region of a speaker. We showed participants (individuals with and without high-functioning autism (HFA) aged 8-19) a split-screen video of two identical individuals speaking side by side. Only one of the speakers was in synchrony with the corresponding audio track and synchrony switched between the two speakers every few seconds. Participants were asked to watch the video without further instructions (implicit condition) or to specifically watch the in-synch speaker (explicit condition). We recorded which part of the screen and face their eyes targeted. Both groups looked at the in-synch video significantly more with explicit instructions. However, participants with HFA looked at the in-synch video less than typically developing (TD) peers and did not increase their gaze time as much as TD participants in the explicit task. Importantly, the HFA group looked significantly less at the mouth than their TD peers, and significantly more at non-face regions of the image. There were no between-group differences for eye-directed gaze. Overall, individuals with HFA spend less time looking at the crucially important mouth region of the face during auditory-visual speech integration, which is maladaptive gaze behavior for this type of task.

Altered temporal profile of visual-auditory multisensory interactions in dyslexia

DOI:10.1007/s00221-005-2387-6

PMID:16028030

[Cited in this article: 1]

Recent studies have demonstrated that dyslexia is associated with deficits in the temporal encoding of sensory information. While most previous studies have focused on information processing within a single sensory modality, it is clear that the deficits seen in dyslexia span multiple sensory systems. Surprisingly, although the development of linguistic proficiency involves the rapid and accurate integration of auditory and visual cues, the capacity of dyslexic individuals to integrate information between the different senses has not been systematically examined. To test this, we studied the effects of task-irrelevant auditory information on the performance of a visual temporal-order-judgment (TOJ) task. Dyslexic subjects’ performance differed significantly from that of control subjects, specifically in that they integrated the auditory and visual information over longer temporal intervals. Such a result suggests an extended temporal “window” for binding visual and auditory cues in dyslexic individuals. The potential deleterious effects of this finding for rapid multisensory processes such as reading are discussed.

The weak coherence account: Detail-focused cognitive style in autism spectrum disorders

DOI:10.1007/s10803-005-0039-0

PMID:16450045

[Cited in this article: 1]

“Weak central coherence” refers to the detail-focused processing style proposed to characterise autism spectrum disorders (ASD). The original suggestion of a core deficit in central processing resulting in failure to extract global form/meaning, has been challenged in three ways. First, it may represent an outcome of superiority in local processing. Second, it may be a processing bias, rather than deficit. Third, weak coherence may occur alongside, rather than explain, deficits in social cognition. A review of over 50 empirical studies of coherence suggests robust findings of local bias in ASD, with mixed findings regarding weak global processing. Local bias appears not to be a mere side-effect of executive dysfunction, and may be independent of theory of mind deficits. Possible computational and neural models are discussed.

Binding of sights and sounds: Age-related changes in multisensory temporal processing

DOI:10.1016/j.neuropsychologia.2010.11.041

PMID:21134385

[Cited in this article: 1]

We live in a multisensory world and one of the challenges the brain is faced with is deciding what information belongs together. Our ability to make assumptions about the relatedness of multisensory stimuli is partly based on their temporal and spatial relationships. Stimuli that are proximal in time and space are likely to be bound together by the brain and ascribed to a common external event. Using this framework we can describe multisensory processes in the context of spatial and temporal filters or windows that compute the probability of the relatedness of stimuli. Whereas numerous studies have examined the characteristics of these multisensory filters in adults and discrepancies in window size have been reported between infants and adults, virtually nothing is known about multisensory temporal processing in childhood. To examine this, we compared the ability of 10 and 11 year olds and adults to detect audiovisual temporal asynchrony. Findings revealed striking and asymmetric age-related differences. Whereas children were able to identify asynchrony as readily as adults when visual stimuli preceded auditory cues, significant group differences were identified at moderately long stimulus onset asynchronies (150-350 ms) where the auditory stimulus was first. Results suggest that changes in audiovisual temporal perception extend beyond the first decade of life. In addition to furthering our understanding of basic multisensory developmental processes, these findings have implications on disorders (e.g., autism, dyslexia) in which emerging evidence suggests alterations in multisensory temporal function. Copyright 2010 Elsevier Ltd. All rights reserved.

Developmental changes in the multisensory temporal binding window persist into adolescence

DOI:10.1111/j.1467-7687.2012.01171.x

PMID:4013750

[Cited in this article: 2]

We live in a world rich in sensory information, and consequently the brain is challenged with deciphering which cues from the various sensory modalities belong together. Determinations regarding the relatedness of sensory information appear to be based, at least in part, on the spatial and temporal relationships between the stimuli. Stimuli that are presented in close spatial and temporal correspondence are more likely to be associated with one another and thus “bound” into a single perceptual entity. While there is a robust literature delineating behavioral changes in perception induced by multisensory stimuli, maturational changes in multisensory processing, particularly in the temporal realm, are poorly understood. The current study examines the developmental progression of multisensory temporal function by analyzing responses on an audiovisual simultaneity judgment task in 6- to 23-year-old participants. The overarching hypothesis for the study was that multisensory temporal function will mature with increasing age, with the developmental trajectory for this change being the primary point of inquiry. Results indeed reveal an age-dependent decrease in the size of the “multisensory temporal binding window”, the temporal interval within which multisensory stimuli are likely to be perceptually bound, with changes occurring over a surprisingly protracted time course that extends into adolescence.

The role of the posterior superior temporal sulcus in audiovisual processing

DOI:10.1093/cercor/bhn007

PMID:2536697

[Cited in this article: 1]

In this study we investigate previous claims that a region in the left posterior superior temporal sulcus (pSTS) is more activated by audiovisual than unimodal processing. First, we compare audiovisual to visual-visual and auditory-auditory conceptual matching using auditory or visual object names that are paired with pictures of objects or their environmental sounds. Second, we compare congruent and incongruent audiovisual trials when presentation is simultaneous or sequential. Third, we compare audiovisual stimuli that are either verbal (auditory and visual words) or nonverbal (pictures of objects and their associated sounds). The results demonstrate that, when task, attention, and stimuli are controlled, pSTS activation for audiovisual conceptual matching is 1) identical to that observed for intramodal conceptual matching, 2) greater for incongruent than congruent trials when auditory and visual stimuli are simultaneously presented, and 3) identical for verbal and nonverbal stimuli. These results are not consistent with previous claims that pSTS activation reflects the active formation of an integrated audiovisual representation. After a discussion of the stimulus and task factors that modulate activation, we conclude that, when stimulus input, task, and attention are controlled, pSTS is part of a distributed set of regions involved in conceptual matching, irrespective of whether the stimuli are audiovisual, auditory-auditory or visual-visual.

Altered auditory and multisensory temporal processing in autism spectrum disorders

DOI:10.3389/fnint.2010.00129

PMID:3024004

[Cited in this article: 6]

Autism spectrum disorders (ASD) are characterized by deficits in social reciprocity and communication, as well as by repetitive behaviors and restricted interests. Unusual responses to sensory input and disruptions in the processing of both unisensory and multisensory stimuli also have been reported frequently. However, the specific aspects of sensory processing that are disrupted in ASD have yet to be fully elucidated. Recent published work has shown that children with ASD can integrate low-level audiovisual stimuli, but do so over an extended range of time when compared with typically developing (TD) children. However, the possible contributions of altered unisensory temporal processes to the demonstrated changes in multisensory function are yet unknown. In the current study, unisensory temporal acuity was measured by determining individual thresholds on visual and auditory temporal order judgment (TOJ) tasks, and multisensory temporal function was assessed through a cross-modal version of the TOJ task. Whereas no differences in thresholds for the visual TOJ task were seen between children with ASD and TD, thresholds were higher in ASD on the auditory TOJ task, providing preliminary evidence for impairment in auditory temporal processing. On the multisensory TOJ task, children with ASD showed performance improvements over a wider range of temporal intervals than TD children, reinforcing prior work showing an extended temporal window of multisensory integration in ASD. These findings contribute to a better understanding of basic sensory processing differences, which may be critical for understanding more complex social and cognitive deficits in ASD, and ultimately may contribute to more effective diagnostic and interventional strategies.

Temporal order and processing acuity of visual, auditory, and tactile perception in developmentally dyslexic young adults

DOI:10.3758/CABN.1.4.394

PMID:12467091

[Cited in this article: 1]

We studied the temporal acuity of 16 developmentally dyslexic young adults in three perceptual modalities. The control group consisted of 16 age- and IQ-matched normal readers. Two methods were used. In the temporal order judgment (TOJ) method, the stimuli were spatially separate fingertip indentations in the tactile system, tone bursts of different pitches in audition, and light flashes in vision. Participants indicated which one of two stimuli appeared first. To test temporal processing acuity (TPA), the same 8-msec nonspeech stimuli were presented as two parallel sequences of three stimulus pulses. Participants indicated, without order judgments, whether the pulses of the two sequences were simultaneous or nonsimultaneous. The dyslexic readers were somewhat inferior to the normal readers in all six temporal acuity tasks on average. Thus, our results agreed with the existence of a pansensory temporal processing deficit associated with dyslexia in a language with shallow orthography (Finnish) and in well-educated adults. The dyslexic and normal readers' temporal acuities overlapped so much, however, that acuity deficits alone would not allow dyslexia diagnoses. It was irrelevant whether or not the acuity task required order judgments. The groups did not differ in the nontemporal aspects of our experiments. Correlations between temporal acuity and reading-related tasks suggested that temporal acuity is associated with phonological awareness.

The audiovisual temporal binding window narrows in early childhood

DOI:10.1111/cdev.12142

PMID:23888869

[Cited in this article: 1]

Binding is key in multisensory perception. This study investigated the audio-visual (A-V) temporal binding window in 4-, 5-, and 6-year-old children (total N = 120). Children watched a person uttering a syllable whose auditory and visual components were either temporally synchronized or desynchronized by 366, 500, or 666 ms. They were asked whether the voice and face went together (Experiment 1) or whether the desynchronized videos differed from the synchronized one (Experiment 2). Four-year-olds detected the 666-ms asynchrony, 5-year-olds detected the 666- and 500-ms asynchrony, and 6-year-olds detected all asynchronies. These results show that the A-V temporal binding window narrows slowly during early childhood and that it is still wider at 6 years of age than in older children and adults.

Audiovisual speech integration in autism spectrum disorders: ERP evidence for atypicalities in lexical-semantic processing

DOI:10.1002/aur.231

PMID:3586407

[Cited in this article: 1]

In typically developing (TD) individuals, behavioral and event-related potential (ERP) studies suggest that audiovisual (AV) integration enables faster and more efficient processing of speech. However, little is known about AV speech processing in individuals with autism spectrum disorders (ASD). This study examined ERP responses to spoken words to elucidate the effects of visual speech (the lip movements accompanying a spoken word) on the range of auditory speech processing stages from sound onset detection to semantic integration. The study also included an AV condition, which paired spoken words with a dynamic scrambled face in order to highlight AV effects specific to visual speech. Fourteen adolescent boys with ASD (15–17 years old) and 14 age- and verbal IQ-matched TD boys participated. The ERP of the TD group showed a pattern and topography of AV interaction effects consistent with activity within the superior temporal plane, with two dissociable effects over frontocentral and centroparietal regions. The posterior effect (200–300 ms interval) was specifically sensitive to lip movements in TD boys, and no AV modulation was observed in this region for the ASD group. Moreover, the magnitude of the posterior AV effect to visual speech correlated inversely with ASD symptomatology. In addition, the ASD boys showed an unexpected effect (P2 time window) over the frontocentral region (pooled electrodes F3, Fz, F4, FC1, FC2, FC3, FC4), which was sensitive to scrambled face stimuli. These results suggest that the neural networks facilitating processing of spoken words by visual speech are altered in individuals with ASD. Autism Res 2011, 4: xxx–xxx. © 2011 International Society for Autism Research, Wiley Periodicals, Inc.

Atypical rapid audio-visual temporal recalibration in autism spectrum disorders

DOI:10.1002/aur.1633

PMID:27156926

[Cited in this article: 4]

Scientific Abstract: Changes in sensory and multisensory function are increasingly recognized as a common phenotypic characteristic of Autism Spectrum Disorders (ASD). Furthermore, much recent evidence suggests that sensory disturbances likely play an important role in contributing to social communication weaknesses, one of the core diagnostic features of ASD. An established sensory disturbance observed in ASD is reduced audiovisual temporal acuity. In the current study, we substantially extend these explorations of multisensory temporal function within the framework that an inability to rapidly recalibrate to changes in audiovisual temporal relations may play an important and under-recognized role in ASD. In the paradigm, we present ASD and typically developing (TD) children and adolescents with asynchronous audiovisual stimuli of varying levels of complexity and ask them to perform a simultaneity judgment (SJ). In the critical analysis, we test audiovisual temporal processing on trial t as a condition of trial t − 1. The results demonstrate that individuals with ASD fail to rapidly recalibrate to audiovisual asynchronies in an equivalent manner to their TD counterparts for simple and non-linguistic stimuli (i.e., flashes and beeps, hand-held tools), but exhibit comparable rapid recalibration for speech stimuli. These results are discussed in terms of prior work showing a speech-specific deficit in audiovisual temporal function in ASD, and in light of current theories of autism focusing on sensory noise and stability of perceptual representations.

Lay Abstract: The integration of information across the different sensory modalities constitutes a fundamental step toward building a cohesive and comprehensive perceptual representation of the world. This integration and perceptual “binding” is highly dependent on the temporal structure of the multisensory cues. In ASD, multisensory temporal acuity has been found to be impaired, most notably for the integration of audiovisual speech stimuli, a finding that is confirmed in the current study. In addition, we show a striking difference in how those with ASD recalibrate their audiovisual temporal judgments based on prior trial history relative to those who are typically-developing. Most notable is the finding that whereas recalibration for speech stimuli fails to differ between ASD and TD participants, those with ASD fail to recalibrate when making judgments concerning non-speech audiovisual stimuli. These results not only expand our understanding of multisensory temporal function in ASD, but also have important implications for models suggesting changes in predictive coding and sensory priors in autism. Autism Res 2016. © 2016 International Society for Autism Research, Wiley Periodicals, Inc.

Auditory processing in autism spectrum disorder: A review

DOI:10.1016/j.neubiorev.2011.11.008

PMID:22155284

[Cited in this article: 1]

For individuals with autism spectrum disorder or 'ASD' the ability to accurately process and interpret auditory information is often difficult. Here we review behavioural, neurophysiological and imaging literature pertaining to this field with the aim of providing a comprehensive account of auditory processing in ASD, and thus an effective tool to aid further research. Literature was sourced from peer-reviewed journals published over the last two decades which best represent research conducted in these areas. Findings show substantial evidence for atypical processing of auditory information in ASD at behavioural and neural levels. Abnormalities are diverse, ranging from atypical perception of various low-level perceptual features (i.e. pitch, loudness) to processing of more complex auditory information such as prosody. Trends across studies suggest auditory processing impairments in ASD are most likely to present during processing of complex auditory information and are more severe for speech than for non-speech stimuli. The interpretation of these findings with respect to various cognitive accounts of ASD is discussed and suggestions offered for further research. Copyright 2011 Elsevier Ltd. All rights reserved.

Temporal synchrony detection and associations with language in young children with ASD

DOI:10.1155/2014/678346

PMID:4295130

[Cited in this article: 1]

Temporally synchronous audio-visual stimuli serve to recruit attention and enhance learning, including language learning in infants. Although few studies have examined this effect on children with autism, it appears that the ability to detect temporal synchrony between auditory and visual stimuli may be impaired, particularly given social-linguistic stimuli delivered via oral movement and spoken language pairings. However, children with autism can detect audio-visual synchrony given nonsocial stimuli (objects dropping and their corresponding sounds). We tested whether preschool children with autism could detect audio-visual synchrony given video recordings of linguistic stimuli paired with movement of related toys in the absence of faces. As a group, children with autism demonstrated the ability to detect audio-visual synchrony. Further, the amount of time they attended to the synchronous condition was positively correlated with receptive language. Findings suggest that object manipulations may enhance multisensory processing in linguistic contexts. Moreover, associations between synchrony detection and language development suggest that better processing of multisensory stimuli may guide and direct attention to communicative events thus enhancing linguistic development.

When the world becomes ‘too real’: A Bayesian explanation of autistic perception

DOI:10.1016/j.tics.2012.08.009

PMID:22959875

[Cited in this article: 2]

Perceptual experience is influenced both by incoming sensory information and prior knowledge about the world, a concept recently formalised within Bayesian decision theory. We propose that Bayesian models can be applied to autism - a neurodevelopmental condition with atypicalities in sensation and perception - to pinpoint fundamental differences in perceptual mechanisms. We suggest specifically that attenuated Bayesian priors - 'hypo-priors' - may be responsible for the unique perceptual experience of autistic people, leading to a tendency to perceive the world more accurately rather than modulated by prior experience. In this account, we consider how hypo-priors might explain key features of autism - the broad range of sensory and other non-social atypicalities - in addition to the phenomenological differences in autistic perception.

Neural correlates of multisensory perceptual learning

DOI:10.1523/JNEUROSCI.6138-11.2012

PMID:3366559

[Cited in this article: 1]

The brain's ability to bind incoming auditory and visual stimuli depends critically on the temporal structure of this information. Specifically, there exists a temporal window of audiovisual integration within which stimuli are highly likely to be perceived as part of the same environmental event. Several studies have described the temporal bounds of this window, but few have investigated its malleability. Recently, our laboratory has demonstrated that a perceptual training paradigm is capable of eliciting a 40% narrowing in the width of this window that is stable for at least 1 week after cessation of training. In the current study, we sought to reveal the neural substrates of these changes. Eleven human subjects completed an audiovisual simultaneity judgment training paradigm, immediately before and after which they performed the same task during an event-related 3T fMRI session. The posterior superior temporal sulcus (pSTS) and areas of auditory and visual cortex exhibited robust BOLD decreases following training, and resting state and effective connectivity analyses revealed significant increases in coupling among these cortices after training. These results provide the first evidence of the neural correlates underlying changes in multisensory temporal binding likely representing the substrate for a multisensory temporal binding window.

Perceptual training narrows the temporal window of multisensory binding

DOI:10.1523/JNEUROSCI.3501-09.2009

PMID:2771316

[Cited in this article: 1]

The brain's ability to bind incoming auditory and visual stimuli depends critically on the temporal structure of this information. Specifically, there exists a temporal window of audiovisual integration within which stimuli are highly likely to be bound together and perceived as part of the same environmental event. Several studies have described the temporal bounds of this window, but few have investigated its malleability. Here, the plasticity in the size of this temporal window was investigated using a perceptual paradigm in which participants were given feedback during a two-alternative forced choice (2-AFC) audiovisual simultaneity judgment task. Training resulted in a marked (i.e., approximately 40%) narrowing in the size of the window. To rule out the possibility that this narrowing was the result of changes in cognitive biases, a second experiment using a two-interval forced choice (2-IFC) paradigm was undertaken during which participants were instructed to identify a simultaneously presented audiovisual pair presented within one of two intervals. The 2-IFC paradigm resulted in a narrowing that was similar in both degree and dynamics to that using the 2-AFC approach. Together, these results illustrate that different methods of multisensory perceptual training can result in substantial alterations in the circuits underlying the perception of audiovisual simultaneity. These findings suggest a high degree of flexibility in multisensory temporal processing and have important implications for interventional strategies that may be used to ameliorate clinical conditions in which multisensory temporal function may be impaired.

Disordered connectivity in the autistic brain: Challenges for the ‘new psychophysiology’

DOI:10.1016/j.ijpsycho.2006.03.012

PMID:16820239

[Cited in this article: 1]

In 2002, we published a paper [Brock, J., Brown, C., Boucher, J., Rippon, G., 2002. The temporal binding deficit hypothesis of autism. Development and Psychopathology 14(2), 209–224] highlighting the parallels between the psychological model of ‘central coherence’ in information processing [Frith, U., 1989. Autism: Explaining the Enigma. Blackwell, Oxford] and the neuroscience model of neural integration or ‘temporal binding’. We proposed that autism is associated with abnormalities of information integration that is caused by a reduction in the connectivity between specialised local neural networks in the brain and possible overconnectivity within the isolated individual neural assemblies. The current paper updates this model, providing a summary of theoretical and empirical advances in research implicating disordered connectivity in autism. This is in the context of changes in the approach to the core psychological deficits in autism, of greater emphasis on ‘interactive specialisation’ and the resultant stress on early and/or low-level deficits and their cascading effects on the developing brain [Johnson, M.H., Halit, H., Grice, S.J., Karmiloff-Smith, A., 2002. Neuroimaging of typical and atypical development: a perspective from multiple levels of analysis. Development and Psychopathology 14, 521–536]. We also highlight recent developments in the measurement and modelling of connectivity, particularly in the emerging ability to track the temporal dynamics of the brain using electroencephalography (EEG) and magnetoencephalography (MEG) and to investigate the signal characteristics of this activity. This advance could be particularly pertinent in testing an emerging model of effective connectivity based on the balance between excitatory and inhibitory cortical activity [Rubenstein, J.L., Merzenich M.M., 2003. Model of autism: increased ratio of excitation/inhibition in key neural systems. Genes, Brain and Behavior 2, 255–267; Brown, C., Gruber, T., Rippon, G., Brock, J., Boucher, J., 2005. Gamma abnormalities during perception of illusory figures in autism. Cortex 41, 364–376]. Finally, we note that the consequence of this convergence of research developments not only enables a greater understanding of autism but also has implications for prevention and remediation.

Model of autism: Increased ratio of excitation/inhibition in key neural systems

DOI:10.1034/j.1601-183X.2003.00037.x

PMID:14606691

[Cited in this article: 1]

Autism is a severe neurobehavioral syndrome, arising largely as an inherited disorder, which can arise from several diseases. Despite recent advances in identifying some genes that can cause autism, its underlying neurological mechanisms are uncertain. Autism is best conceptualized by considering the neural systems that may be defective in autistic individuals. Recent advances in understanding neural systems that process sensory information, various types of memories and social and emotional behaviors are reviewed and compared with known abnormalities in autism. Then, specific genetic abnormalities that are linked with autism are examined. Synthesis of this information leads to a model that postulates that some forms of autism are caused by an increased ratio of excitation/inhibition in sensory, mnemonic, social and emotional systems. The model further postulates that the increased ratio of excitation/inhibition can be caused by combinatorial effects of genetic and environmental variables that impinge upon a given neural system. Furthermore, the model suggests potential therapeutic interventions.

Multisensory processing in children with autism: High-density electrical mapping of auditory-somatosensory integration

DOI:10.1002/aur.152

PMID:20730775

[Cited in this article: 1]

Successful integration of signals from the various sensory systems is crucial for normal sensory–perceptual functioning, allowing for the perception of coherent objects rather than a disconnected cluster of fragmented features. Several prominent theories of autism suggest that automatic integration is impaired in this population, but there have been few empirical tests of this thesis. A standard electrophysiological metric of multisensory integration (MSI) was used to test the integrity of auditory–somatosensory integration in children with autism (N=17, aged 6–16 years), compared to age- and IQ-matched typically developing (TD) children. High-density electrophysiology was recorded while participants were presented with either auditory or somatosensory stimuli alone (unisensory conditions), or as a combined auditory–somatosensory stimulus (multisensory condition), in randomized order. Participants watched a silent movie during testing, ignoring concurrent stimulation. Significant differences between neural responses to the multisensory auditory–somatosensory stimulus and the unisensory stimuli (the sum of the responses to the auditory and somatosensory stimuli when presented alone) served as the dependent measure. The data revealed group differences in the integration of auditory and somatosensory information that appeared at around 175 ms, and were characterized by the presence of MSI for the TD but not the autism spectrum disorder (ASD) children. Overall, MSI was less extensive in the ASD group. These findings are discussed within the framework of current knowledge of MSI in typical development as well as in relation to theories of ASD.

Effects of background noise on cortical encoding of speech in autism spectrum disorders

DOI:10.1007/s10803-009-0737-0

PMID:19353261

[Cited in this article: 1]

This study provides new evidence of deficient auditory cortical processing of speech in noise in autism spectrum disorders (ASD). Speech-evoked responses (~100–300 ms) in quiet and background noise were evaluated in typically-developing (TD) children and children with ASD. ASD responses showed delayed timing (both conditions) and reduced amplitudes (quiet) compared to TD responses. As expected, TD responses in noise were delayed and reduced compared to quiet responses. However, minimal quiet-to-noise response differences were found in children with ASD, presumably because quiet responses were already severely degraded. Moreover, ASD quiet responses resembled TD noise responses, implying that children with ASD process speech in quiet only as well as TD children do in background noise.

Visual illusion induced by sound

DOI:10.1016/S0926-6410(02)00069-1

PMID:12063138

[Cited in this article: 1]

We present the first cross-modal modification of visual perception which involves a phenomenological change in the quality—as opposed to a small, gradual, or quantitative change—of the percept of a non-ambiguous visual stimulus. We report a visual illusion which is induced by sound: when a single flash of light is accompanied by multiple auditory beeps, the single flash is perceived as multiple flashes. We present two experiments as well as several observations which establish that this alteration of the visual percept is due to cross-modal perceptual interactions as opposed to cognitive, attentional, or other origins. The results of the second experiment also reveal that the temporal window of these audio–visual interactions is approximately 100 ms.

Auditory temporal modulation of the visual Ternus effect: The influence of time interval

DOI:10.1007/s00221-010-2286-3

PMID:20473749

[Cited in this article: 1]

Research on multisensory interactions has shown that the perceived timing of a visual event can be captured by a temporally proximal sound. This effect has been termed ‘temporal ventriloquism effect.’ Using the Ternus display, we systematically investigated how auditory configurations modulate the visual apparent-motion percepts. The Ternus display involves a multielement stimulus that can induce either of two different percepts of apparent motion: ‘element motion’ or ‘group motion’. We found that two sounds presented in temporal proximity to, or synchronously with, the two visual frames, respectively, can shift the transitional threshold for visual apparent motion (Experiments 1 and 3). However, such effects were not evident with single-sound configurations (Experiment 2). A further experiment (Experiment 4) provided evidence that time interval information is an important factor for crossmodal interaction of audiovisual Ternus effect. The auditory interval was perceived as longer than the same physical visual interval in the sub-second range. Furthermore, the perceived audiovisual interval could be predicted by optimal integration of the visual and auditory intervals.

Interactions between the spatial and temporal stimulus factors that influence multisensory integration in human performance

DOI:10.1007/s00221-012-3072-1

PMID:3526341

[Cited in this article: 2]

In natural environments, human sensory systems work in a coordinated and integrated manner to perceive and respond to external events. Previous research has shown that the spatial and temporal relationships of sensory signals are paramount in determining how information is integrated across sensory modalities, but in ecologically plausible settings, these factors are not independent. In the current study, we provide a novel exploration of the impact on behavioral performance for systematic manipulations of the spatial location and temporal synchrony of a visual-auditory stimulus pair. Simple auditory and visual stimuli were presented across a range of spatial locations and stimulus onset asynchronies (SOAs), and participants performed both a spatial localization and simultaneity judgment task. Response times in localizing paired visual-auditory stimuli were slower in the periphery and at larger SOAs, but most importantly, an interaction was found between the two factors, in which the effect of SOA was greater in peripheral as opposed to central locations. Simultaneity judgments also revealed a novel interaction between space and time: individuals were more likely to judge stimuli as synchronous when occurring in the periphery at large SOAs. The results of this study provide novel insights into (a) how the speed of spatial localization of an audiovisual stimulus is affected by location and temporal coincidence and the interaction between these two factors and (b) how the location of a multisensory stimulus impacts judgments concerning the temporal relationship of the paired stimuli. These findings provide strong evidence for a complex interdependency between spatial location and temporal structure in determining the ultimate behavioral and perceptual outcome associated with a paired multisensory (i.e., visual-auditory) stimulus.

Keeping time in the brain: Autism spectrum disorder and audiovisual temporal processing

DOI:10.1002/aur.1566

PMID:26402725

[Cited in this article: 2]

A growing area of interest and relevance in the study of autism spectrum disorder (ASD) focuses on the relationship between multisensory temporal function and the behavioral, perceptual, and cognitive impairments observed in ASD. Atypical sensory processing is becoming increasingly recognized as a core component of autism, with evidence of atypical processing across a number of sensory modalities. These deviations from typical processing underscore the value of interpreting ASD within a multisensory framework. Furthermore, converging evidence illustrates that these differences in audiovisual processing may be specifically related to temporal processing. This review seeks to bridge the connection between temporal processing and audiovisual perception, and to elaborate on emerging data showing differences in audiovisual temporal function in autism. We also discuss the consequence of such changes, the specific impact on the processing of different classes of audiovisual stimuli (e.g. speech vs. nonspeech, etc.), and the presumptive brain processes and networks underlying audiovisual temporal integration. Finally, possible downstream behavioral implications, and possible remediation strategies are outlined. © 2015 International Society for Autism Research, Wiley Periodicals, Inc.

Atypical multisensory integration in Autism Spectrum Disorders: Cascading impacts of altered temporal processing

DOI:10.1163/22134808-000S0015

[Cited in this article: 2]

Background: Accounts of unusual responses to sensory stimuli abound in the ASD literature (Iarocci and McDonald, 2006). Reports of sensory disturbance have motivated modern theories proposing children with ASD have difficulty integrating information to derive meaning from their experiences, potentially due to atypical temporal binding (Frith and Happé, 1994; Brock et al., 2002). Recent research...

Multisensory temporal integration in autism spectrum disorders

DOI:10.1523/JNEUROSCI.3615-13.2014

PMID:3891950

[Cited in this article: 5]

The new DSM-5 diagnostic criteria for autism spectrum disorders (ASDs) include sensory disturbances in addition to the well-established language, communication, and social deficits. One sensory disturbance seen in ASD is an impaired ability to integrate multisensory information into a unified percept. This may arise from an underlying impairment in which individuals with ASD have difficulty perceiving the temporal relationship between cross-modal inputs, an important cue for multisensory integration. Such impairments in multisensory processing may cascade into higher-level deficits, impairing day-to-day functioning on tasks, such as speech perception. To investigate multisensory temporal processing deficits in ASD and their links to speech processing, the current study mapped performance on a number of multisensory temporal tasks (with both simple and complex stimuli) onto the ability of individuals with ASD to perceptually bind audiovisual speech signals. High-functioning children with ASD were compared with a group of typically developing children. Performance on the multisensory temporal tasks varied with stimulus complexity for both groups; less precise temporal processing was observed with increasing stimulus complexity. Notably, individuals with ASD showed a speech-specific deficit in multisensory temporal processing. Most importantly, the strength of perceptual binding of audiovisual speech observed in individuals with ASD was strongly related to their low-level multisensory temporal processing abilities. Collectively, the results represent the first to illustrate links between multisensory temporal function and speech processing in ASD, strongly suggesting that deficits in low-level sensory processing may cascade into higher-order domains, such as language and communication.

Evidence for diminished multisensory integration in autism spectrum disorders

DOI:10.1007/s10803-014-2179-6

PMID:25022248

[Cited in this article: 2]

Individuals with autism spectrum disorders (ASD) exhibit alterations in sensory processing, including changes in the integration of information across the different sensory modalities. In the current study, we used the sound-induced flash illusion to assess multisensory integration in children with ASD and typically-developing (TD) controls. Thirty-one children with ASD and 31 age- and IQ-matched TD children (average age = 12 years) were presented with simple visual (i.e., flash) and auditory (i.e., beep) stimuli of varying number. In illusory conditions, a single flash was presented with 2–4 beeps. In TD children, these conditions generally result in the perception of multiple flashes, implying a perceptual fusion across vision and audition. In the present study, children with ASD were significantly less likely to perceive the illusion relative to TD controls, suggesting that multisensory integration and cross-modal binding may be weaker in some children with ASD. These results are discussed in the context of previous findings for multisensory integration in ASD and future directions for research.

Discrete neural substrates underlie complementary audiovisual speech integration processes

DOI:10.1016/j.neuroimage.2010.12.063

PMID:3057325

[Cited in this article: 1]