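1 Introduction

Spoken word production is the process of expressing thoughts through the articulatory organs (Zhang & Yang, 2003b). It typically proceeds through three stages: conceptualization, formulation, and articulation. The speaker first determines, at the conceptual level, what is to be expressed. The conceptual content is then organized and a corresponding articulatory motor program is built, through lexical selection, morphophonological encoding, phonological encoding, and phonetic encoding. Finally, the articulatory target is output as sound through movement of the vocal folds (Levelt, Roelofs, & Meyer, 1999; Roelofs, 1997). The processing unit at the phonological encoding stage is one of the central debates in speech production research.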

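1.1 Cross-linguistic differences in phonological encoding units in spoken word production

Studies indicate that in Indo-European languages the phoneme is the unit retrieved first during phonological encoding. Dell (1986) found that speech errors in alphabetic languages mainly involve phoneme omissions or substitutions. Using the implicit priming paradigm, researchers found that naming a sequence of target words sharing the initial phoneme (e.g., cible-cintre-cerf) was faster than naming words with different initial phonemes, an initial-phoneme facilitation effect (Alario, Perre, Castel, & Ziegler, 2007; Damian & Bowers, 2003; Jacobs & Dell, 2014; Meyer, 1991). In an adjective-noun naming task, Damian and Dumay (2007) found that participants named word pairs sharing an initial phoneme (e.g., green goat) faster than pairs that did not (e.g., green rug). Initial-phoneme facilitation has also been found with the picture-word interference paradigm (Damian & Martin, 1999) and the masked priming paradigm (Forster & Davis, 1991; Schiller, 2008).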

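In Chinese, however, studies indicate that the phonological encoding unit is the syllable rather than the phoneme. Speech errors in Chinese are mainly syllable exchange errors (Chen, 2000). Using the implicit priming paradigm, Chen, Chen, and Dell (2002) found shorter naming latencies in the syllable-homogeneous than in the syllable-heterogeneous condition, but no significant difference between the phoneme-homogeneous and phoneme-heterogeneous conditions. The same pattern was found with the masked priming paradigm (Chen, O’Seaghdha, & Chen, 2016) and the picture-word interference paradigm (Zhang & Yang, 2005). Combining picture-word interference with different tasks (immediate naming, delayed naming, and delayed naming with articulatory suppression), Yue and Zhang (2015) found that the syllable facilitation effect arises at the phonological encoding stage and is stable and reliable (Cohen's d = 0.85 > 0.8; Cohen, 1988). Together, these results indicate that the syllable is the unit retrieved first during phonological encoding in Chinese spoken word production.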

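To account for these cross-linguistic differences, O’Seaghdha, Chen, and Chen (2010) proposed the proximate units principle for phonological encoding. Proximate units are the phonological units processed first after the lexical morpheme has been activated, and the first-selected unit differs across languages: it is the phoneme in Indo-European languages such as English or Dutch, and the syllable in Chinese. In Indo-European languages, speakers select phonemes, syllabify them using metrical information, and then retrieve syllables from the mental syllabary to prepare articulatory motor programs. In Chinese, speakers select syllables, decompose them into phonemes or segments (phonological encoding), prepare articulatory motor programs (phonetic encoding), and finally articulate the output (articulation) (see also Roelofs, 2015).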

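These cross-linguistic differences are closely tied to the properties of each language. In Chinese, the syllable is the smallest natural unit of pronunciation constrained by the semantics and structure of the language, and it plays an important role in speech production (Zhang, 2005). First, Chinese has far fewer syllables than alphabetic languages (Zhang & Yang, 2005). Second, syllable boundaries in Chinese are relatively clear, and the resyllabification found in alphabetic languages does not occur. For Chinese, therefore, the more economical and efficient strategy is to store the syllables of lexical entries in long-term memory and retrieve them directly early in phonological encoding. In Indo-European languages, by contrast, the number of syllables is very large and resyllabification is frequent during articulation (Levelt et al., 1999), so speakers first retrieve phonemes during phonological encoding.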

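Researchers have also examined this question with event-related potentials (ERPs). Using an initial-phoneme repetition paradigm (e.g., “黄盒子”, in which the initial phoneme is repeated, versus “绿盒子”, in which it is not), Qu, Damian, and Kazanina (2012) found an initial-phoneme repetition effect 200-300 ms after picture onset, suggesting that phonemic information is also activated during phonological encoding (see also Yu, Mo, & Mo, 2014). Using a picture-picture interference paradigm and delayed picture naming, Wang, Wong, Wang, and Chen (2017) found syllable effects 200-400 ms (phonological encoding) and 400-600 ms (phonetic encoding) after target onset. Using masked priming, Zhang and Damian (2019) found that the syllable-related condition elicited smaller ERP amplitudes 300-400 ms after target onset (phonological encoding). Although ERPs can separate the time courses of different experimental effects, these studies did not examine neural oscillations during speech production. Conventional ERP analysis averages neural signals across trials of the same condition (Rugg & Coles, 1995), and this averaging attenuates or even eliminates oscillatory activity that is not phase-locked (Bidelman, 2015). The present study therefore used EEG time-frequency analysis to examine the neural oscillations associated with phonological encoding in native Chinese speakers, focusing on the oscillations corresponding to syllable and phoneme effects.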

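1.2 Theta-band (4-8 Hz) neural oscillations and syllable processing

Neural oscillations are regarded as rhythmic responses of neurons in the brain and are divided into the delta (δ, < 4 Hz), theta (θ, 4-8 Hz), alpha (α, 8-13 Hz), beta (β, 13-30 Hz), and gamma (γ, > 30 Hz) bands (Ward, 2003). They are closely linked to cognitive processes such as attention, memory, and decision making (Fell & Axmacher, 2011; Jensen, Kaiser, & Lachaux, 2007; Klimesch, 2012; Siegel, Donner, & Engel, 2012). Language processing likewise elicits oscillations in specific frequency bands (Giraud & Poeppel, 2012; Lewis, Wang, & Bastiaansen, 2015). For example, beta-band power decreases significantly when participants process material containing syntactic errors (Bastiaansen, Magyari, & Hagoort, 2009).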

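Regarding the neurocognitive mechanisms of speech processing, the asymmetric sampling in time (AST) theory proposes that the auditory ventral pathway (superior temporal gyrus, STG; superior temporal sulcus, STS; inferior temporal sulcus, ITS) further divides, according to the acoustic properties of the stimulus, into two parallel processing streams that extract syllable-level and phoneme-level information, respectively (Poeppel, 2003). In continuous speech, syllabic information changes more slowly over time than phonemic information, and low-frequency theta activity indexes syllable processing (Doelling, Arnal, Ghitza, & Poeppel, 2014; Howard & Poeppel, 2012; Luo & Poeppel, 2007; Peelle, Gross, & Davis, 2013). Although neuronal firing rates do not map directly onto the syllable rate of the speech signal, this still suggests that theta-band oscillations are closely related to syllable processing.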

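First, the phase of theta activity tracks syllables (Ghinst et al., 2016; Gross et al., 2013; Molinaro, Lizarazu, Lallier, Bourguignon, & Carreiras, 2016). Pefkou, Arnal, Fontolan, and Giraud (2017) applied envelope analysis to naturally produced sentence recordings to obtain the number of syllables in each recording, divided this count by the recording's total duration to obtain its syllable rate, and then time-compressed the recordings to create stimuli at different syllable rates. During the experiment, sentences at different syllable rates were played while EEG was recorded. Regression analysis showed that theta phase coherence decreased as syllable rate increased: the faster a sentence was played and the harder its syllables were to track, the poorer the theta phase coherence. In addition, theta phase coherence increases when participants listen to continuous syllable sequences (Power, Mead, Barnes, & Goswami, 2012).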

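Second, theta power reflects syllable recognition. In a cross-linguistic study, Peña and Melloni (2012) used spoken sentences in Japanese, Spanish, and Italian to examine the dynamics of language comprehension in native Spanish and native Italian speakers. For both groups, theta power was significantly higher for forward-played than for backward-played sentences, and the same forward-backward pattern appeared when participants listened to material in a non-native language. Forward and backward speech are closely matched in basic acoustic properties, but reversal causes severe phonological distortions that make the material unintelligible (Binder et al., 2000; Gross et al., 2013; Saur et al., 2010). Peña and Melloni (2012) argued that temporal reversal destroys the phonological structure of each word and makes it difficult to segment words into syllables, which weakens theta-band oscillations. In a study of Chinese, forward-played syllable stimuli likewise elicited stronger theta power than backward-played stimuli (Ding, Melloni, Zhang, Tian, & Poeppel, 2015).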

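In sum, behavioral and ERP studies indicate that the syllable plays an important role in Chinese spoken word production, and studies of speech perception and comprehension indicate that theta-band activity is closely related to syllable processing. No study has yet examined whether the syllable priming effect in Chinese speech production is associated with oscillations in a specific frequency band, and EEG time-frequency analysis can shed further light on how syllables are processed during Chinese speech production. In the present study we used the masked priming paradigm and manipulated the phonological relation between the prime and the target picture name (syllable related vs. syllable unrelated; phoneme related vs. phoneme unrelated). Participants named pictures while EEG was recorded, and time-frequency analysis of the EEG was used to examine the neural oscillations accompanying syllable and phoneme effects. In masked priming the prime is presented very briefly (about 50 ms), so it is typically processed subliminally, which excludes the influence of extraneous factors such as naming strategies (Chen et al., 2016; You, Zhang, & Verdonschot, 2012). Given the close link between theta activity and syllable processing (Ghinst et al., 2016; Gross et al., 2013; Power et al., 2012; Peña & Melloni, 2012), we expected a significant syllable priming effect but no significant phoneme priming effect, and accordingly a significant theta power difference only between the syllable-related and syllable-unrelated conditions.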

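2 Method

2.1 Participants

Twenty-three undergraduate and graduate students (11 male; mean age 22 years) participated. All were right-handed native Chinese speakers who spoke standard Mandarin, had normal or corrected-to-normal vision, and had no history of psychiatric illness. Participants read and signed an informed consent form before the experiment and were paid afterwards.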

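2.2 Materials

Sixty-four black-and-white line drawings were selected from the Chinese picture database of Zhang and Yang (2003a): 60 for the formal experiment and 4 for practice trials. Each picture name was a disyllabic noun, and its first character was matched with primes in the four conditions. For example, for the picture name “鼻子” (/bi2zi5/, nose), the syllable-related prime was “彼” (/bi3/), which shares the full syllable with the first character of the picture name but differs in tone; the phoneme-related prime was “柏” (/bai3/), which shares only the initial phoneme and differs in tone. Prime frequency (Cai & Brysbaert, 2010) did not differ significantly between the syllable-related and phoneme-related conditions (t = 0.027, p = 0.978). The syllable-unrelated and phoneme-unrelated conditions were created by shuffling the syllable-related and phoneme-related primes and re-pairing them with the pictures. A check confirmed that primes and target names in the two unrelated conditions had no phonological overlap. In all four conditions, primes and picture names were semantically and orthographically unrelated.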

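2.3 Design

A 2 × 2 × 2 within-participant design was used, with relation type (syllable, phoneme), relatedness (related, unrelated), and repetition (first, second) as factors. Each of the 60 pictures was paired with four types of prime, giving 240 trials per block. The block was presented twice, so each participant completed 480 trials. Trial order was pseudo-randomized and differed for every participant and block, with the constraints that presentations of the same picture were separated by at least five trials and that pictures whose names share an initial phoneme never appeared on consecutive trials.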
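The pseudo-randomization constraints described above can be illustrated with a short sketch. This is not the authors' E-Prime procedure: the function name `make_trial_order`, the tuple layout, and the greedy-with-restart strategy are all assumptions chosen for illustration.

```python
import random


def make_trial_order(trials, min_gap=5, max_restarts=10000, seed=None):
    """Return a pseudo-random order of `trials` (hypothetical helper).

    Each trial is a (picture, initial_phoneme, condition) tuple.
    Constraints: a picture may not reappear within the next `min_gap`
    trials, and consecutive trials may not share an initial phoneme.
    """
    rng = random.Random(seed)
    for _ in range(max_restarts):
        remaining = list(trials)
        order = []
        while remaining:
            recent = order[-min_gap:] if min_gap > 0 else []
            recent_pictures = {t[0] for t in recent}
            last_phoneme = order[-1][1] if order else None
            candidates = [
                t for t in remaining
                if t[0] not in recent_pictures and t[1] != last_phoneme
            ]
            if not candidates:
                break  # dead end: discard this attempt and start over
            pick = rng.choice(candidates)
            order.append(pick)
            remaining.remove(pick)
        if not remaining:
            return order
    raise RuntimeError("no valid order found; relax the constraints")
```

A greedy draw with restarts is used here only because it is easy to verify; any procedure that satisfies the two constraints would serve the same purpose.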

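2.4 Apparatus

The experiment was programmed in E-Prime 2.0 and run on a computer with a PST SRBOX response box and a microphone. Pictures were presented at the center of the screen, and vocal responses were registered by the microphone connected to the response box. Stimulus presentation and the recording of response latencies were controlled by the computer. The experimenter recorded whether each response was correct. EEG was recorded with a NeuroScan system and a 64-channel cap arranged according to the international 10-20 system.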

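2.5 Procedure

The experiment comprised three phases: learning, test, and the formal experiment. In the learning phase, each picture and its name were presented at the center of the screen for 2 s. Participants were told that these pictures would appear in the formal experiment and were asked to remember each picture and its name. In the test phase, pictures were presented and participants named them; the formal experiment began only once all pictures were named correctly. All pictures depicted common everyday objects with high name agreement, and all participants learned the picture names without difficulty.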

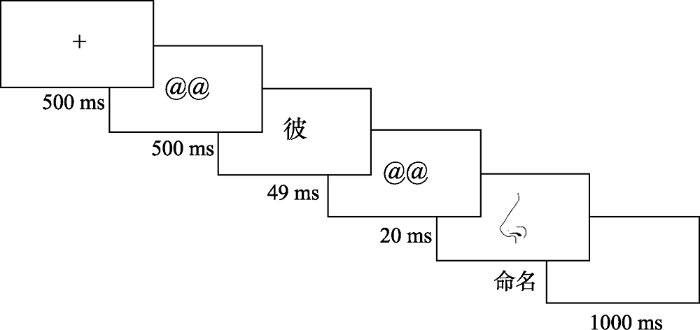

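Each trial in the formal experiment proceeded as follows (see Figure 1): a fixation cross (“+”) for 500 ms, a forward mask (@@) for 500 ms, the prime for 49 ms, a backward mask (@@) for 20 ms, and then the target picture at the center of the screen. Participants named the picture as quickly and accurately as possible within 2000 ms. The picture disappeared as soon as a response was made, and the next trial began after 1000 ms. Four practice trials preceded the formal experiment to familiarize participants with the task. All pictures were standardized to the same size; primes were shown in 28-point Song typeface and masks (@@) in 36-point Song typeface. Completing all naming trials took 100 minutes.

Figure 1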

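2.6 EEG recording and analysis

During online recording the left mastoid served as the reference; the data were re-referenced offline to the bilateral mastoids. The horizontal electrooculogram (HEOG) of both eyes and the vertical electrooculogram (VEOG) of the left eye were recorded. Electrode impedances were kept below 5 kΩ. Signals were band-pass filtered at 0.05-70 Hz and sampled at 500 Hz.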

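Preprocessing was carried out with the EEGLAB toolbox (Delorme & Makeig, 2004). First, electrodes with poor scalp contact or damage during recording (no more than 5% of all electrodes) were replaced using EEGLAB's built-in spherical interpolation, which re-estimates the signal from the data of the surrounding electrodes (Perrin, Pernier, Bertrand, & Echallier, 1989; Pivik et al., 1993). Second, the data were filtered at 0.1-30 Hz and submitted to independent component analysis (ICA). ICA is a blind source separation technique that decomposes multichannel signals into statistically independent components (ICs), and it identifies artifacts caused by blinks and muscle activity well (Makeig, Bell, Jung, & Sejnowski, 1995). Ocular components were identified by the following criteria: an early rank among the components, scalp activity concentrated over frontal sites, power that decays slowly with increasing frequency, and large single-trial power. Muscle components were identified by scalp activity scattered over local lateral sites, power that increases with frequency, and relatively large single-trial power. Each component was checked against these criteria, together with the EEGLAB artifact-detection plugin ADJUST, and artifact components were removed. Third, the EEG was segmented into epochs from 1000 ms before to 1500 ms after picture onset. Epochs with amplitudes exceeding ± 100 μV were rejected, and the data were saved after visual inspection.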
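The epoching and amplitude-rejection step can be sketched in a few lines. The sketch below is plain Python rather than EEGLAB code; the function names are hypothetical, and it assumes a single-channel signal in microvolts sampled at the 500 Hz rate reported above.

```python
def extract_epoch(signal, onset_index, srate_hz=500, pre_ms=1000, post_ms=1500):
    """Cut one epoch from `pre_ms` before to `post_ms` after picture onset."""
    pre = pre_ms * srate_hz // 1000
    post = post_ms * srate_hz // 1000
    if onset_index - pre < 0 or onset_index + post > len(signal):
        raise ValueError("epoch extends beyond the recording")
    return signal[onset_index - pre:onset_index + post]


def is_artifact(epoch, limit_uv=100.0):
    """Flag an epoch whose absolute amplitude exceeds `limit_uv` microvolts."""
    return any(abs(v) > limit_uv for v in epoch)
```

At 500 Hz the 2500 ms epoch corresponds to 1250 samples, which matches the single-trial duration used in the time-frequency analysis.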

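Time-frequency analysis was performed with the FieldTrip toolbox using the wavelet transform (Oostenveld, Fries, Maris, & Schoffelen, 2011). Each epoch was 2500 ms long and was analyzed with a time resolution of 10 ms over 3-30 Hz with a frequency resolution of 1 Hz. The number of wavelet cycles increased linearly from 3 at the lowest frequency to 8 at the highest frequency (for the same settings see Li, Shao, Xia, & Xu, 2019). The wavelets were then convolved with the time-domain EEG to obtain oscillatory power at each time point and frequency (Goupillaud, Grossmann, & Morlet, 1984). Power was first estimated for each trial and then averaged across trials. Power was baseline corrected on a decibel scale, using the interval from 300 to 100 ms before stimulus onset as the baseline: dB = 10 × log10(post-baseline power / mean baseline power). For brevity, event-related spectral perturbations (ERSPs) are displayed for 4-20 Hz.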
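To make the wavelet and decibel steps concrete, here is a minimal pure-Python sketch for a single channel and a single frequency. It is not FieldTrip code: the helper names, the interpolation of cycles over frequency, the Gaussian width (cycles / 2πf), and the ±3 SD kernel length are assumptions made for illustration.

```python
import cmath
import math


def n_cycles(freq, f_min=3.0, f_max=30.0, c_min=3.0, c_max=8.0):
    """Cycles rising linearly from c_min at f_min to c_max at f_max (assumed)."""
    return c_min + (c_max - c_min) * (freq - f_min) / (f_max - f_min)


def morlet_power(signal, srate, freq, cycles):
    """Power over time from convolution with a complex Morlet wavelet."""
    sigma_t = cycles / (2 * math.pi * freq)     # Gaussian SD in seconds
    half = int(round(3 * sigma_t * srate))      # kernel spans +/- 3 SD
    kernel = [
        cmath.exp(2j * math.pi * freq * k / srate)
        * math.exp(-((k / srate) ** 2) / (2 * sigma_t ** 2))
        for k in range(-half, half + 1)
    ]
    norm = sum(abs(w) for w in kernel)
    n = len(signal)
    power = []
    for t in range(n):
        acc = 0j
        for j, w in enumerate(kernel):
            idx = t + j - half
            if 0 <= idx < n:                    # zero-padding at the edges
                acc += signal[idx] * w.conjugate()
        power.append(abs(acc / norm) ** 2)
    return power


def to_db(power, times_ms, base_start=-300, base_end=-100):
    """dB = 10 * log10(power / mean power in the baseline window)."""
    base = [p for p, t in zip(power, times_ms) if base_start <= t <= base_end]
    mean_base = sum(base) / len(base)
    return [10 * math.log10(p / mean_base) for p in power]
```

On this scale a doubling of oscillation amplitude relative to baseline (a fourfold power increase) corresponds to about 6 dB, and values near 0 dB mean no change from baseline.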

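Six regions of interest (ROIs) were defined according to electrode location, and oscillatory power was averaged within each ROI: left anterior (F3, FC3, FC5), mid anterior (Fz, FCz, Cz), right anterior (F4, FC4, FC6), left posterior (P5, P3, PO3), mid posterior (CPz, Pz, POz), and right posterior (P6, P4, PO4). Power was analyzed with a 2 (relation type: syllable, phoneme) × 2 (relatedness: related, unrelated) × 2 (repetition: first, second) × 6 (ROI: left anterior, mid anterior, right anterior, left posterior, mid posterior, right posterior) repeated-measures ANOVA, and p values were Greenhouse-Geisser corrected when sphericity was violated.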
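The ROI averaging can be written down directly from the electrode lists above. The dictionary keys and the function name below are hypothetical labels; the input is assumed to be one power value per electrode for a given time-frequency bin.

```python
ROIS = {
    "left_anterior": ("F3", "FC3", "FC5"),
    "mid_anterior": ("Fz", "FCz", "Cz"),
    "right_anterior": ("F4", "FC4", "FC6"),
    "left_posterior": ("P5", "P3", "PO3"),
    "mid_posterior": ("CPz", "Pz", "POz"),
    "right_posterior": ("P6", "P4", "PO4"),
}


def roi_means(power_by_electrode):
    """Average power over the electrodes of each region of interest."""
    return {
        roi: sum(power_by_electrode[e] for e in electrodes) / len(electrodes)
        for roi, electrodes in ROIS.items()
    }
```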

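To test whether the syllable-related and syllable-unrelated conditions, and the phoneme-related and phoneme-unrelated conditions, differed significantly, we used cluster-based permutation tests, which effectively correct p values for multiple comparisons (Maris & Oostenveld, 2007). The test window was the 600 ms after stimulus onset, in 10 ms steps, across the six ROIs. For each pair of conditions of interest, repeated-measures t tests were computed on the data (time × frequency × electrode), and data points with p < 0.05 that were adjacent in time and space were grouped into the same cluster. The t values within each cluster were then summed to give the cluster-level statistic, and significance was assessed with the Monte Carlo method using 1000 random permutations.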
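The logic of the cluster-based permutation test can be shown with a reduced sketch. It is a simplification, not the FieldTrip implementation: it clusters over time only (the analysis above also spans frequency and electrodes), it permutes by flipping the sign of each participant's condition difference, and the critical t value must be supplied by the caller (for example 2.080 for 21 degrees of freedom, two-tailed). All function names are hypothetical.

```python
import math
import random


def paired_t(diffs):
    """One-sample t statistic on per-participant condition differences."""
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    return 0.0 if var == 0 else mean / math.sqrt(var / n)


def cluster_masses(tvals, thresh):
    """Sum t values within runs of adjacent supra-threshold samples of one sign."""
    masses, current, sign = [], 0.0, 0
    for t in tvals:
        s = 1 if t > thresh else (-1 if t < -thresh else 0)
        if s != 0 and s == sign:
            current += t
        else:
            if sign != 0:
                masses.append(current)
            current, sign = (t if s != 0 else 0.0), s
    if sign != 0:
        masses.append(current)
    return masses


def cluster_permutation_test(diff, thresh, n_perm=1000, seed=0):
    """diff[participant][time] holds related-minus-unrelated power.

    Returns the largest absolute cluster mass and its Monte Carlo p value.
    """
    rng = random.Random(seed)
    n_time = len(diff[0])

    def max_mass(data):
        tvals = [paired_t([row[t] for row in data]) for t in range(n_time)]
        return max((abs(m) for m in cluster_masses(tvals, thresh)), default=0.0)

    observed = max_mass(diff)
    exceed = 0
    for _ in range(n_perm):
        flipped = []
        for row in diff:
            sgn = rng.choice((-1, 1))
            flipped.append([sgn * v for v in row])
        if max_mass(flipped) >= observed:
            exceed += 1
    return observed, (exceed + 1) / (n_perm + 1)
```

Because only the largest cluster mass of each permutation enters the null distribution, a single test covers all time points, which is how the multiple-comparison problem is handled.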

3 Results

3.1 Behavioral results

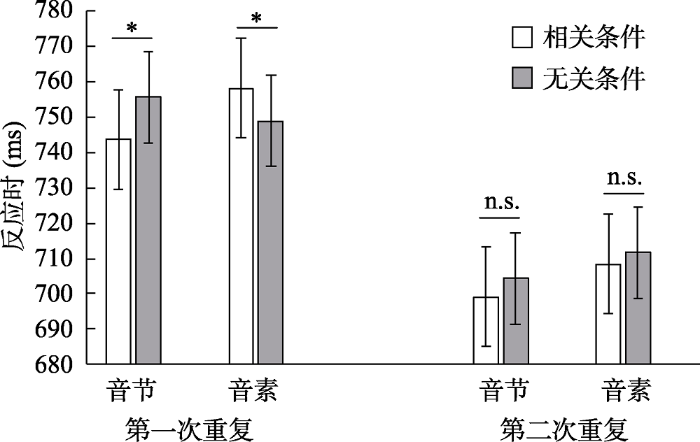

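Two items with error rates above 10% and two items whose mean latencies were more than 3 standard deviations from the mean were removed. Trials not registered by the equipment (2.5%) and trials with naming errors (1.8%) were excluded. Latencies shorter than 200 ms or longer than 1500 ms, together with latencies more than 3 standard deviations from the mean, were also excluded (2.3% of all trials). Figure 2 shows mean naming latencies and 95% confidence intervals (CIs) by condition.

Figure 2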
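The latency trimming rule can be sketched as follows. The order of the two steps (absolute cutoffs first, then the 3 SD criterion on the remaining values) and the pool over which the mean and SD are computed are assumptions; the text does not specify whether trimming was done per participant or per condition.

```python
import statistics


def trim_latencies(rts_ms, low=200.0, high=1500.0, n_sd=3.0):
    """Drop latencies outside [low, high], then those beyond n_sd SDs of the mean."""
    in_range = [rt for rt in rts_ms if low <= rt <= high]
    if len(in_range) < 2:
        return in_range
    mean = statistics.mean(in_range)
    sd = statistics.stdev(in_range)
    return [rt for rt in in_range if abs(rt - mean) <= n_sd * sd]
```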

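A 2 (relation type: syllable, phoneme) × 2 (relatedness: related, unrelated) × 2 (repetition: first, second) repeated-measures ANOVA was conducted on naming latencies, by participants (F1) and by items (F2). The main effect of relation type was significant by participants and marginally significant by items, with faster naming in the syllable conditions, F1(1, 22) = 7.67, p = 0.01, ηp² = 0.26; F2(1, 55) = 3.46, p = 0.07, ηp² = 0.06. The main effect of repetition was significant, with faster naming in the second repetition, F1(1, 22) = 48.24, p < 0.001, ηp² = 0.69; F2(1, 55) = 282.59, p < 0.001, ηp² = 0.84. The interaction between relation type and relatedness was significant, F1(1, 22) = 5.51, p = 0.03, ηp² = 0.20; F2(1, 55) = 7.04, p = 0.01, ηp² = 0.11. Simple effects analyses showed that naming was significantly faster in the syllable-related than in the syllable-unrelated condition, F1(1, 22) = 6.12, p = 0.02, ηp² = 0.22; F2(1, 55) = 5.87, p = 0.02, ηp² = 0.10, whereas the phoneme-related and phoneme-unrelated conditions did not differ significantly, F1(1, 22) = 0.85, p = 0.37, ηp² = 0.04; F2(1, 55) = 1.45, p = 0.23, ηp² = 0.03. The three-way interaction among relation type, relatedness, and repetition was significant by participants and marginally significant by items, F1(1, 22) = 7.88, p = 0.01, ηp² = 0.26; F2(1, 55) = 3.75, p = 0.06, ηp² = 0.06. In the first repetition, the interaction between relation type and relatedness was significant, F1(1, 22) = 13.38, p = 0.001, ηp² = 0.38; F2(1, 55) = 8.53, p = 0.005, ηp² = 0.13. Naming was significantly faster in the syllable-related than in the syllable-unrelated condition, F1(1, 22) = 5.88, p = 0.02, ηp² = 0.21; F2(1, 55) = 6.01, p = 0.02, ηp² = 0.10, and significantly slower in the phoneme-related than in the phoneme-unrelated condition, F1(1, 22) = 5.04, p = 0.04, ηp² = 0.19; F2(1, 55) = 4.53, p = 0.04, ηp² = 0.08. In the second repetition, the interaction between relation type and relatedness was not significant, F1 < 1, F2 < 1. No other main effects or interactions were significant.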
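The reported effect sizes can be cross-checked against the F values, since partial eta squared follows from F and its degrees of freedom: ηp² = (F × df1) / (F × df1 + df2). The small helper below (a hypothetical name) applies this standard identity.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its dfs."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effect of relation type by participants, F1(1, 22) = 7.67
print(round(partial_eta_squared(7.67, 1, 22), 2))
```

Applied to the statistics in this section, the formula gives values that agree with the reported ηp² after rounding to two decimals.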

3.2 Time-frequency results

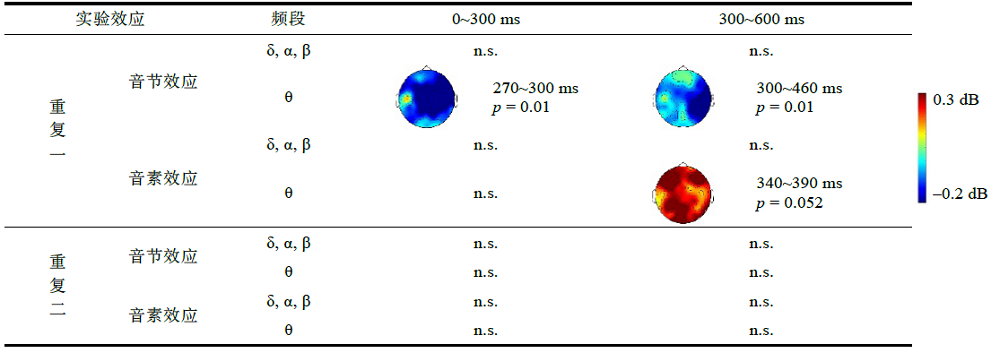

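One participant was excluded from further analysis because of excessive signal artifacts. Trials with latencies shorter than 500 ms (5.1%) or longer than 1500 ms (1.0%) and trials with large artifacts (5.3%) were removed. Based on related studies, we defined six consecutive time windows: 0-100 ms, 100-200 ms, 200-300 ms, 300-400 ms, 400-500 ms, and 500-600 ms (for similar windows see Qu et al., 2012; Zhang & Damian, 2019). Table 1 shows the ANOVA results for theta power in each time window.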

Table 1 ANOVA results for theta power in the 0-600 ms window, with relatedness, relation type, repetition, and ROI as factors

| Source of variation (df) | F (0-100 ms) | ηp² (0-100 ms) | F (100-200 ms) | ηp² (100-200 ms) | F (200-300 ms) | ηp² (200-300 ms) | F (300-400 ms) | ηp² (300-400 ms) | F (400-500 ms) | ηp² (400-500 ms) | F (500-600 ms) | ηp² (500-600 ms) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Relation type (1, 21) | 8.08* | 0.28 | 11.03** | 0.35 | 5.18* | 0.20 | n.s. | | n.s. | | n.s. | |
| Relatedness (1, 21) | n.s. | | n.s. | | n.s. | | n.s. | | n.s. | | n.s. | |
| Repetition (1, 21) | n.s. | | n.s. | | n.s. | | n.s. | | n.s. | | n.s. | |
| Relation type × Relatedness (1, 21) | n.s. | | n.s. | | n.s. | | 5.13* | 0.20 | 6.41* | 0.23 | 6.00* | 0.22 |
| Relation type × Relatedness × Repetition (1, 21) | n.s. | | n.s. | | n.s. | | n.s. | | n.s. | | n.s. | |
| Relatedness × Relation type × Repetition × ROI (5, 105) | n.s. | | n.s. | | n.s. | | n.s. | | n.s. | | n.s. | |

Note: ** p < 0.01, * p < 0.05, n.s. = not significant.

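In the 0-100 ms, 100-200 ms, and 200-300 ms windows, the main effect of relation type was significant, with greater power in the syllable conditions: 0-100 ms, F(1, 21) = 8.08, p = 0.01, ηp² = 0.28; 100-200 ms, F(1, 21) = 11.03, p = 0.003, ηp² = 0.35; 200-300 ms, F(1, 21) = 5.18, p = 0.03, ηp² = 0.20. In the 300-400 ms window, the interaction between relation type and relatedness was significant, F(1, 21) = 5.13, p = 0.03, ηp² = 0.20. Mean power was lower in the syllable-related than in the syllable-unrelated condition, F(1, 21) = 4.43, p = 0.047, ηp² = 0.17, and the phoneme-related and phoneme-unrelated conditions did not differ significantly, p = 0.14. In the 400-500 ms window, the interaction was significant, F(1, 21) = 6.41, p = 0.02, ηp² = 0.23. Mean power was lower in the syllable-related than in the syllable-unrelated condition, F(1, 21) = 5.81, p = 0.03, ηp² = 0.22, and the phoneme conditions did not differ significantly, p = 0.38. In the 500-600 ms window, the interaction was significant, F(1, 21) = 6.00, p = 0.02, ηp² = 0.22. Mean power was marginally lower in the syllable-related than in the syllable-unrelated condition, F(1, 21) = 3.57, p = 0.07, ηp² = 0.15, and the phoneme conditions did not differ significantly, p = 0.16. In the other frequency bands, no interaction was significant in any time window.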

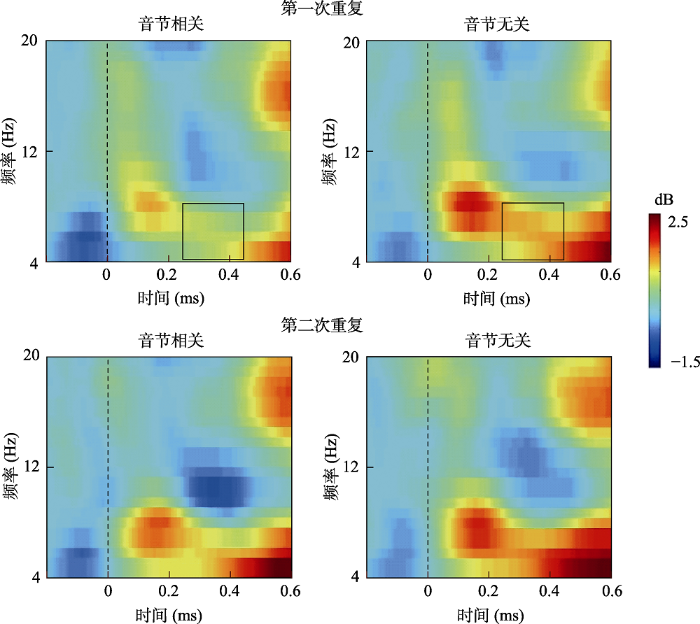

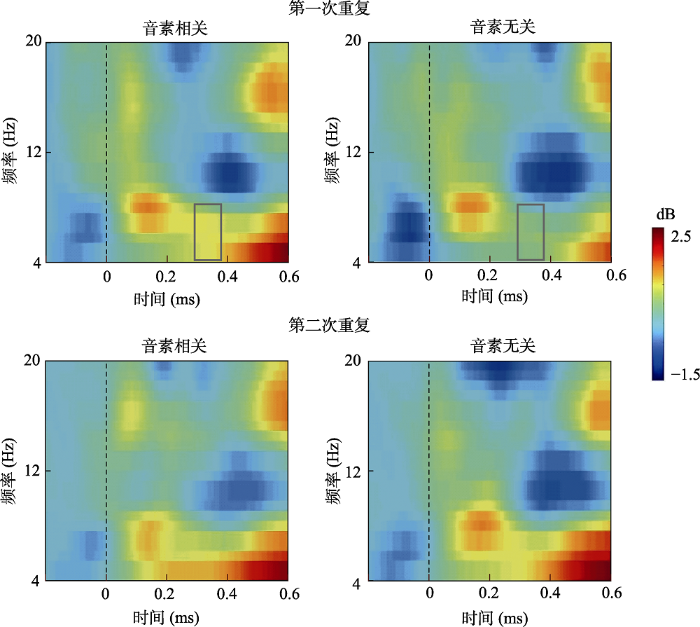

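Cluster-based permutation tests of the syllable and phoneme effects showed that, in the first repetition, the syllable-related condition elicited lower theta power than the syllable-unrelated condition 270-460 ms after stimulus onset in the right anterior ROI (F4, FC4, FC6) (p = 0.01, 4-8 Hz), and the phoneme-related condition elicited higher theta power than the phoneme-unrelated condition 340-390 ms after stimulus onset in the left anterior ROI (F3, FC3, FC5) (marginally significant, p = 0.052, 4-8 Hz). In the second repetition, neither the syllable effect nor the phoneme effect was significant in any ROI. For the other frequency bands within 3-30 Hz (δ, α, β), power did not differ significantly between the conditions of interest (see Table 2). Figures 3a and 3b show the ERSPs of the syllable effect and the phoneme effect, respectively, for each repetition.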

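Figure 3a

Figure 3b Event-related spectral perturbations at FC3 for the phoneme-related and phoneme-unrelated conditions

Note: The dashed line marks picture onset; the gray box marks a marginally significant difference between conditions, 0.05 < p < 0.1, corrected at the cluster level.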

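4 Discussion

The present study used masked priming to examine how the processing of syllables and phonemes in Chinese spoken word production relates to theta-band power. Behaviorally, pictures were named faster in the syllable-related than in the syllable-unrelated condition, and more slowly in the phoneme-related than in the phoneme-unrelated condition. Time-frequency analysis showed that 270-460 ms after picture onset theta power differed significantly between the syllable-related and syllable-unrelated conditions, with lower theta power in the related condition, whereas the phoneme-related and phoneme-unrelated conditions did not differ significantly. These results indicate that theta activity reflects syllable processing during Chinese spoken word production.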

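Behaviorally, consistent with Zhang and Wang (2014), the practice effect from trial repetition not only reduced overall naming latencies in the second repetition but also eliminated the effects of interest. Importantly, in the first repetition we replicated the syllable facilitation effect previously found with implicit priming (Chen et al., 2002), picture-word interference (Yue & Zhang, 2015), and masked priming (Chen et al., 2016). We also found longer naming latencies in the phoneme-related condition, that is, a phoneme inhibition effect (Chen et al., 2016). Notably, in studies of alphabetic languages, primes sharing the target's initial phoneme shorten naming latencies (Alario et al., 2007; Damian & Bowers, 2003; Damian & Martin, 1999; Forster & Davis, 1991; Schiller, 2008). This suggests that speech production differs between alphabetic languages and Chinese. Specifically, speakers of alphabetic languages must determine syllable structure during phonological encoding by processing information such as stress and phonemes (Levelt et al., 1999), whereas Chinese has few syllables, clear syllable boundaries, and no resyllabification, so native Chinese speakers can retrieve syllables directly during phonological encoding (O’Seaghdha et al., 2010). In terms of the present latency data, the syllable facilitation effect indicates that syllables can serve as independent representational units in Chinese speech production: in the syllable-related condition, the target's syllable was processed in advance, which shortened naming latencies. The phoneme inhibition effect most likely reflects competition arising from the co-activation of syllables that share the initial phoneme. In the phoneme-related condition the prime and target share an initial phoneme, and this partial overlap may activate all syllables beginning with that phoneme, so that retrieval of the target syllable suffers interference from other syllables and naming is delayed (this competition account is discussed further below in connection with theta oscillations). Similarly, Sereno and Lee (2015) found a phoneme inhibition effect in an auditory lexical decision task in Chinese. Nevertheless, we consider the phoneme inhibition effect in the present study weaker than the syllable facilitation effect. Neither effect was significant in the second repetition, yet the overall two-way interaction between relation type and relatedness, collapsed across repetitions, remained significant, with faster naming in the syllable-related condition and no significant difference between the phoneme conditions. This indicates at least that, once the second naming is included, performance leans toward syllable facilitation rather than phoneme inhibition (see Figure 2).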

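At the neural level, theta-band power showed an interaction between relation type and relatedness: theta power was lower in the syllable-related than in the syllable-unrelated condition. More importantly, the syllable effect emerged about 270-460 ms after stimulus onset, consistent with the time window of syllable effects in previous ERP studies of picture naming (Cai, Yin, & Zhang, 2020; Dell’Acqua et al., 2010; Wang et al., 2017; Yu et al., 2014; Zhu, Damian, & Zhang, 2015). According to a meta-analysis (Indefrey & Levelt, 2004), phonological encoding typically begins about 250 ms after picture onset, at which point native Chinese speakers must retrieve the syllables of the target word and prepare for subsequent processing. There are two possible explanations for the lower theta power in the syllable-related condition. First, syllable repetition may reduce the power elicited by the repeated syllable. Grill-Spector, Henson, and Martin (2006) noted that neuronal activity decreases when a stimulus is repeated, and this reduced response appears as lower oscillatory power. In an object recognition task, Gruber and Müller (2002) found that repeated stimuli elicited lower gamma-band power than non-repeated stimuli 220-350 ms after picture onset. Using a task-switching paradigm, researchers found that beta and gamma power decreased significantly when the task stayed the same across presentations (repetition condition) relative to when it changed (non-repetition condition) (Gruber, Giabbiconi, Trujillo-Barreto, & Müller, 2006). The reduction of oscillatory power with repeated stimulus processing has also been replicated in face recognition (Engell & McCarthy, 2014). Using functional magnetic resonance imaging (fMRI), Brookes et al. (2005) found that the repetition-induced decrease in oscillatory power is closely related to attenuation of the blood oxygenation level dependent (BOLD) signal. In the present study, the prime's syllable was identical to the syllable of the first character of the target picture name in the syllable-related condition, and this phonological similarity very likely produced a “repetition effect” that lowered theta power. Second, differences in cognitive load between the two conditions may have produced the power difference. Evidence from working memory research indicates that tasks with a heavier cognitive load are associated with higher neural power and tasks with a lighter load with lower power (Roux & Uhlhaas, 2014). In the syllable-related condition, the syllable of the target name had been processed in advance, so cognitive load was relatively low when participants saw the target and retrieved the corresponding syllable for phonological encoding. In the syllable-unrelated condition, by contrast, participants could not retrieve the target's syllable directly from the prime, and cognitive load was relatively high. Theta power was therefore weaker in the syllable-related than in the syllable-unrelated condition, in line with the latency pattern: cognitive load was lower and naming was faster in the syllable-related condition.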

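It should be noted that speech perception studies comparing natural speech with time-reversed speech found higher theta power for natural speech, a pattern that differs from the present finding. The discrepancy stems from the conditions being compared. Peña and Melloni (2012) and Ding et al. (2015) found lower theta power for backward-played than for forward-played material. These authors argued that reversal destroys the normal phonological structure of language, so that listeners cannot identify the syllables in the material; the neurons of the relevant cortex are then in a suppressed state and oscillatory power is relatively low. With forward-played material, listeners can process the syllables successfully, the relevant cortex is in an excited state, and oscillatory power is relatively high. Our comparison of syllable-related and syllable-unrelated conditions contrasts two excited states, whereas the speech perception studies contrasted an excited state with a non-excited state, which produced the different patterns.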

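In contrast to the syllable effect, in the 340-390 ms window we found a marginally significant power difference between the phoneme-related and phoneme-unrelated conditions: in the first repetition, theta power was higher in the phoneme-related condition, a pattern opposite to that for syllable relatedness. When the prime and the target name are phoneme related, they share the initial phoneme (prime: 柏 /bai3/; target: 鼻子 /bi2zi5/). During processing, the overlapping initial phoneme activates all syllables beginning with that phoneme (e.g., /ba/, /bang/), which compete with production of the target syllable, and this appears as stronger theta power in the phoneme-related than in the phoneme-unrelated condition. This is consistent with Chen et al. (2016), who used the same design and task as the present study and found a weak inhibition effect for phoneme-related relative to unrelated primes.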

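In Chinese spoken word production, the theta power change for the syllable effect preceded that for the phoneme effect. This is consistent with the time course of these effects in previous studies and provides evidence, from the perspective of power changes, that the syllable is the proximate unit of phonological encoding. For example, Feng, Yue, and Zhang (2019) manipulated the relation between distractor words and target picture names in a picture-word interference task and found the syllable effect about 320 ms and the phoneme effect about 368 ms after picture onset, both within the phonological encoding stage. Zhang and Damian (2019), in addition to the syllable effect at 300-400 ms, detected a phoneme effect: 500-600 ms after target onset, the phoneme-related condition elicited smaller ERP amplitudes than the phoneme-unrelated condition.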

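Although no study has yet used EEG to compare directly the time courses of phoneme and syllable effects in native speakers of Indo-European languages, research on the syllable frequency effect may offer partial evidence. The syllable frequency effect refers to faster processing and shorter latencies for more frequently used syllables in language production, and it is closely tied to syllable-level processing (Levelt et al., 1999). Using response-locked ERP analysis, Bürki, Cheneval, and Laganaro (2015) found the syllable frequency effect 180-150 ms before vocal onset, a window that corresponds to the phonetic encoding stage of speech production. Comparing semantic and phonological effects in native Italian speakers, Dell’Acqua et al. (2010) found that facilitation from initial-phoneme overlap occurred 250-400 ms after stimulus onset, that is, during phonological encoding (Indefrey & Levelt, 2004). These two studies provide indirect evidence from the EEG time course that phonemes are processed before syllables in alphabetic languages. In contrast, the power changes we observed in Chinese spoken word production show the syllable effect first and the phoneme effect later. The retrieval of syllables and phonemes thus shows opposite temporal patterns in Chinese and in Indo-European languages. This contrast indicates that the unit retrieved first in phonological encoding differs across languages, consistent with the cross-linguistic claim of the proximate units principle (O’Seaghdha et al., 2010).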

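Syllable relatedness shortened picture naming latencies and reduced theta power, whereas phoneme relatedness may lengthen naming latencies and increase theta power. The present results and those from speech perception both indicate that theta power is related to syllable processing, in comprehension tasks as well as production tasks. Nevertheless, the weak, marginally significant phoneme effect suggests that theta power may be related to phonological processing more generally. In the second repetition both the syllable and the phoneme effect disappeared in naming latencies, consistent with previous research (Chen et al., 2016), and the time-frequency results matched the latency results, indicating that the human brain responds rapidly to stimuli it has already processed. Future studies could use English as the target language to test whether theta power differs between related and unrelated conditions when a significant behavioral phoneme effect is present, allowing cross-linguistic comparison and a deeper understanding of the cognitive significance of theta power.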

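It should be noted that although traditional ERP analyses have distinguished the order of syllable and phoneme processing in time, ERP amplitudes show opposite patterns across studies: unlike Zhang and Damian (2019), Dell’Acqua et al. (2010) found larger ERP amplitudes in the phonologically related than in the unrelated condition. As with the conflicting findings on the semantic-violation “N400 effect” (Van Petten & Luka, 2012), inconsistencies in ERP polarity and amplitude across experiments make it hard to interpret the brain mechanisms of language processing. Even when ERP results are consistent across experiments, difference waves do not correspond to specific cognitive processes, so different ERP patterns between conditions cannot be attributed entirely to different processing mechanisms during speech production. For example, in Wang et al. (2017), the syllable-related condition involved relatively large stimulus overlap (剪刀 /jian3dao1/ and 键盘 /jian4pan2/) and the phoneme-related condition relatively small overlap (西瓜 /xi1gua1/ and 信封 /xin4feng1/). Participants may well have detected this difference in overlap subliminally during picture naming, so that the difference between the syllable-related and syllable-unrelated conditions was larger and the difference between the phoneme-related and phoneme-unrelated conditions was smaller or even absent. Although the conditions in the present study were constructed similarly to those of previous studies, time-frequency analysis computes power at each time point and in each frequency range, a measure that directly reflects the activation or inhibition of neurons oscillating at different rates (Cohen, 2017). In the present study, no other frequency band within 3-30 Hz showed a significant power difference between the syllable-related and phoneme-related conditions, indicating that the difference in overlap between the two conditions did not confound the main results. Moreover, theta power was lower in the syllable-related than in the syllable-unrelated condition, whereas for phonemes theta power tended to be higher in the related condition. These opposite oscillatory patterns indicate that syllables and phonemes are processed through different neurocognitive mechanisms during speech production.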

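In conclusion, we propose that theta activity in Chinese spoken word production reflects syllable processing, which supports, from the perspective of band-specific power changes, the view that the syllable is the unit of phonological encoding in Chinese spoken word production. Our findings also suggest that language comprehension and language production elicit comparable changes in oscillatory power. Future work should combine different experimental paradigms to further explore the cognitive significance of power in each frequency band during Chinese speech production.

References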

The role of orthography in speech production revisited

DOI:10.1016/j.cognition.2006.02.002

PMID:16545792

Syntactic unification operations are reflected in oscillatory dynamics during on-line sentence comprehension

DOI:10.1162/jocn.2009.21283

PMID:19580386

Induced neural beta oscillations predict categorical speech perception abilities

Human temporal lobe activation by speech and nonspeech sounds

DOI:10.1093/cercor/10.5.512

PMID:10847601

GLM-beamformer method demonstrates stationary field, alpha ERD and gamma ERS co-localisation with fMRI BOLD response in visual cortex

DOI:10.1016/j.neuroimage.2005.01.050

PMID:15862231

Do speakers have access to a mental syllabary? ERP comparison of high frequency and novel syllable production

DOI:10.1016/j.bandl.2015.08.006

PMID:26367062

SUBTLEX-CH: Chinese word and character frequencies based on film subtitles

The roles of syllables and phonemes during phonological encoding in Chinese spoken word production: A topographic ERP study

Syllable errors from naturalistic slips of the tongue in Mandarin Chinese

Word-form encoding in Mandarin Chinese as assessed by the implicit priming task

The primacy of abstract syllables in Chinese word production

Statistical power analysis for the behavioral sciences (2nd ed.)

Where Does EEG Come From and What Does It Mean

DOI:10.1016/j.tins.2017.02.004

PMID:28314445

Effects of orthography on speech production in a form-preparation paradigm

Time pressure and phonological advance planning in spoken production

Semantic and phonological codes interact in single word production

ERP evidence for ultra-fast semantic processing in the picture-word interference paradigm

A spreading-activation theory of retrieval in sentence production

EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis

Cortical tracking of hierarchical linguistic structures in connected speech

Acoustic landmarks drive delta-theta oscillations to enable speech comprehension by facilitating perceptual parsing

DOI:10.1016/j.neuroimage.2013.06.035

PMID:23791839

Repetition suppression of face-selective evoked and induced EEG recorded from human cortex

The role of phase synchronization in memory processes

DOI:10.1038/nrn2979

PMID:21248789

Syllables are Retrieved before Segments in the Spoken Production of Mandarin Chinese: An ERP Study

The density constraint on form-priming in the naming task: Interference effects from a masked prime

Left superior temporal gyrus is coupled to attended speech in a cocktail-party auditory scene

DOI:10.1523/JNEUROSCI.1730-15.2016

PMID:26843641

Cortical oscillations and speech processing: Emerging computational principles and operations

DOI:10.1038/nn.3063

PMID:22426255

Cycle-octave and related transforms in seismic signal analysis

Repetition and the brain: Neural models of stimulus-specific effects

Speech rhythms and multiplexed oscillatory sensory coding in the human brain

DOI:10.1371/journal.pbio.1001732

PMID:24339748

Effects of picture repetition on induced gamma band responses, evoked potentials, and phase synchrony in the human EEG

Repetition suppression of induced gamma band responses is eliminated by task switching

DOI:10.1111/j.1460-9568.2006.05130.x

PMID:17100853

The formation of cortical object representations requires the activation of cell assemblies, correlated by induced oscillatory bursts of activity > 20 Hz (induced gamma band responses; iGBRs). One marker of the functional dynamics within such cell assemblies is the suppression of iGBRs elicited by repeated stimuli. This effect is commonly interpreted as a signature of 'sharpening' processes within cell-assemblies, which are behaviourally mirrored in repetition priming effects. The present study investigates whether the sharpening of primed objects is an automatic consequence of repeated stimulus processing, or whether it depends on task demands. Participants performed either a 'living/non-living' or a 'bigger/smaller than a shoebox' classification on repeated pictures of everyday objects. We contrasted repetition-related iGBR effects after the same task was used for initial and repeated presentations (no-switch condition) with repetitions after a task-switch occurred (switch condition). Furthermore, we complemented iGBR analysis by examining other brain responses known to be modulated by repetition-related memory processes (evoked gamma oscillations and event-related potentials; ERPs). The results obtained for the 'no-switch' condition replicated previous findings of repetition suppression of iGBRs at 200-300 ms after stimulus onset. Source modelling showed that this effect was distributed over widespread cortical areas. By contrast, after a task-switch no iGBR suppression was found. We concluded that iGBRs reflect the sharpening of a cell assembly only within the same task. After a task switch the complete object representation is reactivated. The ERP (220-380 ms) revealed suppression effects independent of task demands in bilateral posterior areas and might indicate correlates of repetition priming in perceptual structures.

The neuromagnetic response to spoken sentences: Co-modulation of theta band amplitude and phase

The spatial and temporal signatures of word production components

‘Hotdog’, not ‘Hot’ ‘dog’: The phonological planning of compound words

DOI:10.1080/23273798.2014.892144

PMID:24910853

Do we say dog when we say hotdog? In five experiments using the implicit priming paradigm, we assessed whether nominal compounds composed of two free morphemes like sawdust or fishbowl are prepared for production at the segmental level in the same way that two-syllable monomorphemic words (e.g. bandit) are, or instead as sequences of separable words (e.g. full bowl or grey dust). The experiments demonstrated that nominal compounds are planned as a single sequence, not as two sequences. Specifically, the onset of the second component of the compound (e.g. /d/ in sawdust) did not act as a primeable starting point, although comparable onsets did when that component was an independent word (grey dust). We conclude that there may be a dog in hotdog at the morpheme level, but not when phonological segments are prepared for production.

Human gamma-frequency oscillations associated with attention and memory

Alpha-band oscillations, attention, and controlled access to stored information

A theory of lexical access in speech production

DOI:10.1017/s0140525x99001776

PMID:11301520

Preparing words in speech production is normally a fast and accurate process. We generate them two or three per second in fluent conversation; and overtly naming a clear picture of an object can easily be initiated within 600 msec after picture onset. The underlying process, however, is exceedingly complex. The theory reviewed in this target article analyzes this process as staged and feed-forward. After a first stage of conceptual preparation, word generation proceeds through lexical selection, morphological and phonological encoding, phonetic encoding, and articulation itself. In addition, the speaker exerts some degree of output control, by monitoring of self-produced internal and overt speech. The core of the theory, ranging from lexical selection to the initiation of phonetic encoding, is captured in a computational model, called WEAVER++. Both the theory and the computational model have been developed in interaction with reaction time experiments, particularly in picture naming or related word production paradigms, with the aim of accounting for the real-time processing in normal word production. A comprehensive review of theory, model, and experiments is presented. The model can handle some of the main observations in the domain of speech errors (the major empirical domain for most other theories of lexical access), and the theory opens new ways of approaching the cerebral organization of speech production by way of high-temporal-resolution imaging.

Fast oscillatory dynamics during language comprehension: Unification versus maintenance and prediction?

DOI:10.1016/j.bandl.2015.01.003

PMID:25666170

The role of neuronal oscillations during language comprehension is not yet well understood. In this paper we review and reinterpret the functional roles of beta- and gamma-band oscillatory activity during language comprehension at the sentence and discourse level. We discuss the evidence in favor of a role for beta and gamma in unification (the unification hypothesis), and in light of mounting evidence that cannot be accounted for under this hypothesis, we explore an alternative proposal linking beta and gamma oscillations to maintenance and prediction (respectively) during language comprehension. Our maintenance/prediction hypothesis is able to account for most of the findings that are currently available relating beta and gamma oscillations to language comprehension, and is in good agreement with other proposals about the roles of beta and gamma in domain-general cognitive processing. In conclusion we discuss proposals for further testing and comparing the prediction and unification hypotheses.

The cognitive and neural oscillatory mechanisms underlying the facilitating effect of rhythm regularity on speech comprehension

DOI:10.1016/j.jneuroling.2018.05.004

Phase patterns of neuronal responses reliably discriminate speech in human auditory cortex

DOI:10.1016/j.neuron.2007.06.004

PMID:17582338

How natural speech is represented in the auditory cortex constitutes a major challenge for cognitive neuroscience. Although many single-unit and neuroimaging studies have yielded valuable insights about the processing of speech and matched complex sounds, the mechanisms underlying the analysis of speech dynamics in human auditory cortex remain largely unknown. Here, we show that the phase pattern of theta band (4–8 Hz) responses recorded from human auditory cortex with magnetoencephalography (MEG) reliably tracks and discriminates spoken sentences and that this discrimination ability is correlated with speech intelligibility. The findings suggest that an ∼200 ms temporal window (period of theta oscillation) segments the incoming speech signal, resetting and sliding to track speech dynamics. This hypothesized mechanism for cortical speech analysis is based on the stimulus-induced modulation of inherent cortical rhythms and provides further evidence implicating the syllable as a computational primitive for the representation of spoken language.

Independent component analysis of electroencephalographic data

Nonparametric statistical testing of EEG- and MEG-data

DOI:10.1016/j.jneumeth.2007.03.024

PMID:17517438

In this paper, we show how ElectroEncephaloGraphic (EEG) and MagnetoEncephaloGraphic (MEG) data can be analyzed statistically using nonparametric techniques. Nonparametric statistical tests offer complete freedom to the user with respect to the test statistic by means of which the experimental conditions are compared. This freedom provides a straightforward way to solve the multiple comparisons problem (MCP) and it allows to incorporate biophysically motivated constraints in the test statistic, which may drastically increase the sensitivity of the statistical test. The paper is written for two audiences: (1) empirical neuroscientists looking for the most appropriate data analysis method, and (2) methodologists interested in the theoretical concepts behind nonparametric statistical tests. For the empirical neuroscientist, a large part of the paper is written in a tutorial-like fashion, enabling neuroscientists to construct their own statistical test, maximizing the sensitivity to the expected effect. And for the methodologist, it is explained why the nonparametric test is formally correct. This means that we formulate a null hypothesis (identical probability distribution in the different experimental conditions) and show that the nonparametric test controls the false alarm rate under this null hypothesis.

The time course of phonological encoding in language production: Phonological encoding inside a syllable

DOI:10.1016/0749-596X(91)90011-8

Out-of-synchrony speech entrainment in developmental dyslexia

DOI:10.1002/hbm.23206

PMID:27061643

Developmental dyslexia is a reading disorder often characterized by reduced awareness of speech units. Whether the neural source of this phonological disorder in dyslexic readers results from the malfunctioning of the primary auditory system or damaged feedback communication between higher-order phonological regions (i.e., left inferior frontal regions) and the auditory cortex is still under dispute. Here we recorded magnetoencephalographic (MEG) signals from 20 dyslexic readers and 20 age-matched controls while they were listening to approximately 10-s-long spoken sentences. Compared to controls, dyslexic readers had (1) an impaired neural entrainment to speech in the delta band (0.5-1 Hz); (2) a reduced delta synchronization in both the right auditory cortex and the left inferior frontal gyrus; and (3) an impaired feedforward functional coupling between neural oscillations in the right auditory cortex and the left inferior frontal regions. This shows that during speech listening, individuals with developmental dyslexia present reduced neural synchrony to low-frequency speech oscillations in primary auditory regions that hinders higher-order speech processing steps. The present findings, thus, strengthen proposals assuming that improper low-frequency acoustic entrainment affects speech sampling. This low speech-brain synchronization has the strong potential to cause severe consequences for both phonological and reading skills. Interestingly, the reduced speech-brain synchronization in dyslexic readers compared to normal readers (and its higher-order consequences across the speech processing network) appears preserved through the development from childhood to adulthood. Thus, the evaluation of speech-brain synchronization could possibly serve as a diagnostic tool for early detection of children at risk of dyslexia. Hum Brain Mapp 37:2767-2783, 2016.

FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data

DOI:10.1155/2011/363565

PMID:21687575

This paper presents

Proximate units in word production: Phonological encoding begins with syllables in Mandarin Chinese but with segments in English

DOI:10.1016/j.cognition.2010.01.001

PMID:20149354

In Mandarin Chinese, speakers benefit from fore-knowledge of what the first syllable but not of what the first phonemic segment of a disyllabic word will be (Chen, Chen, & Dell, 2002), contrasting with findings in English, Dutch, and other Indo-European languages, and challenging the generality of current theories of word production. In this article, we extend the evidence for the language difference by showing that failure to prepare onsets in Mandarin (Experiment 1) applies even to simple monosyllables (Experiments 2-4), and confirm the contrast with English for comparable materials (Experiments 5 and 6). We also provide new evidence that Mandarin speakers do reliably prepare tonally unspecified phonological syllables (Experiment 7). To account for these patterns, we propose a language general proximate units principle whereby intentional preparation for speech as well as phonological-lexical coordination are grounded at the first phonological level below the word at which explicit unit selection occurs. The language difference arises because syllables are proximate units in Mandarin Chinese, whereas segments are proximate in English and other Indo-European languages. The proximate units perspective reconciles the aspiration toward a language general account of word production with the reality of substantial cross-linguistic differences.

Phase-locked responses to speech in human auditory cortex are enhanced during comprehension

DOI:10.1093/cercor/bhs118

PMID:22610394

A growing body of evidence shows that ongoing oscillations in auditory cortex modulate their phase to match the rhythm of temporally regular acoustic stimuli, increasing sensitivity to relevant environmental cues and improving detection accuracy. In the current study, we test the hypothesis that nonsensory information provided by linguistic content enhances phase-locked responses to intelligible speech in the human brain. Sixteen adults listened to meaningful sentences while we recorded neural activity using magnetoencephalography. Stimuli were processed using a noise-vocoding technique to vary intelligibility while keeping the temporal acoustic envelope consistent. We show that the acoustic envelopes of sentences contain most power between 4 and 7 Hz and that it is in this frequency band that phase locking between neural activity and envelopes is strongest. Bilateral oscillatory neural activity phase-locked to unintelligible speech, but this cerebro-acoustic phase locking was enhanced when speech was intelligible. This enhanced phase locking was left lateralized and localized to left temporal cortex. Together, our results demonstrate that entrainment to connected speech does not only depend on acoustic characteristics, but is also affected by listeners' ability to extract linguistic information. This suggests a biological framework for speech comprehension in which acoustic and linguistic cues reciprocally aid in stimulus prediction.

θ-band and β-band neural activity reflects independent syllable tracking and comprehension of time-compressed speech

DOI:10.1523/JNEUROSCI.2882-16.2017

PMID:28729443

Recent psychophysics data suggest that speech perception is not limited by the capacity of the auditory system to encode fast acoustic variations through neural gamma activity, but rather by the time given to the brain to decode them. Whether the decoding process is bounded by the capacity of theta rhythm to follow syllabic rhythms in speech, or constrained by a more endogenous top-down mechanism, e.g., involving beta activity, is unknown. We addressed the dynamics of auditory decoding in speech comprehension by challenging syllable tracking and speech decoding using comprehensible and incomprehensible time-compressed auditory sentences. We recorded EEGs in human participants and found that neural activity in both theta and gamma ranges was sensitive to syllabic rate. Phase patterns of slow neural activity consistently followed the syllabic rate (4-14 Hz), even when this rate went beyond the classical theta range (4-8 Hz). The power of theta activity increased linearly with syllabic rate but showed no sensitivity to comprehension. Conversely, the power of beta (14-21 Hz) activity was insensitive to the syllabic rate, yet reflected comprehension on a single-trial basis. We found different long-range dynamics for theta and beta activity, with beta activity building up in time while more contextual information becomes available. This is consistent with the roles of theta and beta activity in stimulus-driven versus endogenous mechanisms. These data show that speech comprehension is constrained by concurrent stimulus-driven theta and low-gamma activity, and by endogenous beta activity, but not primarily by the capacity of theta activity to track the syllabic rhythm.

SIGNIFICANCE STATEMENT: Speech comprehension partly depends on the ability of the auditory cortex to track syllable boundaries with theta-range neural oscillations. The reason comprehension drops when speech is accelerated could hence be because theta oscillations can no longer follow the syllabic rate. Here, we presented subjects with comprehensible and incomprehensible accelerated speech, and show that neural phase patterns in the theta band consistently reflect the syllabic rate, even when speech becomes too fast to be intelligible. The drop in comprehension, however, is signaled by a significant decrease in the power of low-beta oscillations (14-21 Hz). These data suggest that speech comprehension is not limited by the capacity of theta oscillations to adapt to syllabic rate, but by an endogenous decoding process.

Brain oscillations during spoken sentence processing

DOI:10.1162/jocn_a_00144

PMID:21981666

Spoken sentence comprehension relies on rapid and effortless temporal integration of speech units displayed at different rates. Temporal integration refers to how chunks of information perceived at different time scales are linked together by the listener in mapping speech sounds onto meaning. The neural implementation of this integration remains unclear. This study explores the role of short and long windows of integration in accessing meaning from long samples of speech. In a cross-linguistic study, we explore the time course of oscillatory brain activity between 1 and 100 Hz, recorded using EEG, during the processing of native and foreign languages. We compare oscillatory responses in a group of Italian and Spanish native speakers while they attentively listen to Italian, Japanese, and Spanish utterances, played either forward or backward. The results show that both groups of participants display a significant increase in gamma band power (55-75 Hz) only when they listen to their native language played forward. The increase in gamma power starts around 1000 msec after the onset of the utterance and decreases by its end, resembling the time course of access to meaning during speech perception. In contrast, changes in low-frequency power show similar patterns for both native and foreign languages. We propose that gamma band power reflects a temporal binding phenomenon concerning the coordination of neural assemblies involved in accessing meaning of long samples of speech.

Spherical splines for scalp potential and current density mapping

DOI:10.1016/0013-4694(89)90180-6

PMID:2464490

Description of mapping methods using spherical splines, both to interpolate scalp potentials (SPs), and to approximate scalp current densities (SCDs). Compared to a previously published method using thin plate splines, the advantages are a very simple derivation of the SCD approximation, faster computing times, and greater accuracy in areas with few electrodes.

Guidelines for the recording and quantitative analysis of electroencephalographic activity in research contexts

DOI:10.1111/j.1469-8986.1993.tb02081.x

PMID:8248447

Developments in technologic and analytical procedures applied to the study of brain electrical activity have intensified interest in this modality as a means of examining brain function. The impact of these new developments on traditional methods of acquiring and analyzing electroencephalographic activity requires evaluation. Ultimately, the integration of the old with the new must result in an accepted standardized methodology to be used in these investigations. In this paper, basic procedures and recent developments involved in the recording and analysis of brain electrical activity are discussed and recommendations are made, with emphasis on psychophysiological applications of these procedures.

The analysis of speech in different temporal integration windows: Cerebral lateralization as 'asymmetric sampling in time'

DOI:10.1016/S0167-6393(02)00107-3

Neural entrainment to rhythmically presented auditory, visual, and audio-visual speech in children

DOI:10.3389/fpsyg.2012.00216

PMID:22833726

Auditory cortical oscillations have been proposed to play an important role in speech perception. It is suggested that the brain may take temporal

Sound-sized segments are significant for Mandarin speakers

DOI:10.1073/pnas.1200632109

PMID:22891321

Do speakers of all languages use segmental speech sounds when they produce words? Existing models of language production generally assume a mental representation of individual segmental units, or phonemes, but the bulk of evidence comes from speakers of European languages in which the orthographic system codes explicitly for speech sounds. By contrast, in languages with nonalphabetical scripts, such as Mandarin Chinese, individual speech sounds are not orthographically represented, raising the possibility that speakers of these languages do not use phonemes as fundamental processing units. We used event-related potentials (ERPs) combined with behavioral measurement to investigate the role of phonemes in Mandarin production. Mandarin native speakers named colored line drawings of objects using color adjective-noun phrases; color and object name either shared the initial phoneme or were phonologically unrelated. Whereas naming latencies were unaffected by phoneme repetition, ERP responses were modulated from 200 ms after picture onset. Our ERP findings thus provide strong support for the claim that phonemic segments constitute fundamental units of phonological encoding even for speakers of languages that do not encode such units orthographically.

The WEAVER model of word-form encoding in speech production

DOI:10.1016/s0010-0277(97)00027-9

PMID:9426503

Lexical access in speaking consists of two major steps: lemma retrieval and word-form encoding. In Roelofs (Roelofs, A. 1992a. Cognition 42, 107-142; Roelofs, A. 1993. Cognition 47, 59-87), I described a model of lemma retrieval. The present paper extends this work by presenting a comprehensive model of the second access step, word-form encoding. The model is called WEAVER (Word-form Encoding by Activation and VERification). Unlike other models of word-form generation, WEAVER is able to provide accounts of response time data, particularly from the picture-word interference paradigm and the implicit priming paradigm. Its key features are (1) retrieval by spreading activation, (2) verification of activated information by a production rule, (3) a rightward incremental construction of phonological representations using a principle of active syllabification: syllables are constructed on the fly rather than stored with lexical items, (4) active competitive selection of syllabic motor programs using a mathematical formalism that generates response times and (5) the association of phonological speech errors with the selection of syllabic motor programs due to the failure of verification.

Modeling of phonological encoding in spoken word production: From Germanic languages to Mandarin Chinese and Japanese

DOI:10.1111/jpr.2015.57.issue-1

Working memory and neural oscillations: Alpha-gamma versus theta-gamma codes for distinct WM information?

DOI:10.1016/j.tics.2013.10.010

PMID:24268290

Neural oscillations at different frequencies have recently been related to a wide range of basic and higher cognitive processes. One possible role of oscillatory activity is to assure the maintenance of information in working memory (WM). Here we review the possibility that rhythmic activity at theta, alpha, and gamma frequencies serve distinct functional roles during WM maintenance. Specifically, we propose that gamma-band oscillations are generically involved in the maintenance of WM information. By contrast, alpha-band activity reflects the active inhibition of task-irrelevant information, whereas theta-band oscillations underlie the organization of sequentially ordered WM items. Finally, we address the role of cross-frequency coupling (CFC) in enabling alpha-gamma and theta-gamma codes for distinct WM information.

Combining functional and anatomical connectivity reveals brain networks for auditory language comprehension

DOI:10.1016/j.neuroimage.2009.11.009

Cognitive functions are organized in distributed, overlapping, and interacting brain networks. Investigation of those large-scale brain networks is a major task in neuroimaging research. Here, we introduce a novel combination of functional and anatomical connectivity to study the network topology subserving a cognitive function of interest. (i) In a given network, direct interactions between network nodes are identified by analyzing functional MRI time series with the multivariate method of directed partial correlation (dPC). This method provides important improvements over shortcomings that are typical for ordinary (partial) correlation techniques. (ii) For directly interacting pairs of nodes, a region-to-region probabilistic fiber tracking on diffusion tensor imaging data is performed to identify the most probable anatomical white matter fiber tracts mediating the functional interactions. This combined approach is applied to the language domain to investigate the network topology of two levels of auditory comprehension: lower-level speech perception (i.e., phonological processing) and higher-level speech recognition (i.e., semantic processing). For both processing levels, dPC analyses revealed the functional network topology and identified central network nodes by the number of direct interactions with other nodes. Tractography showed that these interactions are mediated by distinct ventral (via the extreme capsule) and dorsal (via the arcuate/superior longitudinal fascicle fiber system) long- and short-distance association tracts as well as commissural fibers. Our findings demonstrate how both processing routines are segregated in the brain on a large-scale network level. Combining dPC with probabilistic tractography is a promising approach to unveil how cognitive functions emerge through interaction of functionally interacting and anatomically interconnected brain regions.

The masked onset priming effect in picture naming

DOI:10.1016/j.cognition.2007.03.007

PMID:17442296

Reading aloud is faster when targets (e.g., PAIR) are preceded by visually masked primes sharing just the onset (e.g., pole) compared to all different primes (e.g., take). This effect is known as the masked onset priming effect (MOPE). One crucial feature of this effect is its presumed non-lexical basis. This aspect of the MOPE is tested in the current study. Dutch participants named pictures having bisyllabic names, which were preceded by visually masked primes. Picture naming was facilitated by first-segment but not last-segment primes, and by first-syllable as well as last-syllable primes. Whole-word primes with first or last segment overlap slowed down picture naming latencies significantly. The first-segment priming effect (i.e., MOPE) cannot be accounted for by non-lexical response competition since pictures cannot be named via the non-lexical route. Instead, the effects obtained in this study can be accommodated by a speech-planning account of the MOPE.

The Contribution of Segmental and Tonal Information in Mandarin Spoken Word Processing

DOI:10.1177/0023830914522956

Spectral fingerprints of large-scale neuronal interactions

DOI:10.1038/nrn3137

Cognition results from interactions among functionally specialized but widely distributed brain regions; however, neuroscience has so far largely focused on characterizing the function of individual brain regions and neurons therein. Here we discuss recent studies that have instead investigated the interactions between brain regions during cognitive processes by assessing correlations between neuronal oscillations in different regions of the primate cerebral cortex. These studies have opened a new window onto the large-scale circuit mechanisms underlying sensorimotor decision-making and top-down attention. We propose that frequency-specific neuronal correlations in large-scale cortical networks may be 'fingerprints' of canonical neuronal computations underlying cognitive processes.

Prediction during language comprehension: Benefits, costs, and ERP components

DOI:10.1016/j.ijpsycho.2011.09.015

Because context has a robust influence on the processing of subsequent words, the idea that readers and listeners predict upcoming words has attracted research attention, but prediction has fallen in and out of favor as a likely factor in normal comprehension. We note that the common sense of this word includes both benefits for confirmed predictions and costs for disconfirmed predictions. The N400 component of the event-related potential (ERP) reliably indexes the benefits of semantic context. Evidence that the N400 is sensitive to the other half of prediction - a cost for failure - is largely absent from the literature. This raises the possibility that "prediction" is not a good description of what comprehenders do. However, it need not be the case that the benefits and costs of prediction are evident in a single ERP component. Research outside of language processing indicates that late positive components of the ERP are very sensitive to disconfirmed predictions. We review late positive components elicited by words that are potentially more or less predictable from preceding sentence context. This survey suggests that late positive responses to unexpected words are fairly common, but that these consist of two distinct components with different scalp topographies, one associated with semantically incongruent words and one associated with congruent words. We conclude with a discussion of the possible cognitive correlates of these distinct late positivities and their relationships with more thoroughly characterized ERP components, namely the P300, P600 response to syntactic errors, and the "old/new effect" in studies of recognition memory.

Primary phonological planning units in spoken word production are language-specific: Evidence from an ERP study

DOI:10.1038/s41598-017-06186-z

PMID:28724982

It is widely acknowledged in Germanic languages that segments are the primary planning units at the phonological encoding stage of spoken word production. Mixed results, however, have been found in Chinese, and it is still unclear what roles syllables and segments play in planning Chinese spoken word production. In the current study, participants were asked to first prepare and later produce disyllabic Mandarin words upon picture prompts and a response cue while electroencephalogram (EEG) signals were recorded. Each two consecutive pictures implicitly formed a pair of prime and target, whose names shared the same word-initial atonal syllable or the same word-initial segments, or were unrelated in the control conditions. Only syllable repetition induced significant effects on event-related brain potentials (ERPs) after target onset: a widely distributed positivity in the 200- to 400-ms interval and an anterior positivity in the 400- to 600-ms interval. We interpret these to reflect syllable-size representations at the phonological encoding and phonetic encoding stages. Our results provide the first electrophysiological evidence for the distinct role of syllables in producing Mandarin spoken words, supporting a language specificity hypothesis about the primary phonological units in spoken word production.

Synchronous neural oscillations and cognitive processes

DOI:10.1016/j.tics.2003.10.012

PMID:14643372

The central problem for cognitive neuroscience is to describe how cognitive processes arise from brain processes. This review summarizes the recent evidence that synchronous neural oscillations reveal much about the origin and nature of cognitive processes such as memory, attention and consciousness. Memory processes are most closely related to theta and gamma rhythms, whereas attention seems closely associated with alpha and gamma rhythms. Conscious awareness may arise from synchronous neural oscillations occurring globally throughout the brain rather than from the locally synchronous oscillations that occur when a sensory area encodes a stimulus. These associations between the dynamics of the brain and cognitive processes indicate progress towards a unified theory of brain and cognition.

Masked syllable priming effects in word and picture naming in Chinese

DOI:10.1371/journal.pone.0046595

PMID:23056360

Four experiments investigated the role of the syllable in Chinese spoken word production. Chen, Chen and Ferrand (2003) reported a syllable priming effect when primes and targets shared the first syllable using a masked priming paradigm in Chinese. Our Experiment 1 was a direct replication of Chen et al.'s (2003) Experiment 3 employing CV (e.g., /ba2.ying2/, strike camp) and CVG (e.g., /bai2.shou3/, white haired) syllable types. Experiment 2 tested the syllable priming effect using different syllable types: e.g., CV (/qi4.qiu2/, balloon) and CVN (/qing1.ting2/, dragonfly). Experiment 3 investigated this issue further using line drawings of common objects as targets that were preceded either by a CV (e.g., /qi3/, attempt), or a CVN (e.g., /qing2/, affection) prime. Experiment 4 further examined the priming effect by a comparison between CV or CVN priming and an unrelated priming condition using CV-NX (e.g., /mi2.ni3/, mini) and CVN-CX (e.g., /min2.ju1/, dwellings) as target words. These four experiments consistently found that CV targets were named faster when preceded by CV primes than when they were preceded by CVG, CVN or unrelated primes, whereas CVG or CVN targets showed the reverse pattern. These results indicate that the priming effect critically depends on the match between the structure of the prime and that of the first syllable of the target. The effect obtained in this study was consistent across different stimuli and different tasks (word and picture naming), and provides more conclusive and consistent data regarding the role of the syllable in Chinese speech production.

The role of phoneme in Mandarin Chinese production: Evidence from ERPs

DOI:10.1371/journal.pone.0106486

PMID:25191857

Established linguistic theoretical frameworks propose that alphabetic language speakers use phonemes as phonological encoding units during speech production whereas Mandarin Chinese speakers use syllables. This framework was challenged by recent neural evidence of facilitation induced by overlapping initial phonemes, raising the possibility that phonemes also contribute to the phonological encoding process in Chinese. However, there is no evidence of non-initial phoneme involvement in Chinese phonological encoding among representative Chinese speakers, rendering the functional role of phonemes in spoken Chinese controversial. Here, we addressed this issue by systematically investigating the word-initial and non-initial phoneme repetition effect on the electrophysiological signal using a picture-naming priming task in which native Chinese speakers produced disyllabic word pairs. We found that overlapping phonemes in both the initial and non-initial position evoked more positive ERPs in the 180- to 300-ms interval, indicating position-invariant repetition facilitation effect during phonological encoding. Our findings thus revealed the fundamental role of phonemes as independent phonological encoding units in Mandarin Chinese.

Syllable and Segments Effects in Mandarin Chinese Spoken Word Production

DOI:10.3724/SP.J.1041.2015.00319

Speaking involves stages of conceptual preparation, lemma selection, word-form encoding and articulation. Furthermore, word-form encoding can be divided into morphological encoding, phonological encoding and phonetic encoding. What the functional unit is at the stage of word-form encoding remains a controversial issue in speech production theories. The present study investigated syllable and segment effects at the stages of phonological encoding, phonetic encoding, and articulation in Mandarin spoken word production. Using the picture-word interference (PWI) paradigm, we compared the effects generated in immediate naming (Experiment 1), delayed naming (Experiment 2), and delayed naming combined with articulation suppression (Experiment 3) tasks. Eighteen black-and-white line drawings were used as stimuli, and their names were monosyllabic words. Each target picture was paired with four distractor words. A CVC-related (C: consonant, V: vowel) distractor word shared a syllable with the picture name but always differed in tone (e.g., 羊 /yang2/ as target name, 央 /yang1/ as distractor word). A CV-related distractor word shared the onset consonant and the core vowel with the picture name (e.g., 羊 /yang2/, 药 /yao4/). A VC-related distractor shared the rhyme with the picture name (e.g., 羊 /yang2/, 让 /rang4/). An unrelated distractor stood in no obvious semantic, phonological or orthographic relation to the picture name. We found syllable and segment facilitation effects in immediate naming, but syllable and segment inhibition effects in delayed naming and in the task combining delayed naming with articulation suppression.
Immediate naming involves the stages of phonological encoding, phonetic encoding, and articulation; delayed naming involves articulation only; and the task combining delayed naming with articulation suppression involves phonetic encoding and articulation. By comparing these effects across the three tasks, we suggest that the syllable and segment facilitation effects are localized at the stage of phonological encoding, whereas the syllable and segment inhibition effects are localized at the stage of phonetic encoding and/or articulation. These findings indicate that syllables play a more important role in phonological encoding, whereas segments play their role in phonetic encoding and articulation for motor programming. Our findings support the Proximate Unit Principle and the assumption that premotor (phonological encoding) and motor stages (phonetic encoding and articulation) are independent.

汉语口语产生中音节和音段的促进和抑制效应

The syllable’s role in language production

The present paper addresses the syllable’s role in language production. First, the concept of the syllable is introduced. Second, the syllable’s position in language production theories is discussed. Then studies of the syllable are summarized from two aspects: (1) the influential experimental paradigms, and (2) three important issues in this field. The main experimental paradigms include the masked priming, repetition priming, implicit priming and picture-word interference paradigms. The three issues are: (1) whether the syllable is a functional unit in language production, (2) how the syllable plays its role in speech production, and (3) where the syllable priming effect arises. Finally, in light of the unique characteristics of the syllable in Chinese, studies of the syllable in Chinese word production and perspectives for further research are discussed.

音节在语言产生中的作用

Syllables constitute proximate units for Mandarin speakers: Electrophysiological evidence from a masked priming task

DOI:10.1111/psyp.13317

PMID:30657602

Languages may differ regarding the primary mental unit of phonological encoding in spoken production, with models of speakers of Indo-European languages generally assuming a central role for phonemes, but spoken Chinese production potentially attributing a more prominent role to syllables. In the present study, native Mandarin Chinese speakers named objects that were preceded by briefly presented and masked prime words, which were form related and either matched or mismatched concerning their syllabic structure, or were unrelated. Behavioral results showed a previously reported interaction between prime and target syllable type. Concurrently recorded EEG also exhibited this interaction and further revealed that syllable overlap modulated ERPs mainly in the time window of 300-400 ms after picture onset. By contrast, phonemic overlap modulated ERPs from 500 ms to 600 ms. This pattern might suggest that speakers retrieved syllables before phonemes and strengthens the claim that for Chinese individuals syllables constitute primary functional representations (

Syllable frequency and word frequency effects in spoken and written word production in a non-alphabetic script

DOI:10.3389/fpsyg.2014.00120

PMID:24600420

The effects of word frequency (WF) and syllable frequency (SF) are well-established phenomena in domains such as spoken production in alphabetic languages. Chinese, as a non-alphabetic language, presents unique lexical and phonological properties in speech production. For example, the proximate unit of phonological encoding is the syllable in Chinese but the segment in Dutch, French or English. The present study investigated the effects of WF and SF, and their interaction, in Chinese written and spoken production. Significant facilitatory WF and SF effects were observed in spoken as well as in written production. The SF effect in writing indicated that phonological properties (i.e., syllabic frequency) constrain orthographic output via a lexical route, at least in Chinese written production. However, the SF effect over repetitions diverged between the two modalities: it was significant in the first two repetitions in spoken production, whereas it was significant only in the second repetition in written production. Due to the fragility of the SF effect in writing, we suggest that the phonological influence in handwritten production is not mandatory and universal, and that it is modulated by experimental manipulations. This provides evidence for the orthographic autonomy hypothesis, rather than the phonological mediation hypothesis. The absence of an interaction between WF and SF showed that the SF effect is independent of the WF effect in the spoken and written output modalities. The implications of these results for written production models are discussed.

The determiners of picture-naming latency

This paper explored the determinants of picture-naming latency. Using a picture naming task and a 5-point rating scale, we measured name agreement, familiarity, image agreement, visual complexity and word length for 311 pictures. Correlation analyses indicated that concept agreement, familiarity, image agreement and word frequency were the major determinants of picture-naming latency. Stepwise multiple regressions were carried out on picture-naming latency. The R square of the regression equation was 55.5%. On the basis of naming latency, the pictures were divided into quintiles. These measures will provide valuable information for future studies of picture naming and other cognitive processes.

影响图画命名时间的因素

The lexical access theory in speech production

言语产生中的词汇通达理论

The phonological planning unit in Chinese monosyllabic word production

汉语单音节词汇产生中音韵编码的单元

Seriality of semantic and phonological processes during overt speech in Mandarin as revealed by event-related brain potentials

DOI:10.1016/j.bandl.2015.03.007

PMID:25880902

How is information transmitted across semantic and phonological levels in spoken word production? Recent evidence from speakers of Western languages such as English and Dutch suggests non-discrete transmission, but it is not clear whether this view can be generalized to other languages such as Mandarin, given potential differences in phonological encoding across languages. The present study used Mandarin speakers and combined a behavioral picture-word interference task with event-related potentials. The design factorially crossed semantic and phonological relatedness. Results showed semantic and phonological effects both in behavioral and electrophysiological measurements, with statistical additivity in latencies, and discrete time signatures (250-450 ms and 450-600 ms after picture onset for the semantic and phonological condition, respectively). Overall, results suggest that in Mandarin spoken production, information is transmitted from semantic to phonological levels in a sequential fashion. Hence, temporal signatures associated with spoken word production might differ depending on target language.