1 Introduction

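Music and language are unique to human society and are two important means of human communication. As early as 1871, Darwin proposed the protolanguage hypothesis, holding that language and music may share a common origin. For early humans, the main function of language and music may have been emotional expression (Thompson, Marin, & Stewart, 2012). As human societies became more socialized, language and music gradually diverged and evolved in different directions (Mithen, 2006; Perlovsky, 2010). Language developed into a symbolic communication system with explicit semantics, whereas music became an important means of emotional expression (Jackendoff, 2009).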

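Music may have emerged before language. Before language took shape, humans may already have been able to communicate in a music-like way (Darwin, 1871; Levman, 1992). Does linguistic information with explicit semantics then help music express emotion? Or is music an independent means of communication whose emotional expression does not depend on semantic information, so that semantic information, if anything, interferes with it? Songs contain both lyrics and melody and thus combine features of language and music. A singer can present only the melody of a song (for example, by singing it on the nonsense syllable "la"), or can present the melody together with the lyrics. Many studies have therefore used songs to reveal how linguistic information with explicit semantics affects the processing of musical emotion. This question helps clarify the relationship between language and music in emotional processing.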

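Previous studies have examined this question mainly at the behavioral level, and they show that lyrics do affect listeners' processing of musical emotion (Ali & Peynircioğlu, 2006; Mori & Iwanaga, 2013; Stratton & Zalanowski, 1994). For example, Stratton and Zalanowski (1994) took the song "Why I was born" as the source stimulus and created three versions: lyrics only, music only, and lyrics with piano accompaniment. Listeners rated the pleasantness of each stimulus, and their mood before and after the experiment was measured with rating scales. Listeners rated the version without lyrics as significantly more pleasant than the two versions with lyrics. The music without lyrics decreased listeners' negative emotions and increased their positive emotions. The versions with lyrics showed the opposite pattern, with negative emotions increasing and positive emotions decreasing. These results indicate that lyrics affect listeners' emotional processing of music whether the lyrics are presented alone or together with a melody. Computational studies also support this effect. Whether an algorithm includes lyric information affects its accuracy (Laurier, Grivolla, & Herrera, 2008) and hit rate (Hu, Downie, & Ehmann, 2009) in automatically classifying musical emotion.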

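However, other studies have found that listeners' emotional experience does not change significantly after they hear music with lyrics, whether the lyrics are spoken or sung (Galizio & Hendrick, 1972). Similarly, Sousou (1997) measured listeners' mood before and after listening and found that lyrics did not affect their emotional experience of the music. These discrepancies may stem from two sources.

First, the comparison conditions differed across experiments. Specifically, Galizio and Hendrick (1972) and Sousou (1997) did not include a no-lyrics control condition, whereas other studies did (Ali & Peynircioğlu, 2006; Brattico et al., 2011; Stratton & Zalanowski, 1994).

Second, researchers did not use objective measures to quantify listeners' processing of musical emotion, and most of the studies above relied on subjective ratings. For example, Stratton and Zalanowski (1994) used the Multiple Affect Adjective Check List-Revised (MAACL-R), whereas Sousou (1997) and Mori and Iwanaga (2013) used self-developed rating items. The dimension being rated also varied across studies, from pleasantness (Mori & Iwanaga, 2013; Stratton & Zalanowski, 1994) to intensity (Ali & Peynircioğlu, 2006). All of this makes it difficult to compare results across studies.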

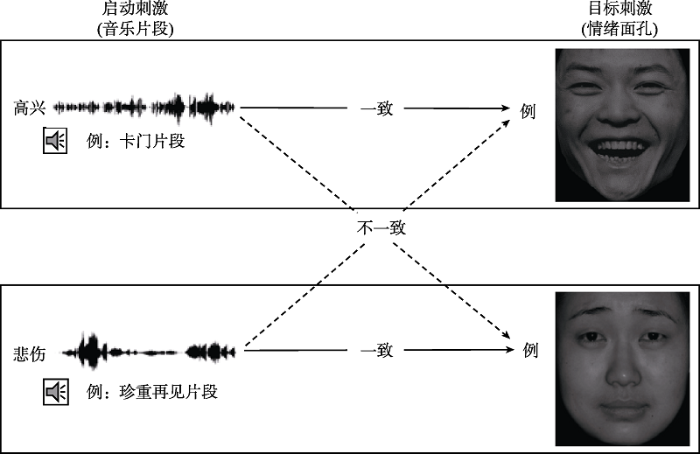

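On this basis, the present study builds on behavioral findings and uses electrophysiological methods to examine how lyrics affect the processing of musical emotion. Experiment 1 used a behavioral paradigm to test whether listeners can process the emotion conveyed by music with and without lyrics. If Experiment 1 showed that listeners can process musical emotion regardless of lyrics, Experiment 2 would then use electrophysiological measures to examine whether the brain processes the emotion of these two types of music differently.

Both experiments used the affective priming paradigm. The musical stimuli were 120 excerpts sung by a trained vocalist in two conditions. In the lyrics condition the excerpts were sung with Chinese lyrics, and in the no-lyrics condition they were sung on the nonsense syllable "la". Music from both conditions served as primes. Face pictures whose emotion was congruent or incongruent with the valence of the music served as targets. If music primes the processing of emotional faces, this would show that listeners can process the emotional information the music conveys. If the pattern of emotional processing differs between the lyrics and no-lyrics conditions, this would show that lyrics affect the processing of musical emotion.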

2 Experiment 1: A behavioral study of how lyrics affect the processing of musical emotion

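Experiment 1 examined at the behavioral level whether listeners can process the emotional information of music with and without lyrics. To rule out effects of familiarity, all musical stimuli were excerpts from European operas. The participants were ordinary university students without professional music training. Chinese university students mainly listen to pop music in daily life and seldom seek out European opera. To rule out effects of timbre, all stimuli were sung by a human voice. In the no-lyrics version the singer sang on the nonsense syllable "la", and in the lyrics version the singer sang the Chinese lyrics (taken from published Chinese translations). To ensure the validity of the two versions, we ran three pretests (see Stimuli and procedure below). If music both with and without lyrics primes the processing of emotional faces, listeners can process the emotional information conveyed by both types of music.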

2.1 Method

2.1.1 Participants

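Forty ordinary university students without professional music training volunteered for this experiment. All were right-handed and had normal hearing and normal or corrected-to-normal vision. None had a history of neurological or psychiatric disorders, and all signed informed consent before the experiment. Data from 8 participants were excluded because they misunderstood the instructions, leaving 32 valid participants (age 24.47 ± 1.65 years, 15 male).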

2.1.2 Stimuli and procedure

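The 120 original musical stimuli were excerpts from European operas, half expressing happiness and half expressing sadness. All were sung by one vocalist with 18 years of professional bel canto training. Each excerpt was sung both with Chinese lyrics and on the nonsense syllable "la", which yielded a lyrics version and a no-lyrics version. Before recording, the vocalist planned the performance of each excerpt carefully so that the two versions would match on four aspects:

- dynamics: how the performer shapes changes in loudness;
- rubato: the performer's free variation of tempo within a certain range;
- phrasing: where phrases are divided and where the singer breathes;
- overall performance quality: the overall quality of the sound.

The recordings were edited with Adobe Audition CS6 (Adobe Systems Inc.) and GoldWave. The stimuli had a mean duration of 17 s (range 10–25 s). All were mono, sampled at 22.05 kHz with 16-bit resolution. Mean loudness was normalized to −7 dB, and each stimulus ended with a 1 s fade-out.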
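The normalization and fade-out steps can be sketched in Python. This is a minimal sketch and not the Adobe Audition or GoldWave procedure the authors used. It makes two assumptions that the text does not state: "mean loudness of −7 dB" is read as an RMS level relative to digital full scale (dBFS), and the 1 s fade-out is a linear ramp. All function names are hypothetical.

```python
import math

def rms_dbfs(samples):
    """RMS level of float samples in [-1, 1], in dB relative to full scale."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms)

def normalize_rms(samples, target_db=-7.0):
    """Scale samples so that their RMS level equals target_db (assumed dBFS).
    Note: this does not clip, so peaks may exceed 1.0 for loud targets."""
    gain = 10 ** ((target_db - rms_dbfs(samples)) / 20)
    return [s * gain for s in samples]

def fade_out(samples, sample_rate=22050, fade_s=1.0):
    """Apply an (assumed) linear fade-out over the last fade_s seconds."""
    n_fade = min(len(samples), int(round(fade_s * sample_rate)))
    out = list(samples)
    start = len(out) - n_fade
    for i in range(n_fade):
        # gain falls from just below 1 to exactly 0 at the final sample
        out[start + i] *= 1 - (i + 1) / n_fade
    return out

# Synthetic 2 s, 440 Hz test tone at 22.05 kHz (not a study stimulus).
sr = 22050
tone = [0.1 * math.sin(2 * math.pi * 440 * n / sr) for n in range(2 * sr)]
stim = fade_out(normalize_rms(tone, -7.0), sr, 1.0)
```

Normalizing before fading means the reported level describes the un-faded signal. The order of these two steps in the authors' workflow is not stated.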

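To ensure the validity of the materials, we ran three pretests.

The first pretest ensured that the two versions did not differ significantly in performance quality or manner. Sixteen music majors (each with 18 years of professional music training) rated how consistent the lyrics and no-lyrics versions were in rubato, dynamics, phrasing and overall performance quality (1 = very different, 4 = uncertain, 7 = very similar). Only stimuli with a mean score above 4 were retained.

The second pretest ensured that listeners could hear the lyrics in the lyrics version clearly. Sixteen ordinary university students who did not take part in the main experiment rated the intelligibility of the lyrics (1 = unclear, 3 = uncertain, 5 = clear). Only stimuli with a mean score above 4 were retained. After these two pretests, 80 stimuli in each of the lyrics and no-lyrics versions met the criteria.

The third pretest ensured that each prime and target were clearly either congruent or incongruent in emotional valence. We selected 80 sad and 80 happy faces from the Chinese Facial Affective Picture System (Gong, Huang, Wang, & Luo, 2011) as candidate targets, half male and half female. The pictures were resized in Adobe Photoshop CS to 102 × 768 pixels at 16-bit resolution. Each of the 160 musical stimuli was presented twice, once paired with an emotionally congruent face and once with an incongruent face, yielding 320 music-face pairs. Sixteen ordinary university students who did not take part in the main experiment rated the congruency of each pair (1 = very incongruent, 5 = uncertain, 9 = very congruent). Pairs scoring above 7 were treated as emotionally congruent, and pairs scoring below 3 as emotionally incongruent. In the end, 60 stimuli in each of the lyrics and no-lyrics prime conditions met the criteria. These formed four conditions with 60 pairs each: lyrics-congruent, lyrics-incongruent, no-lyrics-congruent and no-lyrics-incongruent (see Figure 1).

We ran a 2 (congruency: congruent, incongruent) × 2 (lyrics: with, without) repeated-measures ANOVA on the congruency ratings of these pairs. The main effect of congruency was significant, F(1, 59) = 2318.45, p < 0.001, ηp² = 0.98. Congruent pairs were rated significantly higher than incongruent pairs both for music with lyrics (congruent: M = 7.37, SD = 0.53; incongruent: M = 2.70, SD = 0.57) and for music without lyrics (congruent: M = 7.33, SD = 0.55; incongruent: M = 2.66, SD = 0.56). No other effects were significant (ps > 0.09). Thus, the congruent and incongruent conditions differed whether or not the music had lyrics.

For the lyrics stimuli retained after the third pretest, we also computed the mean lyric intelligibility (M = 4.42, SD = 0.23). We also computed the consistency between the lyrics and no-lyrics versions in expressive performance and overall quality: rubato (5.44 ± 0.48), dynamics (5.56 ± 0.47), phrasing (5.56 ± 0.47) and overall performance quality (5.52 ± 0.44).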
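The three screening rules above are simple threshold filters. The sketch below is illustrative only. The data layout (a dict from a hypothetical stimulus ID to a list of raters' scores) is an assumption, and so is applying the cut-off to the mean over all ratings a stimulus received. Only the cut-offs come from the text: a mean above 4 on the 7-point consistency and 5-point intelligibility scales, and a pair mean above 7 (congruent) or below 3 (incongruent) on the 9-point congruency scale.

```python
from statistics import mean

def keep_stimulus(ratings, cutoff=4.0):
    """Pretests 1 and 2: keep a stimulus only if its mean rating exceeds cutoff."""
    return mean(ratings) > cutoff

def pair_is_valid(ratings, intended):
    """Pretest 3: a pair intended as congruent must average above 7 on the
    9-point scale; a pair intended as incongruent must average below 3."""
    m = mean(ratings)
    if intended == "congruent":
        return m > 7
    if intended == "incongruent":
        return m < 3
    raise ValueError("intended must be 'congruent' or 'incongruent'")

# Hypothetical ratings from four raters (the study used 16 per pretest).
consistency = {"aria_01": [5, 6, 5, 4], "aria_02": [3, 4, 4, 3]}
kept = [sid for sid, r in consistency.items() if keep_stimulus(r)]
```

Here `kept` contains only `"aria_01"`, because `"aria_02"` averages 3.5.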

Figure 1

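The 240 pairs were distributed across two stimulus lists using a Latin square. Each list had 120 trials covering all four conditions, and each participant completed only one list. Stimuli were presented in a pseudorandom order, with at least 8 trials between repetitions of the same prime or target. Each trial began with a black fixation point shown for 1000 ms. The prime music was then played through Philips SHM1900 headphones, and the emotional face appeared when the music ended. Participants judged the emotion of the face as quickly and accurately as possible, pressing F for happy and J for sad. The mapping between emotion (happy/sad) and key (F/J) was counterbalanced across participants. After responding, participants pressed the space bar to start the next trial. Four practice trials preceded the main experiment to familiarize participants with the procedure.

To further rule out familiarity effects, participants were asked after the main experiment to report the titles of the excerpts they had heard. Reporting at least one keyword of a title was taken to indicate familiarity with that excerpt. No participant could report a single keyword from any of the titles.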
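The list construction and pseudorandom ordering can be implemented in several ways, and one possibility is sketched below. It rests on several assumptions because the text does not describe the procedure. Each prime is assumed to appear in one congruent and one incongruent pair. The Latin square is assumed to alternate, within each lyrics condition, which list receives a prime's congruent pair. The ordering is found by greedy random construction with restarts. The trial dictionaries and their field names are hypothetical.

```python
import random

def latin_square_lists(pairs):
    """Split prime-target pairs into two lists. Within each lyrics condition,
    sorted primes alternate which list gets their congruent pair, so every
    prime appears once per list and each list is balanced across conditions."""
    lists = ([], [])
    for lyrics in (True, False):
        primes = sorted({p["prime"] for p in pairs if p["lyrics"] == lyrics})
        for i, prime in enumerate(primes):
            for p in pairs:
                if p["prime"] == prime:
                    offset = 0 if p["congruent"] else 1
                    lists[(i + offset) % 2].append(p)
    return lists

def pseudorandomize(trials, min_gap=8, rng=None, max_restarts=1000):
    """Order trials so that at least min_gap other trials separate any two
    trials that share a prime or a target (greedy construction with restarts)."""
    rng = rng or random.Random()
    for _ in range(max_restarts):
        remaining = list(trials)
        order = []
        while remaining:
            recent = order[-min_gap:] if min_gap > 0 else []
            primes = {t["prime"] for t in recent}
            targets = {t["target"] for t in recent}
            options = [t for t in remaining
                       if t["prime"] not in primes and t["target"] not in targets]
            if not options:
                break  # dead end: restart with a fresh random order
            pick = rng.choice(options)
            order.append(pick)
            remaining.remove(pick)
        else:
            return order
    raise RuntimeError("no valid order found; relax min_gap or add restarts")

# Hypothetical example: 4 primes per lyrics condition ("L" = lyrics,
# "N" = no lyrics), each with one congruent and one incongruent face.
pairs = [
    {"prime": f"{c}{i}", "target": f"{c}{i}{k}",
     "lyrics": c == "L", "congruent": k == "con"}
    for c in ("L", "N") for i in range(4) for k in ("con", "inc")
]
list_a, list_b = latin_square_lists(pairs)
order_a = pseudorandomize(list_a, min_gap=8, rng=random.Random(1))
```

A greedy builder is used instead of shuffling until a valid order appears, because blind shuffling rarely satisfies a gap constraint of 8 in a 120-trial list.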

2.2 Results and discussion

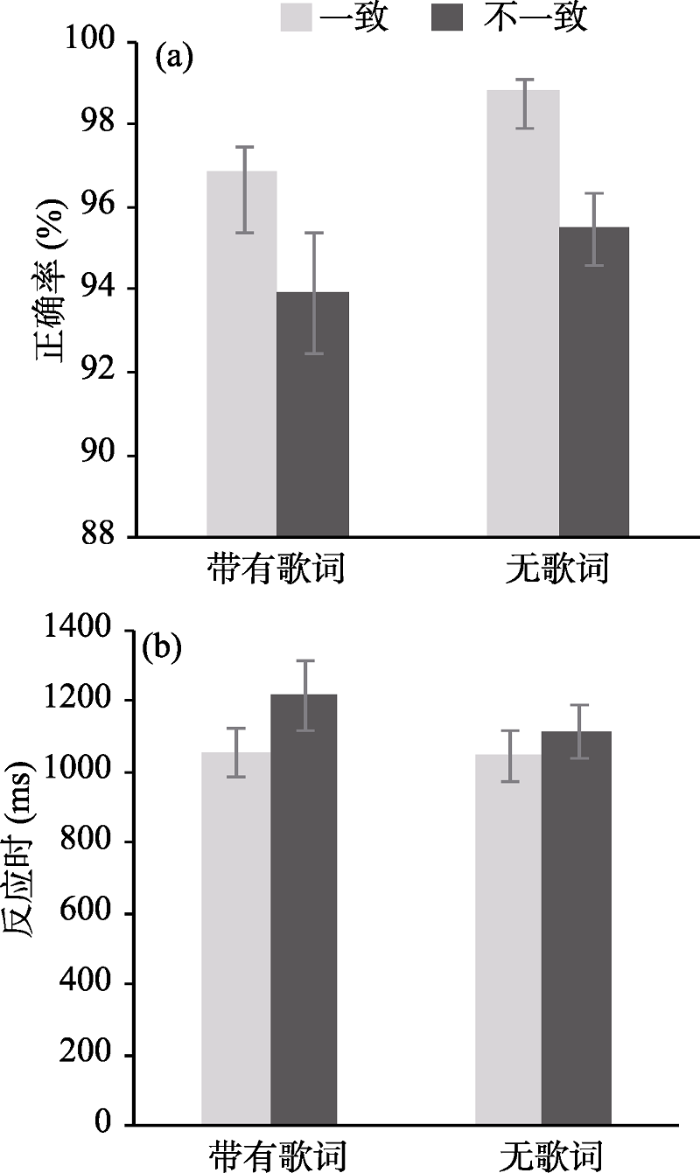

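Figure 2 shows the mean accuracy (a) and reaction times (b) in the four conditions. For accuracy, we ran a 2 (lyrics: with, without) × 2 (congruency: congruent, incongruent) repeated-measures ANOVA. The main effect of congruency was significant, F(1, 31) = 9.80, p = 0.004, ηp² = 0.24: accuracy was higher in the congruent condition (M = 97.86%, SD = 2.74%) than in the incongruent condition (M = 94.71%, SD = 6.79%). The main effect of lyrics was also significant, F(1, 31) = 7.64, p = 0.01, ηp² = 0.20: accuracy was higher for music without lyrics (M = 97.17%, SD = 4.03%) than for music with lyrics (M = 95.40%, SD = 6.39%). The interaction was not significant (p > 0.05).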

Figure 2

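For reaction times, we ran the same 2 (lyrics: with, without) × 2 (congruency: congruent, incongruent) repeated-measures ANOVA. The main effect of congruency was significant, F(1, 31) = 14.38, p = 0.001, ηp² = 0.32: reaction times were shorter in the congruent condition (M = 1051.83 ms, SD = 389.35 ms) than in the incongruent condition (M = 1166.45 ms, SD = 503.22 ms). The main effect of lyrics was also significant, F(1, 31) = 4.42, p = 0.04, ηp² = 0.13: reaction times were longer for music with lyrics (M = 1136.59 ms, SD = 486.83 ms) than for music without lyrics (M = 1081.69 ms, SD = 415.88 ms). The lyrics × congruency interaction was not significant (p > 0.05).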
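In a 2 × 2 within-subject design like this one, every effect has one numerator degree of freedom. Its F statistic therefore equals the squared one-sample t statistic of a per-participant contrast score. Partial eta squared then follows as ηp² = F·df_effect / (F·df_effect + df_error). The sketch below illustrates this identity, but it is not necessarily how the authors computed their statistics. The cell labels (`LC`, `LI`, `NC`, `NI`) and data layout are assumptions. p values are omitted because the Python standard library has no F or t distribution.

```python
from statistics import mean, variance

def contrast_f(scores):
    """F(1, n-1) for a one-df within-subject effect, computed from
    per-participant contrast scores: F = t^2 with t = mean / (sd / sqrt(n))."""
    n = len(scores)
    return n * mean(scores) ** 2 / variance(scores)

def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its dfs."""
    return f * df_effect / (f * df_effect + df_error)

def rm_anova_2x2(cells):
    """cells: one dict per participant with cell means under keys
    'LC', 'LI', 'NC', 'NI' (lyrics/no lyrics x congruent/incongruent).
    Returns {effect: (F, df_error, partial eta squared)}."""
    contrasts = {
        "congruency": lambda c: (c["LC"] + c["NC"]) / 2 - (c["LI"] + c["NI"]) / 2,
        "lyrics": lambda c: (c["LC"] + c["LI"]) / 2 - (c["NC"] + c["NI"]) / 2,
        "interaction": lambda c: (c["LC"] - c["LI"]) - (c["NC"] - c["NI"]),
    }
    df_error = len(cells) - 1
    out = {}
    for name, contrast in contrasts.items():
        f = contrast_f([contrast(c) for c in cells])
        out[name] = (f, df_error, partial_eta_squared(f, 1, df_error))
    return out

# The reported accuracy effect of congruency, F(1, 31) = 9.80:
print(round(partial_eta_squared(9.80, 1, 31), 2))  # 0.24
```

The same conversion can be used to check the other reported effect sizes against their F values.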

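Consistent with the behavioral results of previous studies (Goerlich, Witteman, Aleman, & Martens, 2011; Wang & Qin, 2016; Zhang, Li, Gold, & Jiang, 2010), listeners in this study responded faster and more accurately in the emotionally congruent condition than in the incongruent condition. This indicates that music both with and without lyrics primed the processing of emotional faces. In other words, listeners can process the emotion of music with and without lyrics (Morton & Trehub, 2007).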

3 Experiment 2: An ERP study of how lyrics affect the processing of musical emotion

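Experiment 1 showed that listeners can process the emotional information in music regardless of whether it has lyrics. Behavioral experiments, however, cannot capture how the brain's processing of musical emotion unfolds over time. Experiment 2 therefore used electrophysiological methods to investigate further the neural mechanisms by which lyrics affect the processing of musical emotion. Only one previous study has examined these mechanisms, using functional magnetic resonance imaging (fMRI). Unfortunately, that study used vocal songs with lyrics and instrumental pieces without lyrics as stimuli, and this manipulation cannot rule out the role of timbre in processing musical emotion (Brattico et al., 2011). Indeed, many studies show that timbre strongly influences the processing of musical emotion (Behrens & Green, 1993; Hailstone et al., 2009; Franco, Chew, & Swaine, 2017). Once timbre is controlled, do lyrics still affect the neural processing of musical emotion? Experiment 2 addresses this question.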

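Previous studies using the affective priming paradigm have focused mainly on the N400 and the late positive component (LPC). Emotionally incongruent conditions elicit a larger N400 than congruent conditions. This component reflects the detection of emotional conflict (Schirmer, Kotz, & Friederici, 2002; Zhang, Lawson, Guo, & Jiang, 2006) and the integration of emotional information (Kamiyama, Abla, Iwanaga, & Okanoya, 2013; Zhang et al., 2010). Similarly, emotionally incongruent conditions elicit a larger LPC than congruent conditions (Herring, Taylor, White, & Crites, 2011; Werheid, Alpay, Jentzsch, & Sommer, 2005; Zhang et al., 2010). This indicates that processing emotional incongruence requires more attention (Zhang, Kong, & Jiang, 2012; Zhang et al., 2010).

Listeners can process the emotional information conveyed by music both with and without lyrics (Morton & Trehub, 2007), and lyrics affect their processing of musical emotion (Ali & Peynircioğlu, 2006; Stratton & Zalanowski, 1994). We therefore expected priming effects, that is, N400 or LPC effects, for both music with lyrics and music without lyrics. We also expected that these priming effects might differ between the two prime conditions.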

3.1 Method

3.1.1 Participants

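Participants were 20 ordinary university students without professional music training. Four were excluded because of excessive EEG artifacts, leaving 16 valid participants (age 23.88 ± 1.36 years, 7 male). All were right-handed and had normal hearing and normal or corrected-to-normal vision. None had a history of psychiatric illness or brain injury. All signed informed consent and were paid after the experiment.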

3.1.2 Stimuli and procedure

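The stimuli were the same as in Experiment 1. To keep motor responses from contaminating the EEG signal, the procedure differed slightly from that of Experiment 1. After the music ended, the emotional face stayed on screen for a fixed 1000 ms instead of disappearing at the key press. ERP event markers were time-locked to face onset. A response screen appeared immediately after the face disappeared. Participants then judged whether the emotions expressed by the music and the face were congruent, pressing F for congruent and J for incongruent. The mapping between congruency (congruent/incongruent) and key (F/J) was counterbalanced across participants. Responses were not time-limited, and after responding participants pressed the space bar to start the next trial. Six practice trials preceded the main experiment to familiarize participants with the procedure.

As in Experiment 1, participants were asked after the main experiment to report the titles of the excerpts, to further rule out interference from familiarity. No participant could report a single keyword from any of the titles.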

3.1.3 EEG recording and data analysis

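EEG was recorded with a Biosemi Active Two 64-channel electrode cap at a sampling rate of 2048 Hz. External electrodes were placed at the outer canthi of both eyes to record the horizontal electrooculogram, and above and below the left eye to record the vertical electrooculogram. Electrode-scalp impedance was kept below 20 kΩ during recording.

Offline, the data were re-referenced to the average of the two mastoids and band-pass filtered at 0.1–30 Hz (24 dB/oct). Ocular artifacts were corrected automatically with the BESA software. Epochs extended from 200 ms before to 1000 ms after target onset, and the 200 ms before target onset served as the baseline. Trials with amplitude changes exceeding ±120 μV were removed, as were trials with incorrect responses.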
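The epoching, baseline-correction and rejection steps can be sketched for a single channel. This is an illustrative sketch, not BESA's implementation. It assumes that the continuous signal is a list of microvolt values and that the ±120 μV criterion applies to the baseline-corrected amplitude. In practice a trial would be rejected if any channel exceeds the threshold. The function names are hypothetical.

```python
SR = 2048  # sampling rate (Hz) reported for the recording

def ms_to_samples(ms, sr=SR):
    """Convert a duration in ms to a whole number of samples."""
    return int(round(ms * sr / 1000))

def epoch(signal, onset, pre_ms=200, post_ms=1000, sr=SR):
    """Samples from pre_ms before to post_ms after the target-onset index."""
    start = onset - ms_to_samples(pre_ms, sr)
    stop = onset + ms_to_samples(post_ms, sr)
    if start < 0 or stop > len(signal):
        raise ValueError("epoch extends beyond the recording")
    return signal[start:stop]

def baseline_correct(ep, pre_ms=200, sr=SR):
    """Subtract the mean of the pre-target interval from every sample."""
    n_pre = ms_to_samples(pre_ms, sr)
    base = sum(ep[:n_pre]) / n_pre
    return [v - base for v in ep]

def is_artifact(ep, threshold_uv=120.0):
    """True if any baseline-corrected sample exceeds +/- threshold_uv."""
    return any(abs(v) > threshold_uv for v in ep)

def clean_epochs(signal, onsets, correct):
    """Baseline-corrected epochs for correct, artifact-free trials only."""
    kept = []
    for onset, ok in zip(onsets, correct):
        if not ok:  # trials with incorrect responses are discarded
            continue
        ep = baseline_correct(epoch(signal, onset))
        if not is_artifact(ep):
            kept.append(ep)
    return kept
```

At 2048 Hz the 200 ms baseline is 410 samples and the 1000 ms post-target interval is 2048 samples.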

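We selected electrodes in nine regions of interest (ROIs), defined by hemisphere and region:

- left anterior: FP1, AF3, F3, F5, F7
- midline anterior: FPz, AFz, Fz
- right anterior: FP2, AF4, F4, F6, F8
- left central: C1, CP1, FC3, C3, CP3
- midline central: FCz, Cz, CPz
- right central: C2, CP2, FC4, C4, CP4
- left posterior: P3, P5, PO3, PO7, O1
- midline posterior: Pz, POz, Oz
- right posterior: P4, P6, PO4, PO8, O2

Amplitudes were averaged over all electrodes in each ROI, and separate repeated-measures ANOVAs were run for the midline and lateral sites. In the midline analysis, congruency (congruent, incongruent), lyrics (with, without) and region (anterior, central, posterior) were within-subject factors. The lateral analysis added hemisphere (left, right) as a further within-subject factor. We report only significant or marginally significant results involving the main experimental variables (lyrics, congruency). Significant interactions were followed by simple-effects analyses, and all pairwise comparisons were Bonferroni-corrected. When the sphericity assumption was violated, p values were Greenhouse-Geisser corrected.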
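The ROI definition above maps directly onto a lookup table. The sketch below averages per-electrode amplitudes within each ROI, separates the midline ROIs from the lateral ones for the two ANOVAs, and applies a Bonferroni adjustment to a set of p values. The electrode lists are copied from the text. The data format (a dict from electrode label to amplitude) and the function names are assumptions.

```python
# (hemisphere, region) -> electrodes, as listed in the text
ROIS = {
    ("left", "anterior"): ["FP1", "AF3", "F3", "F5", "F7"],
    ("midline", "anterior"): ["FPz", "AFz", "Fz"],
    ("right", "anterior"): ["FP2", "AF4", "F4", "F6", "F8"],
    ("left", "central"): ["C1", "CP1", "FC3", "C3", "CP3"],
    ("midline", "central"): ["FCz", "Cz", "CPz"],
    ("right", "central"): ["C2", "CP2", "FC4", "C4", "CP4"],
    ("left", "posterior"): ["P3", "P5", "PO3", "PO7", "O1"],
    ("midline", "posterior"): ["Pz", "POz", "Oz"],
    ("right", "posterior"): ["P4", "P6", "PO4", "PO8", "O2"],
}

def roi_means(amplitudes):
    """Average the amplitude of all electrodes in each ROI."""
    return {roi: sum(amplitudes[e] for e in chans) / len(chans)
            for roi, chans in ROIS.items()}

def split_midline_lateral(means):
    """Midline ROIs (keyed by region) for the 3-level region factor; lateral
    ROIs (keyed by hemisphere and region) for the analysis with hemisphere."""
    midline = {region: v for (side, region), v in means.items() if side == "midline"}
    lateral = {key: v for key, v in means.items() if key[0] != "midline"}
    return midline, lateral

def bonferroni(p_values):
    """Bonferroni-adjusted p values: multiply by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```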

3.2 Results and discussion

3.2.1 Behavioral results

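A 2 (lyrics: with, without) × 2 (congruency: congruent, incongruent) repeated-measures ANOVA on accuracy revealed no significant effects (ps > 0.05). Accuracy was 85.83% in the lyrics condition and 83.02% in the no-lyrics condition. This indicates that listeners performed the task attentively in both conditions.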

3.2.2 ERP results

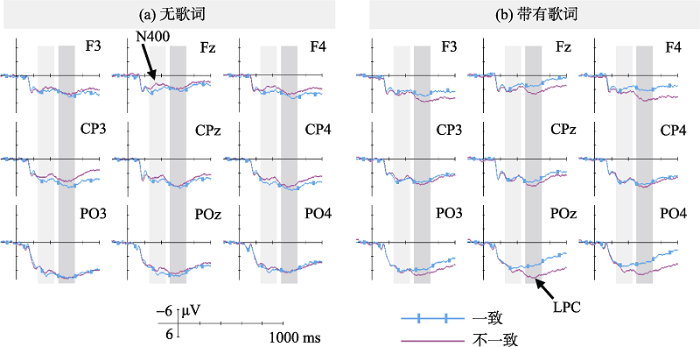

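Figure 3 shows the ERP waveforms for no-lyrics (a) and lyrics (b) music primes. Figure 4 shows the scalp topographies of the difference waves (incongruent minus congruent) for music without and with lyrics in the 250–450 ms (a) and 500–700 ms (b) time windows. Based on visual inspection and on previous findings (Daltrozzo & Schön, 2009; Herring et al., 2011; Kamiyama et al., 2013; Werheid et al., 2005; Zhang et al., 2010), we used 250–450 ms and 500–700 ms after target onset as the N400 and LPC time windows, respectively.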
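For baseline-corrected ERPs that begin 200 ms before target onset, the N400 and LPC measures are mean amplitudes within fixed windows. The difference wave is a sample-by-sample subtraction of the congruent from the incongruent waveform. The sketch below assumes the 2048 Hz sampling rate from the recording section. It also assumes that a window includes its start sample and excludes its end sample. The helper names and this boundary convention are assumptions, not details given in the text.

```python
SR = 2048             # Hz
EPOCH_START_MS = -200  # epochs begin 200 ms before target onset
WINDOWS_MS = {"N400": (250, 450), "LPC": (500, 700)}

def index_at(ms, sr=SR, start_ms=EPOCH_START_MS):
    """Sample index of a post-target latency within the epoch."""
    return int(round((ms - start_ms) * sr / 1000))

def window_mean(erp, window, sr=SR):
    """Mean amplitude from the window start (inclusive) to its end (exclusive)."""
    lo, hi = (index_at(t, sr) for t in window)
    segment = erp[lo:hi]
    return sum(segment) / len(segment)

def difference_wave(incongruent, congruent):
    """Incongruent minus congruent, sample by sample."""
    return [a - b for a, b in zip(incongruent, congruent)]
```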

Figure 3

Figure 4

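We analyzed mean N400 amplitude separately for midline and lateral sites.

In the midline analysis, a 2 (lyrics: with, without) × 2 (congruency: congruent, incongruent) × 3 (region: anterior, central, posterior) repeated-measures ANOVA showed a significant lyrics × congruency interaction, F(1, 15) = 8.48, p = 0.01, ηp² = 0.36. Simple-effects analyses showed that, with no-lyrics primes, incongruent trials elicited a larger N400 than congruent trials, F(1, 15) = 5.17, p = 0.04, ηp² = 0.26. With lyrics primes, N400 amplitude did not differ significantly between congruent and incongruent trials, F(1, 15) = 2.90, p = 0.11.

In the lateral analysis, a 2 (lyrics) × 2 (congruency) × 3 (region) × 2 (hemisphere: left, right) repeated-measures ANOVA also showed a significant lyrics × congruency interaction, F(1, 15) = 7.80, p = 0.02, ηp² = 0.34. With no-lyrics primes, incongruent trials again elicited a larger N400 than congruent trials, F(1, 15) = 6.81, p = 0.02, ηp² = 0.31. With lyrics primes there was no significant difference, F(1, 15) = 2.18, p = 0.16.

No other effects involving lyrics or congruency were significant in either analysis (ps > 0.35).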

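Similarly, we analyzed mean LPC amplitude separately for midline and lateral sites.

In the midline analysis, a 2 (lyrics: with, without) × 2 (congruency: congruent, incongruent) × 3 (region: anterior, central, posterior) repeated-measures ANOVA showed a significant lyrics × congruency interaction, F(1, 15) = 7.47, p = 0.02, ηp² = 0.33. Simple-effects analyses showed that, with lyrics primes, incongruent trials elicited a larger LPC than congruent trials, F(1, 15) = 6.90, p = 0.02, ηp² = 0.32. With no-lyrics primes, LPC amplitude did not differ significantly between incongruent and congruent trials, F(1, 15) = 0.78, p = 0.39.

In the lateral analysis, a 2 (lyrics) × 2 (congruency) × 3 (region) × 2 (hemisphere: left, right) repeated-measures ANOVA also showed a significant lyrics × congruency interaction, F(1, 15) = 6.20, p = 0.03, ηp² = 0.29. With lyrics primes, incongruent trials elicited a larger LPC than congruent trials, F(1, 15) = 5.17, p = 0.04, ηp² = 0.26. With no-lyrics primes there was no significant difference, F(1, 15) = 0.82, p = 0.38.

No other effects involving lyrics or congruency were significant in either analysis (ps > 0.21).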

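The ERP results of Experiment 2 show that music without lyrics elicited an N400 effect in the 250–450 ms window, whereas music with lyrics elicited an LPC effect in the 500–700 ms window. Listeners could therefore evaluate the emotional relationship between prime and target whether or not the music had lyrics. However, the time course of emotional processing differed between the two types of music: listeners processed the emotion of music with lyrics later than that of music without lyrics.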

4 General discussion

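Across two experiments, this study examined the neural mechanisms by which lyrics affect the processing of musical emotion. Behaviorally, listeners responded faster and more accurately on congruent than on incongruent trials regardless of lyrics. This shows that they can process the emotional information conveyed by music with or without lyrics. The ERP results showed that the emotion of both types of music produced priming effects, but with different components. Music without lyrics elicited an N400 effect in the 250–450 ms window, whereas music with lyrics elicited an LPC effect in the 500–700 ms window. This suggests that lyrics alter the time course of musical emotion processing, so that the emotion of music with lyrics is processed later than that of music without lyrics.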

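The classic N400 is associated with semantic processing. Semantically incongruent items typically elicit a larger N400 than congruent ones (Kutas & Hillyard, 1980), and the component reflects conceptual integration in the brain (Brown & Hagoort, 1993; Kutas & Federmeier, 2000, 2011). Recent studies show that in the affective priming paradigm, emotionally incongruent conditions also elicit a larger N400 than congruent ones (Schirmer et al., 2002; Zhang et al., 2006, 2010). This indicates that integrating emotional information requires more cognitive resources (Kamiyama et al., 2013; Zhang et al., 2010). Incongruent emotional information also elicits a larger N400 when the primes are short musical excerpts (Daltrozzo & Schön, 2009; Goerlich et al., 2011; Koelsch et al., 2004) or chords (Steinbeis & Koelsch, 2011).

In the present study, processing the emotion of music without lyrics elicited an N400 effect, which arises mainly from the activation of emotional meaning (Daltrozzo & Schön, 2009; Eder, Leuthold, Rothermund, & Schweinberger, 2011). Indeed, the prime pre-activates emotional representations related to the target at the conceptual level, which reduces the N400 in the congruent condition (Goerlich et al., 2012). In the incongruent condition, the emotional representations related to the target are not pre-activated. Integrating the emotional information of the music without lyrics with that of the face therefore demands more cognitive resources, which shows up as a larger N400.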

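Consistent with previous affective priming studies (Herring et al., 2011; Wang & Qin, 2016; Werheid et al., 2005; Zhang et al., 2010, 2012), processing the emotion of music with lyrics elicited an LPC effect in the present study. This may reflect the allocation of attentional resources. Studies have found that the larger LPC in incongruent conditions of the affective priming paradigm results from greater attentional engagement (Herring et al., 2011; Werheid et al., 2005; Zhang et al., 2010).

The LPC has also been linked to integrative processing (Juottonen, Revonsuo, & Lang, 1996). Memory studies of language and music have found that when verbal and melodic information are presented together, listeners integrate them and process them as a whole rather than separately (Serafine, Davidson, Crowder, & Repp, 1986). In the present study, when the music had lyrics, listeners needed to integrate melody and lyrics. They may then have matched this integrated whole against the emotional face, which elicited the LPC effect.

Listeners processed the emotion of music with lyrics later than that of music without lyrics, as the latency difference between the LPC and N400 effects shows. Even so, the presence of priming effects means that listeners correctly understood and processed the emotional information conveyed by music both with and without lyrics.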

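As two distinct ERP components, the N400 and the LPC are generally thought to reflect different cognitive processes (Ibáñez et al., 2010; Juottonen et al., 1996; Rohaut & Naccache, 2017). Moreover, processing the emotional information of music with and without lyrics may involve different neural mechanisms (Brattico et al., 2011). We argue that both components reflect integrative processing to some extent, but the integration each one indexes should differ.

The emotion duality model classifies emotions by their origin. It holds that two evaluative systems operate when an individual responds emotionally to a stimulus: an automatic evaluating system and a reflective evaluating system (Jarymowicz & Imbir, 2015). Reflective responses are grounded in language and cannot arise without verbal processing (Imbir, Spustek, & Żygierewicz, 2016). The presence or absence of linguistic information is precisely what distinguishes music with lyrics from music without lyrics in this study. The LPC here may therefore reflect a reflective form of integration, whereas the N400 may reflect a relatively automatic form of integration.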

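The two experiments show that the involvement of lyrics delays listeners' processing of musical emotion, which supports a view held in the philosophy of music. Philosophers of music hold that pure music (music without lyrics) conveys emotion more quickly and directly than music with lyrics (Yu, 2000; Zhang & Wang, 1992). Language conveys emotional information explicitly through a propositional system (Erickson, 2005; Jankélévitch & Abbate, 2003). Pure music lacks language-like semantics, so in conveying emotion it can skip the translation step that the propositional system requires. This allows pure music to convey emotional information more quickly and directly.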

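Our results bear on the relationship between language and music in emotional processing. Consistent with earlier behavioral findings (Ali & Peynircioğlu, 2006; Mori & Iwanaga, 2013; Stratton & Zalanowski, 1994), they show that lyrics affect the processing of musical emotion. This study assessed the effect of lyrics by comparing musical emotion processing with and without lyrics. The design therefore cannot directly answer whether language and music interact in emotional processing. Even so, the results suggest that the emotional processing of language and music may share specific mechanisms.

Unlike the no-lyrics condition, the lyrics condition elicited an LPC effect, which can be attributed mainly to the presence of the lyrics. In the lyrics condition, lyrics and melody are presented at the same time. Since the lyrics affect how the music's emotion is processed, the emotional processing of language and music may interact at this point. Future research should test this possibility further.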

5 Conclusion

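This study shows that listeners can process the emotional information conveyed by music regardless of whether it has lyrics. However, music without lyrics elicited an N400 effect in the 250–450 ms window, whereas music with lyrics elicited an LPC effect in the 500–700 ms window. This indicates that lyrics affect the time course of the brain's processing of musical emotion, with the emotion of music with lyrics processed later than that of music without lyrics. These findings provide some evidence for research on the relationship between music and language.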

References

Songs and emotions: Are lyrics and melodies equal partners? DOI: 10.1177/0305735606067168

The ability to identify emotional content of solo improvisations performed vocally and on three different instruments. DOI: 10.1177/030573569302100102

A functional MRI study of happy and sad emotions in music with and without lyrics. DOI: 10.3389/fpsyg.2011.00308. PMID: 3227856

The processing nature of the N400: Evidence from masked priming. DOI: 10.1162/jocn.1993.5.1.34. PMID: 23972118

Conceptual processing in music as revealed by N400 effects on words and musical targets. DOI: 10.1162/jocn.2009.21113. PMID: 18823240

The descent of man and selection in relation to sex. London, UK: John Murray

Language, ineffability and paradox in music philosophy (Unpublished doctoral dissertation).

Preschoolers’ attribution of affect to music: A comparison between vocal and instrumental performance

Effect of musical accompaniment on attitude: The guitar as a prop for persuasion. DOI: 10.1111/j.1559-1816.1972.tb01286.x

Hearing feelings: Affective categorization of music and speech in alexithymia, an ERP study

The nature of affective priming in music and speech. DOI: 10.1162/jocn_a_00213. PMID: 22360592

Revision of the Chinese facial affective picture system. DOI: 10.3969/j.issn.1000-6729.2011.01.011

It's not what you play, it's how you play it: Timbre affects perception of emotion in music. DOI: 10.1080/17470210902765957. PMID: 2683716

Electrophysiological responses to evaluative priming: The LPP is sensitive to incongruity. DOI: 10.1037/a0022804. PMID: 21517156

Lyric text mining in music mood classification.

Gesture influences the processing of figurative language in non-native speakers: ERP evidence. DOI: 10.1016/j.neulet.2010.01.009. PMID: 20079804

Effects of valence and origin of emotions in word processing evidenced by event related potential correlates in a lexical decision task. DOI: 10.3389/fpsyg.2016.00271. PMID: 26973569

Parallels and nonparallels between language and music

DOI:10.1525/mp.2009.26.3.195

The parallels between language and music can be explored only in the context of (a) the differences between them, and (b) those parallels that are also shared with other cognitive capacities. The two differ in many aspects of structure and function, and, with the exception of the metrical grid, all aspects they share appear to be instances of more general capacities.

Toward a human emotions taxonomy (based on their automatic vs. reflective origin)

DOI:10.1177/1754073914555923

Certain emotional processes “bypass the will” and even awareness, whereas others arise due to the deliberative evaluation of objects, states, and events. It is important to differentiate between the automatic versus reflective origins of emotional processes, and sensory versus conceptual bases of diverse negative and positive emotions. A taxonomy of emotions based on different origins is presented. This taxonomy distinguishes between negative and positive automatic versus reflective emotions. The automatic emotions are connected with the (a) homeostatic and (b) hedonistic regulatory mechanisms. The reflective emotions (uniquely human) are described in reference to deliberative processes and appraisals based on two types of conceptual and verbalized evaluative standards: (a) ideal self-standards and (b) general, axiological standards of good and evil.

Dissimilar age influences on two ERP waveforms (LPC and N400) reflecting semantic context effect

DOI:10.1016/0926-6410(96)00022-5

PMID:8883923

Age-related changes in semantic context effects were examined using late event-related brain potentials (ERPs). Auditory ERPs to semantically congruous and incongruous final words in spoken sentences were recorded in 16 children (aged 5–11 years) and 16 adults. Previous findings concerning age-related effects on N400 were replicated: the N400 effect was significantly larger in children than in adults. The main new finding was that a late positive component (LPC) following N400 and modulated by semantic context in adults was not found in children. Thus, the common generalization that semantic context effects decline with age holds only for ERP components occurring in the N400 time window or earlier. The cognitive function reflected by the semantic LPC we observed is not clear, but it seems to have a role different from that of the N400, although in adults the components often co-exist as an N400-LPC complex.

Interaction between musical emotion and facial expression as measured by event-related potentials

DOI:10.1016/j.neuropsychologia.2012.11.031

PMID:23220447

We examined the integrative process between emotional facial expressions and musical excerpts by using an affective priming paradigm. Happy or sad musical stimuli were presented after happy or sad facial images during electroencephalography (EEG) recordings. We asked participants to judge the affective congruency of the presented face-music pairs. The congruency of emotionally congruent pairs was judged more rapidly than that of incongruent pairs. In addition, the EEG data showed that incongruent musical targets elicited a larger N400 component than congruent pairs. Furthermore, these effects occurred in nonmusicians as well as musicians. In sum, emotional integrative processing of face-music pairs was facilitated in congruent music targets and inhibited in incongruent music targets; this process was not significantly modulated by individual musical experience. This is the first study on musical stimuli primed by facial expressions to demonstrate that the N400 component reflects the affective priming effect.

Music, language and meaning: Brain signatures of semantic processing

DOI:10.1038/nn1197

PMID:14983184

Semantics is a key feature of language, but whether or not music can activate brain mechanisms related to the processing of semantic meaning is not known. We compared processing of semantic meaning in language and music, investigating the semantic priming effect as indexed by behavioral measures and by the N400 component of the event-related brain potential (ERP) measured by electroencephalography (EEG). Human subjects were presented visually with target words after hearing either a spoken sentence or a musical excerpt. Target words that were semantically unrelated to prime sentences elicited a larger N400 than did target words that were preceded by semantically related sentences. In addition, target words that were preceded by semantically unrelated musical primes showed a similar N400 effect, as compared to target words preceded by related musical primes. The N400 priming effect did not differ between language and music with respect to time course, strength or neural generators. Our results indicate that both music and language can prime the meaning of a word, and that music can, as language, determine physiological indices of semantic processing.

Electrophysiology reveals semantic memory use in language comprehension

DOI:10.1016/S1364-6613(00)01560-6

PMID:11115760

The physical energy that we refer to as a word, whether in isolation or embedded in sentences, takes its meaning from the knowledge stored in our brains through a lifetime of experience. Much empirical evidence indicates that, although this knowledge can be used fairly flexibly, it is functionally organized in semantic memory along a number of dimensions, including similarity and association. Here, we review recent findings using an electrophysiological brain component, the N400, that reveal the nature and timing of semantic memory use during language comprehension. These findings show that the organization of semantic memory has an inherent impact on sentence processing. The left hemisphere, in particular, seems to capitalize on the organization of semantic memory to pre-activate the meaning of forthcoming words, even if this strategy fails at times. In addition, these electrophysiological results support a view of memory in which world knowledge is distributed across multiple, plastic-yet-structured, largely modality-specific processing areas, and in which meaning is an emergent, temporally extended process, influenced by experience, context, and the nature of the brain itself.

Thirty years and counting: Finding meaning in the N400 component of the event related brain potential (ERP)

Reading between the lines: Event-related brain potentials during natural sentence processing

DOI:10.1016/0093-934X(80)90133-9

PMID:7470854

Event-related potentials (ERPs) were recorded from subjects as they silently read 160 different seven-word sentences, presented one word at a time. Each sentence was in itself a natural, meaningful phrase. Substantial intersubject variability was observed in the ERP waveshapes following the words. More than half of the subjects generated P3 components to word stimuli, but those who did showed similar responses to repeated control presentations of a single word. In addition, it was found that all but the first word in the sentence elicited an ERP with a significant left-greater-than-right asymmetry in the late positivity over temporo-parietal regions. The ERPs to the last words were associated with more late positivity than those to the preceding words. One quarter of the sentences, at random, ended with a word printed in a typeface that was different from that of the preceding words. This infrequent change in typeface elicited a complex ERP having three consistent late positive components.

Multimodal music mood classification using audio and lyrics. Paper presented at the Seventh International Conference on Machine Learning and Applications, Copenhagen, Denmark.

The genesis of music and language

DOI:10.2307/851912

song developed by fitting standardized, formulaic sequences of (speech) sounds to pre-existing melodies (1962). It is in fact no accident that pitch, which as melody in time represents the frequency in space, the essence of the phoneme, the contrastive segmental unit of speech.

The singing Neanderthals: The origins of music, language, mind and body

Pleasure generated by sadness: Effect of sad lyrics on the emotions induced by happy music

Children's judgements of emotion in song

DOI:10.1177/0305735607076445

Songs convey emotion by means of expressive performance cues (e.g. pitch level, tempo, vocal tone) and lyrics. Although children can interpret both types of cues, it is unclear whether they would focus on performance cues or salient verbal cues when judging the feelings of a singer. To investigate this question, we had 5- to 10-year-old children and adults listen to song fragments that combined emotive performance cues with meaningless syllables or with lyrics that had emotional implications. In both cases, listeners were asked to judge the singer's feelings from the sound of her voice. In the context of meaningless lyrics, children and adults successfully judged the singer's feelings from performance cues. When the lyrics were emotive, adults reliably judged the singer's feelings from performance cues, but children based their judgements on the lyrics. These findings have implications for children's interpretation of vocal emotion in general and sung performances in particular.

Musical emotions: Functions, origins, evolution

DOI:10.1016/j.plrev.2009.11.001

PMID:20374916

Theories of music origins and the role of musical emotions in the mind are reviewed. Most existing theories contradict each other, and cannot explain mechanisms or roles of musical emotions in workings of the mind, nor evolutionary reasons for music origins. Music seems to be an enigma. Nevertheless, a synthesis of cognitive science and mathematical models of the mind has been proposed describing a fundamental role of music in the functioning and evolution of the mind, consciousness, and cultures. The review considers ancient theories of music as well as contemporary theories advanced by leading authors in this field. It addresses one hypothesis that promises to unify the field and proposes a theory of musical origin based on a fundamental role of music in cognition and evolution of consciousness and culture. We consider a split in the vocalizations of proto-humans into two types: one less emotional and more concretely-semantic, evolving into language, and the other preserving emotional connections along with semantic ambiguity, evolving into music. The proposed hypothesis departs from other theories in considering specific mechanisms of the mind-brain, which required the evolution of music parallel with the evolution of cultures and languages. Arguments are reviewed that the evolution of language toward becoming the semantically powerful tool of today required emancipation from emotional encumbrances. The opposite, no less powerful mechanisms required a compensatory evolution of music toward more differentiated and refined emotionality. The need for refined music in the process of cultural evolution is grounded in fundamental mechanisms of the mind. This is why today's human mind and cultures cannot exist without today's music. The reviewed hypothesis gives a basis for future analysis of why different evolutionary paths of languages were paralleled by different evolutionary paths of music. Approaches toward experimental verification of this hypothesis in psychological and neuroimaging research are reviewed.

Disentangling conscious from unconscious cognitive processing with event-related EEG potentials

DOI:10.1016/j.neurol.2017.08.001

PMID:28843414

By looking for properties of consciousness, cognitive neuroscience studies have dramatically enlarged the scope of unconscious cognitive processing. This emerging knowledge inspired the development of new approaches allowing clinicians to probe and disentangle conscious from unconscious cognitive processes in non-communicating brain-injured patients both in terms of behaviour and brain activity. This information is extremely valuable in order to improve diagnosis and prognosis in such patients both at acute and chronic settings. Reciprocally, the growing observations coming from such patients suffering from disorders of consciousness provide valuable constraints to theoretical models of consciousness. In this review we chose to illustrate these recent developments by focusing on brain signals recorded with EEG at bedside in response to auditory stimuli. More precisely, we present the respective EEG markers of unconscious and conscious processing of two classes of auditory stimuli (sounds and words). We show that in both cases, conscious access to the corresponding representation (e.g.: auditory regularity and verbal semantic content) share a similar neural signature (P3b and P600/LPC) that can be distinguished from unconscious processing occurring during an earlier stage (MMN and N400). We propose a two-stage serial model of processing and discuss how unconscious and conscious signatures can be measured at bedside providing relevant information for both diagnosis and prognosis of consciousness recovery. These two examples emphasize how fruitful the bidirectional approach exploring cognition in healthy subjects and in brain-damaged patients can be.

On the nature of melody-text integration in memory for songs

DOI:10.1016/0749-596X(86)90025-2

In earlier experiments we found that the melodies of songs were better recognized when the words were those that had originally been heard with the melody than when they were different. Similarly, song texts were better recognized when sung with their original melodies. Some possible causes of this “integration effect” were investigated in the present experiments. Experiment 1 ruled out the hypothesis that integration was due to semantic connotations imposed on the melody by the words, since songs with nonsense texts yielded the same effect. Experiments 2 and 3 ruled out the possibility that the earlier results were caused by a decrement in recognition when a previously heard component is tested in an unfamiliar context. The results support the notion of an integrated memory representation for melody and text in songs.

Sex differentiates the role of emotional prosody during word processing

DOI:10.1016/S0926-6410(02)00108-8

PMID:12067695

The meaning of a speech stream is communicated by more than the particular words used by the speaker. For example, speech melody, referred to as prosody, also contributes to meaning. In a cross-modal priming study we investigated the influence of emotional prosody on the processing of visually presented positive and negative target words. The results indicate that emotional prosody modulates word processing and that the time-course of this modulation differs for males and females. Women show behavioural and electrophysiological priming effects already with a small interval between the prosodic prime and the visual target word. In men, however, similar effects of emotional prosody on word processing occur only for a longer interval between prime and target. This indicates that women make an earlier use of emotional prosody during word processing as compared to men.

Effects of melody and lyrics on mood and memory

DOI:10.2466/pms.1997.85.1.31

PMID:9293553

137 undergraduate Le Moyne College students volunteered in a study on music and its effects on mood and memory. In a 2 x 3 between-subjects design, there were 2 lyric conditions (Happy and Sad Lyrics) and 3 music conditions (No Music, Happy Music, and Sad Music). Participants were asked to listen to instrumental music or mentally to create a melody as they read lyrics to themselves. The study tested cued-recall, self-reported mood state and psychological arousal. Analysis suggested that mood of participants was influenced by the music played, not the lyrics. Results also showed those exposed to No Music had the highest score on the recall test. Personal relevance to the lyrics was not correlated with memory.

Affective priming effects of musical sounds on the processing of word meaning

DOI:10.1162/jocn.2009.21383

PMID:19925192

Recent studies have shown that music is capable of conveying semantically meaningful concepts. Several questions have subsequently arisen particularly with regard to the precise mechanisms underlying the communication of musical meaning as well as the role of specific musical features. The present article reports three studies investigating the role of affect expressed by various musical features in priming subsequent word processing at the semantic level. By means of an affective priming paradigm, it was shown that both musically trained and untrained participants evaluated emotional words congruous to the affect expressed by a preceding chord faster than words incongruous to the preceding chord. This behavioral effect was accompanied by an N400, an ERP typically linked with semantic processing, which was specifically modulated by the (mis)match between the prime and the target. This finding was shown for the musical parameter of consonance/dissonance (Experiment 1) and then extended to mode (major/minor) (Experiment 2) and timbre (Experiment 3). Seeing that the N400 is taken to reflect the processing of meaning, the present findings suggest that the emotional expression of single musical features is understood by listeners as such and is probably processed on a level akin to other affective communications (i.e., prosody or vocalizations) because it interferes with subsequent semantic processing. There were no group differences, suggesting that musical expertise does not have an influence on the processing of emotional expression in music and its semantic connotations.

Affective impact of music vs. lyrics

DOI:10.2190/35T0-U4DT-N09Q-LQHW

Three experiments examined the relative impact of lyrics vs music on mood. In Exp 1, the lyrics, music, or lyrics plus music of a sad song were presented to 42 college students. While the music alone increased positive affect and decreased depression, the lyrics plus music had the opposite effect. In Exp 2 (44 college students), the sad lyrics plus music also increased depression and decreased positive affect even when performed in an upbeat style. Exp 3 (40 college students) showed that pairing the melody with the sad lyrics led Ss to rate the melody alone as less pleasant 1 wk later. Lyrics appear to have greater power to direct mood change than music alone and can imbue a particular melody with affective qualities.

Reduced sensitivity to emotional prosody in congenital amusia rekindles the musical protolanguage hypothesis

DOI:10.1073/pnas.1210344109

A number of evolutionary theories assume that music and language have a common origin as an emotional protolanguage that remains evident in overlapping functions and shared neural circuitry. The most basic prediction of this hypothesis is that sensitivity to emotion in speech prosody derives from the capacity to process music. We examined sensitivity to emotion in speech prosody in a sample of individuals with congenital amusia, a neurodevelopmental disorder characterized by deficits in processing acoustic and structural attributes of music. Twelve individuals with congenital amusia and 12 matched control participants judged the emotional expressions of 96 spoken phrases. Phrases were semantically neutral but prosodic cues (tone of voice) communicated each of six emotional states: happy, tender, afraid, irritated, sad, and no emotion. Congenitally amusic individuals were significantly worse than matched controls at decoding emotional prosody, with decoding rates for some emotions up to 20% lower than that of matched controls. They also reported difficulty understanding emotional prosody in their daily lives, suggesting some awareness of this deficit. The findings support speculations that music and language share mechanisms that trigger emotional responses to acoustic attributes, as predicted by theories that propose a common evolutionary link between these domains.

Affective priming by simple geometric shapes: Evidence from event-related brain potentials

DOI:10.3389/fpsyg.2016.00917

PMCID:PMC4911398

Previous work has demonstrated that simple geometric shapes may convey emotional meaning using various experimental paradigms. However, whether affective meaning of simple geometric shapes can be automatically activated and influence the evaluations of subsequent stimulus is still unclear. Thus the present study employed an affective priming paradigm to investigate whether and how two geometric shapes (circle vs. downward triangle) impact on the affective processing of subsequently presented faces (Experiment 1) and words (Experiment 2). At behavioral level, no significant effect of affective congruency was found. However, ERP results in Experiment 1 and 2 showed a typical effect of affective congruency. The LPP elicited by affectively incongruent trials was larger compared to congruent trials. Our results provide support for the notion that downward triangle is perceived as negative and circle as positive and their emotional meaning can be activated automatically and then exert an influence on the electrophysiological processing of subsequent stimuli. The lack of significant congruent effect in behavioral measures and the inversed N400 congruent effect might reveal that the affective meaning of geometric shapes is weak because they are just abstract threatening cues rather than real threat. In addition, because no male participants are included in the present study, our findings are limited to females.

Priming emotional facial expressions as evidenced by event-related brain potentials

DOI:10.1016/j.ijpsycho.2004.07.006

PMID:15649552

As human faces are important social signals in everyday life, processing of facial affect has recently entered into the focus of neuroscientific research. In the present study, priming of faces showing the same emotional expression was measured with the help of event-related potentials (ERPs) in order to investigate the temporal characteristics of processing facial expressions. Participants classified portraits of unfamiliar persons according to their emotional expression (happy or angry). The portraits were either preceded by the face of a different person expressing the same affect (primed) or the opposite affect (unprimed). ERPs revealed both early and late priming effects, independent of stimulus valence. The early priming effect was characterized by attenuated frontal ERP amplitudes between 100 and 200 ms in response to primed targets. Its dipole sources were localised in the inferior occipitotemporal cortex, possibly related to the detection of expression-specific facial configurations, and in the insular cortex, considered to be involved in affective processes. The late priming effect, an enhancement of the late positive potential (LPP) following unprimed targets, may evidence greater relevance attributed to a change of emotional expressions. Our results (i) point to the view that a change of affect-related facial configuration can be detected very early during face perception and (ii) support previous findings on the amplitude of the late positive potential being rather related to arousal than to the specific valence of an emotional signal.

The interaction of arousal and valence in affective priming: Behavioral and electrophysiological evidence

DOI:10.1016/j.brainres.2012.07.023

PMCID:PMC3694405

Whether emotional arousal influences affective priming remains poorly understood. Whereas valence congruency influenced both the N400 and the LPP, arousal congruency influenced only the LPP. Arousal congruency mainly modulates post-semantic processes. Arousal level of images impacts behavioral and ERP effects of affective priming.

Neural correlates of cross-domain affective priming

DOI:10.1016/j.brainres.2010.03.021

PMCID:PMC2857548

The affective priming effect has mostly been studied using reaction time (RT) measures; however, the neural bases of affective priming are not well established. To understand the neural correlates of cross-domain emotional stimuli presented rapidly, we obtained event-related potential (ERP) measures during an affective priming task using short SOA (stimulus onset asynchrony) conditions. Two sets of 480 picture–word pairs were presented at SOAs of either 150 ms or 250 ms between prime and target stimuli. Participants decided whether the valence of each target word was pleasant or unpleasant. Behavioral results from both SOA conditions were consistent with previous reports of affective priming, with longer RTs for incongruent than congruent pairs at SOAs of 150 ms (771 vs. 738 ms) and 250 ms (765 vs. 720 ms). ERP results revealed that the N400 effect (associated with incongruent pairs in affective processing) occurred at anterior scalp regions at an SOA of 150 ms, and this effect was only observed for negative target words across the scalp at an SOA of 250 ms. In contrast, late positive potentials (LPPs) (associated with attentional resource allocation) occurred across the scalp at an SOA of 250 ms. LPPs were only observed for positive target words at posterior parts of the brain at an SOA of 150 ms. Our finding of ERP signatures at very short SOAs provides the first neural evidence that affective pictures can exert an automatic influence on the evaluation of affective target words.

Electrophysiological correlates of visual affective priming

DOI:10.1016/j.brainresbull.2006.09.023

PMCID:PMC1783676

The present study used event-related potentials (ERPs) to investigate the underlying neural mechanisms of visual affective priming. Eighteen young native English-speakers (6 males, 12 females) participated in the study. Two sets of 720 prime–target pairs (240 affectively congruent, 240 affectively incongruent, and 240 neutral) used either words or pictures as primes and only words as targets. ERPs were recorded from 64 scalp electrodes while participants pressed either “Happy” or “Sad” buttons to indicate target pleasantness. The response time (RT) results confirmed an affective priming effect, with faster responses to affectively congruent trials (659 ms) than affectively incongruent trials (690 ms). Affectively incongruent trials had larger and more negative N200 activation than those to neutral trials. Importantly, a delayed N400 for word prime–target pairs matched the RT results with larger negative amplitudes for incongruent than congruent pairs. This finding suggests that the N400 component is not only sensitive to semantic mismatches, but is also sensitive to affective mismatches for word prime–target pairs.